Book: The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World

CHAPTER FOUR: How Does Your Brain Learn?

Hebb’s rule, as it has come to be known, is the cornerstone of connectionism. Indeed, the field derives its name from the belief that knowledge is stored in the connections between neurons. Donald Hebb, a Canadian psychologist, stated it this way in his 1949 book The Organization of Behavior: “When an axon of cell A is near enough cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A’s efficiency, as one of the cells firing B, is increased.” It’s often paraphrased as “Neurons that fire together wire together.”
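As a rough illustration of "fire together, wire together," here is a minimal sketch of a Hebbian weight update. The function name `hebbian_update`, the learning rate, and the symmetric treatment of co-active neurons are assumptions made for the example; Hebb's own statement is directional (A helps fire B) and gives no formula.

```python
def hebbian_update(weights, activity, rate=0.1):
    """Strengthen the connection between every pair of co-active neurons.

    weights  -- square list of lists; weights[i][j] links neuron j to neuron i
    activity -- list of 0/1 values, one per neuron (1 = firing)
    rate     -- hypothetical learning rate, not taken from the text
    """
    n = len(activity)
    for i in range(n):
        for j in range(n):
            if i != j:
                # The product is 1 only when both neurons fire together.
                weights[i][j] += rate * activity[i] * activity[j]
    return weights


# Three neurons: 0 and 1 repeatedly fire together, 2 stays silent.
weights = [[0.0] * 3 for _ in range(3)]
for _ in range(5):
    hebbian_update(weights, [1, 1, 0])

print(weights[0][1])  # has grown with each co-activation
print(weights[0][2])  # unchanged: neuron 2 never fired alongside neuron 0
```

Note that nothing in this update ever weakens a connection, which is one reason practical connectionist algorithms need more than Hebb's rule alone.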

Hebb’s rule was a confluence of ideas from psychology and neuroscience, with a healthy dose of speculation thrown in. Learning by association was a favorite theme of the British empiricists, from Locke and Hume to John Stuart Mill. In his Principles of Psychology, William James enunciates a general principle of association that’s remarkably similar to Hebb’s rule, with neurons replaced by brain processes and firing efficiency by propagation of excitement. Around the same time, the great Spanish neuroscientist Santiago Ramón y Cajal was making the first detailed observations of the brain, staining individual neurons using the recently invented Golgi method and cataloguing what he saw like a botanist classifying new species of trees. By Hebb’s time, neuroscientists had a rough understanding of how neurons work, but he was the first to propose a mechanism by which they could encode associations.

In symbolist learning, there is a one-to-one correspondence between symbols and the concepts they represent. In contrast, connectionist representations are distributed: each concept is represented by many neurons, and each neuron participates in representing many different concepts. Neurons that excite one another form what Hebb called a cell assembly. Concepts and memories are represented in the brain by cell assemblies. Each of these can include neurons from different brain regions and overlap with other assemblies. The cell assembly for “leg” includes the one for “foot,” which includes assemblies for the image of a foot and the sound of the word foot. If you ask a symbolist system where the concept “New York” is represented, it can point to the precise location in memory where it’s stored. In a connectionist system, the answer is “it’s stored a little bit everywhere.”

Another difference between symbolist and connectionist learning is that the former is sequential, while the latter is parallel. In inverse deduction, we figure out one step at a time what new rules are needed to arrive at the desired conclusion from the premises. In connectionist models, all neurons learn simultaneously according to Hebb’s rule. This mirrors the different properties of computers and brains. Computers do everything one small step at a time, like adding two numbers or flipping a switch, and as a result they need a lot of steps to accomplish anything useful; but those steps can be very fast, because transistors can switch on and off billions of times per second. In contrast, brains can perform a large number of computations in parallel, with billions of neurons working at the same time; but each of those computations is slow, because neurons can fire at best a thousand times per second.

The number of transistors in a computer is catching up with the number of neurons in a human brain, but the brain wins hands down in the number of connections. In a microprocessor, a typical transistor is directly connected to only a few others, and the planar semiconductor technology used severely limits how much better a computer can do. In contrast, a neuron has thousands of synapses. If you’re walking down the street and come across an acquaintance, it takes you only about a tenth of a second to recognize her. At neuron switching speeds, this is barely enough time for a hundred processing steps, but in those hundred steps your brain manages to scan your entire memory, find the best match, and adapt it to the new context (different clothes, different lighting, and so on). In a brain, each processing step can be very complex and involve a lot of information, consonant with a distributed representation.

This does not mean that we can’t simulate a brain with a computer; after all, that’s what connectionist algorithms do. Because a computer is a universal Turing machine, it can implement the brain’s computations as well as any others, provided we give it enough time and memory. In particular, the computer can use speed to make up for lack of connectivity, using the same wire a thousand times over to simulate a thousand wires. In fact, these days the main limitation of computers compared to brains is energy consumption: your brain uses only about as much power as a small lightbulb, while Watson’s supply could light up a whole office building.

To simulate a brain, we need more than Hebb’s rule, however; we need to understand how the brain is built. Each neuron is like a tiny tree, with a prodigious number of roots (the dendrites) and a slender, sinuous trunk (the axon). The brain is a forest of billions of these trees, but there’s something unusual about them. Each tree’s branches make connections (synapses) to the roots of thousands of others, forming a massive tangle like nothing you’ve ever seen. Some neurons have short axons and some have exceedingly long ones, reaching clear from one side of the brain to the other. Placed end to end, the axons in your brain would stretch from Earth to the moon.

And this jungle crackles with electricity. Sparks run along tree trunks and set off more sparks in neighboring trees. Every now and then, a whole area of the jungle whips itself into a frenzy before settling down again. When you wiggle your toe, a series of electric discharges, called action potentials, runs all the way down your spinal cord and leg until it reaches your toe muscles and tells them to move. Your brain at work is a symphony of these electric sparks. If you could sit inside it and watch what happens as you read this page, the scene you’d see would make even the busiest science-fiction metropolis look laid back by comparison. The end result of this phenomenally complex pattern of neuron firings is your consciousness.

In Hebb’s time there was no way to measure synaptic strength or change in it, let alone figure out the molecular biology of synaptic change. Today, we know that synapses do grow (or form anew) when the postsynaptic neuron fires soon after the presynaptic one. Like all cells, neurons have different concentrations of ions inside and outside, creating a voltage across their membrane. When the presynaptic neuron fires, tiny sacs release neurotransmitter molecules into the synaptic cleft. These cause channels in the postsynaptic neuron’s membrane to open, letting in potassium and sodium ions and changing the voltage across the membrane as a result. If enough presynaptic neurons fire close together, the voltage suddenly spikes, and an action potential travels down the postsynaptic neuron’s axon. This also causes the ion channels to become more responsive and new channels to appear, strengthening the synapse. To the best of our knowledge, this is how neurons learn.

The next step is to turn it into an algorithm.

The rise and fall of the perceptron

The first formal model of a neuron was proposed by Warren McCulloch and Walter Pitts in 1943. It looked a lot like the logic gates computers are made of. An OR gate switches on when at least one of its inputs is on, and an AND gate when all of them are on. A McCulloch-Pitts neuron switches on when the number of its active inputs passes some threshold. If the threshold is one, the neuron acts as an OR gate; if the threshold is equal to the number of inputs, as an AND gate. In addition, a McCulloch-Pitts neuron can prevent another from switching on, which models both inhibitory synapses and NOT gates. So a network of neurons can do all the operations a computer does. In the early days, computers were often called electronic brains, and this was not just an analogy.
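The gate behavior described above can be sketched in a few lines. The function names and the way inhibition is modeled (any active inhibitor vetoes firing) are assumptions for illustration, not a transcription of the 1943 paper.

```python
def mp_neuron(inputs, threshold, inhibitors=()):
    """Fire (return 1) if enough inputs are active and no inhibitor is on."""
    if any(inhibitors):
        return 0  # an active inhibitory input prevents firing
    return 1 if sum(inputs) >= threshold else 0


def or_gate(a, b):
    # Threshold of one: a single active input is enough.
    return mp_neuron([a, b], threshold=1)


def and_gate(a, b):
    # Threshold equal to the number of inputs: all must be active.
    return mp_neuron([a, b], threshold=2)


def not_gate(a):
    # An always-on input that fires unless `a` inhibits it.
    return mp_neuron([1], threshold=1, inhibitors=[a])


for a in (0, 1):
    for b in (0, 1):
        print(a, b, or_gate(a, b), and_gate(a, b))
print(not_gate(0), not_gate(1))
```

Since OR, AND, and NOT are enough to build any logic circuit, this is the sense in which a network of such neurons can do everything a computer does.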

What the McCulloch-Pitts neuron doesn’t do is learn. For that we need to give variable weights to the connections between neurons, resulting in what’s called a perceptron. Perceptrons were invented in the late 1950s by Frank Rosenblatt, a Cornell psychologist. A charismatic speaker and lively character, Rosenblatt did more than anyone else to shape the early days of machine learning. The name perceptron derives from his interest in applying his models to perceptual tasks like speech and character recognition. Rather than implement perceptrons in software, which was very slow in those days, Rosenblatt built his own devices. The weights were implemented by variable resistors like those found in dimmable light switches, and weight learning was carried out by electric motors that turned the knobs on the resistors. (Talk about high tech!)

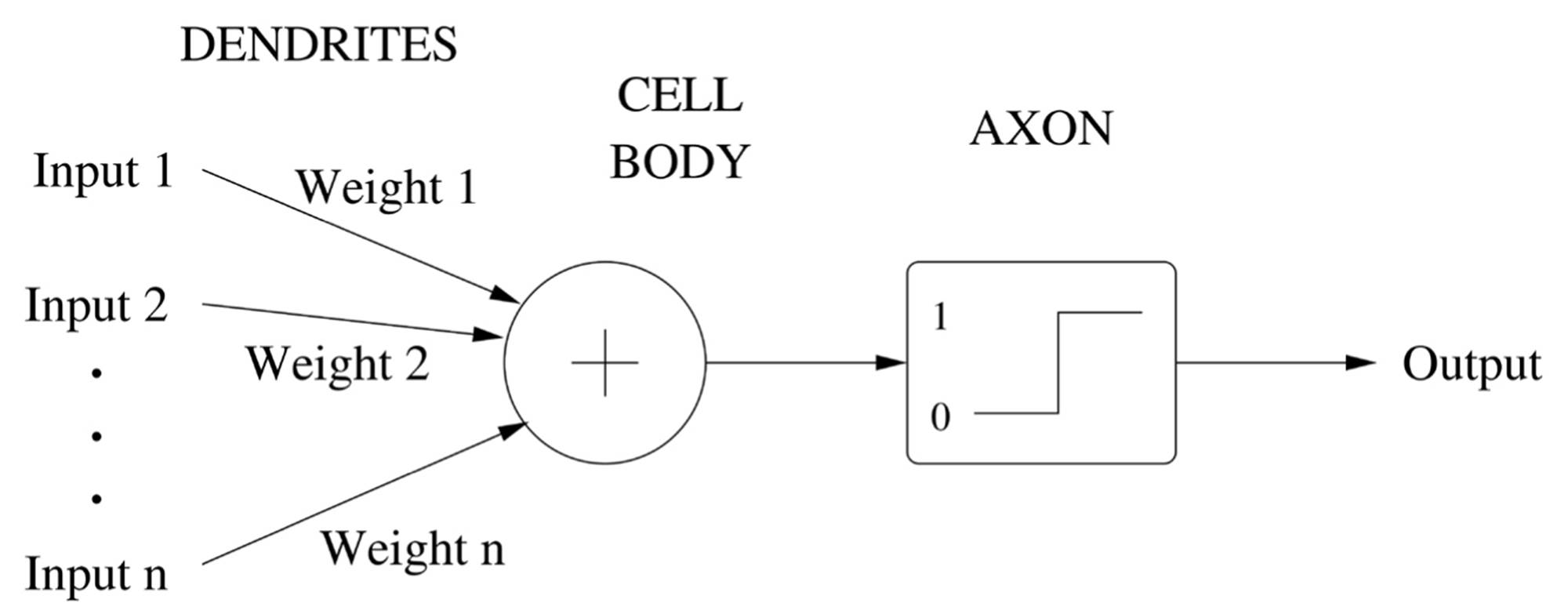

In a perceptron, a positive weight represents an excitatory connection, and a negative weight an inhibitory one. The perceptron outputs 1 if the weighted sum of its inputs is above threshold, and 0 if it’s below. By varying the weights and threshold, we can change the function that the perceptron computes. This ignores a lot of the details of how neurons work, of course, but we want to keep things as simple as possible; our goal is to develop a general-purpose learning algorithm, not to build a realistic model of the brain. If some of the details we ignored turn out to be important, we can always add them in later. Despite our simplifying abstractions, however, we can still see how each part of this model corresponds to a part of the neuron:

The higher an input’s weight, the stronger the corresponding synapse. The cell body adds up all the weighted inputs, and the axon applies a step function to the result. The axon’s box in the diagram shows the graph of a step function: 0 for low values of the input, abruptly changing to 1 when the input reaches the threshold.

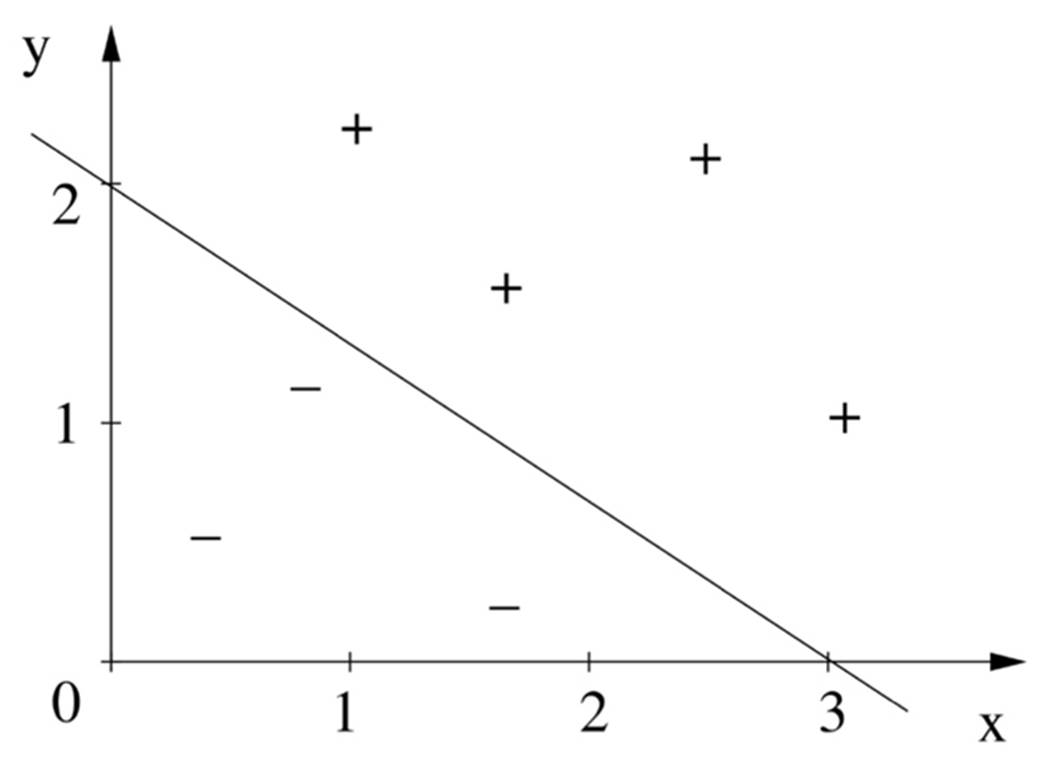

Suppose a perceptron has two continuous inputs x and y. (In other words, x and y can take on any numeric values, not just 0 and 1.) Then each example can be represented by a point on the plane, and the boundary between positive examples (for which the perceptron outputs 1) and negative ones (output 0) is a straight line:

This is because the boundary is the set of points where the weighted sum exactly equals the threshold, and a weighted sum is a linear function. For example, if the weights are 2 for x and 3 for y and the threshold is 6, the boundary is defined by the equation 2 x + 3 y = 6. The point x = 0, y = 2 is on the boundary, and to stay on it we have to take three steps across for every two steps down, so that the gain in x makes up for the loss in y. The resulting points form a straight line.

Learning a perceptron’s weights means varying the direction of the straight line until all the positive examples are on one side and all the negative ones on the other. In one dimension, the boundary is a point; in two, it’s a straight line; in three, it’s a plane; and in more than three, it’s a hyperplane. It’s hard to visualize things in hyperspace, but the math works just the same way. In n dimensions, we have n inputs and the perceptron has n weights. To decide whether the perceptron fires or not, we multiply each weight by the corresponding input and compare the sum of all of them with the threshold.
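To make the arithmetic concrete, here is a minimal sketch of the firing decision, using the weights 2 and 3 and the threshold 6 from the example above. The function names are hypothetical, and treating a point exactly on the boundary as "not firing" is an assumption; the text only says above or below.

```python
def weighted_sum(weights, inputs):
    """Multiply each weight by its input and add up the results."""
    return sum(w * x for w, x in zip(weights, inputs))


def perceptron_fires(weights, inputs, threshold):
    """Return 1 if the weighted sum is above the threshold, else 0."""
    return 1 if weighted_sum(weights, inputs) > threshold else 0


weights, threshold = [2, 3], 6

# Two points on the boundary 2x + 3y = 6: three steps across, two steps down.
print(weighted_sum(weights, [0, 2]))  # 2*0 + 3*2
print(weighted_sum(weights, [3, 0]))  # 2*3 + 3*0

# One point on each side of the line.
print(perceptron_fires(weights, [3, 2], threshold))  # sum is 12, above 6
print(perceptron_fires(weights, [1, 1], threshold))  # sum is 5, below 6
```

The same two functions work unchanged for any number of inputs, which is all that "the math works just the same way" in hyperspace amounts to.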

If all inputs have a weight of one and the threshold is half the number of inputs, then the perceptron fires if more than half its inputs fire. In other words, the perceptron is like a tiny parliament where the majority wins. (Or perhaps not so tiny, considering it can have thousands of members.) It’s not altogether democratic, though, because in general not everyone has an equal vote. A neural network is more like a social network, where a few close friends count for more than thousands of Facebook ones. And it’s the friends you trust most that influence you the most. If a friend recommends a movie and you go see it and like it, next time around you’ll probably follow her advice again. On the other hand, if she keeps gushing about movies you didn’t enjoy, you will start to ignore her opinions (and perhaps your friendship even wanes a bit).

This is how Rosenblatt’s perceptron algorithm learns weights.

Consider the grandmother cell, a favorite thought experiment of cognitive neuroscientists. The grandmother cell is a neuron in your brain that fires whenever you see your grandmother, and only then. Whether or not grandmother cells really exist is an open question, but let’s design one for use in machine learning. A perceptron learns to recognize your grandmother as follows. The inputs to the cell are either the raw pixels in the image or various hardwired features of it, like brown eyes, which takes the value 1 if the image contains a pair of brown eyes and 0 otherwise. In the beginning, all the connections from features to the neuron have small random weights, like the synapses in your brain at birth. Then we show the perceptron a series of images, some of your grandmother and some not. If it fires upon seeing an image of your grandmother, or doesn’t fire upon seeing something else, then no learning needs to happen. (If it ain’t broke, don’t fix it.) But if the perceptron fails to fire when it’s looking at your grandmother, that means the weighted sum of its inputs should have been higher, so we increase the weights of the inputs that are on. (For example, if your grandmother has brown eyes, the weight of that feature goes up.) Conversely, if the perceptron fires when it shouldn’t, we decrease the weights of the active inputs. It’s the errors that drive the learning. Over time, the features that are indicative of your grandmother acquire high weights, and the ones that aren’t get low weights. Once the perceptron always fires upon seeing your grandmother, and only then, the learning is complete.
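The procedure just described can be sketched as follows. Several details are assumptions made to keep the example small and repeatable: the three features (brown eyes, gray hair, beard) and the toy dataset are invented, the weights start at zero rather than at small random values, and the threshold is learned as a bias term, which is a common formulation but not spelled out in the text.

```python
def predict(weights, bias, features):
    """Fire (1) if the weighted sum plus bias is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train_perceptron(examples, n_features, max_epochs=1000):
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for features, label in examples:
            # error is +1 (should have fired), -1 (should not have), or 0.
            error = label - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                bias += error
                for i, x in enumerate(features):
                    weights[i] += error * x  # inactive inputs (x == 0) stay put
        if mistakes == 0:
            break  # every example is classified correctly
    return weights, bias


# Hypothetical features: (brown_eyes, gray_hair, beard); label 1 = grandmother.
examples = [
    ((1, 1, 0), 1),
    ((1, 0, 0), 0),
    ((0, 1, 0), 0),
    ((0, 0, 1), 0),
    ((1, 0, 1), 0),
    ((0, 1, 1), 0),
]
weights, bias = train_perceptron(examples, n_features=3)
print(weights, bias)
print([predict(weights, bias, f) for f, _ in examples])
```

Only mistakes change the weights, and only the inputs that were on when the mistake happened are adjusted, which is the "errors drive the learning" idea in code.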

The perceptron generated a lot of excitement. It was simple, yet it could recognize printed letters and speech sounds just by being trained with examples. A colleague of Rosenblatt’s at Cornell proved that, if the positive and negative examples could be separated by a hyperplane, the perceptron would find it. For Rosenblatt and others, a genuine understanding of how the brain learns seemed within reach, and with it a powerful general-purpose learning algorithm.

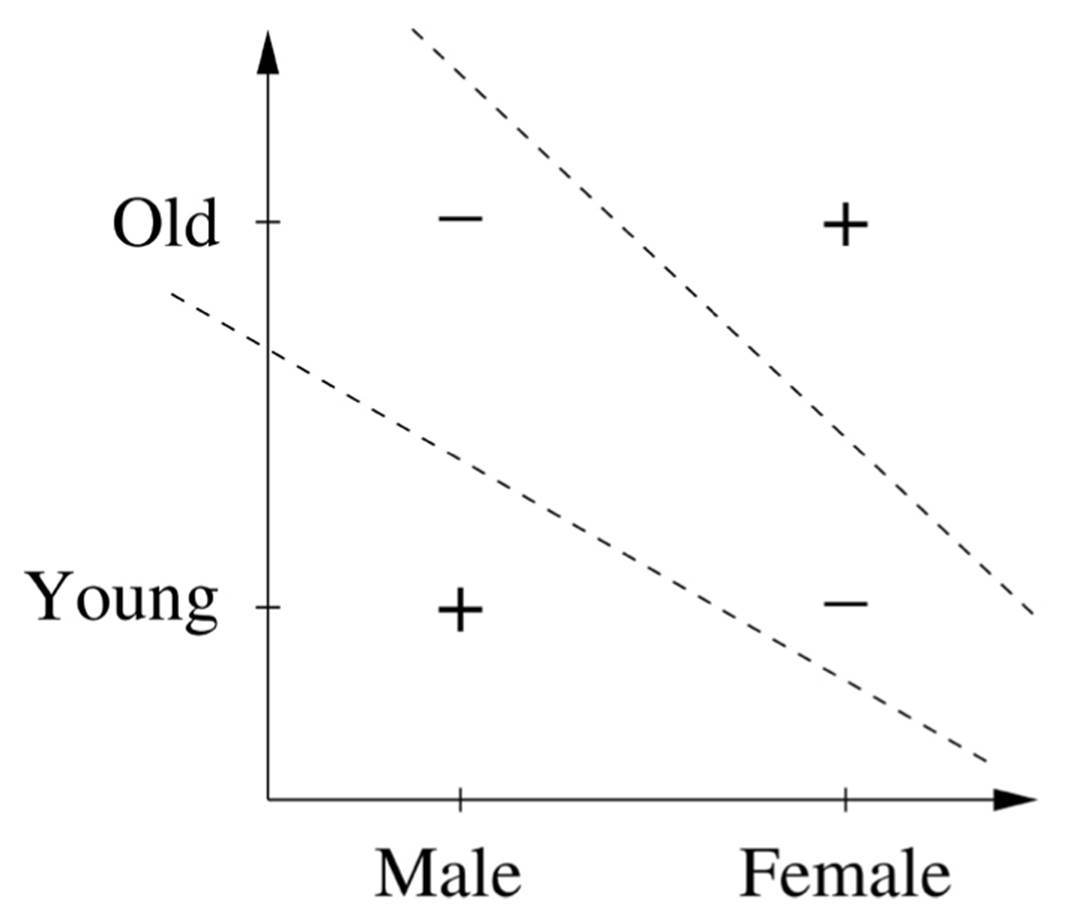

But then the perceptron hit a brick wall. The knowledge engineers were irritated by Rosenblatt’s claims and envious of all the attention and funding neural networks, and perceptrons in particular, were getting. One of them was Marvin Minsky, a former classmate of Rosenblatt’s at the Bronx High School of Science and by then the leader of the AI group at MIT. (Ironically, his PhD had been on neural networks, but he had grown disillusioned with them.) In 1969, Minsky and his colleague Seymour Papert published Perceptrons, a book detailing the shortcomings of the eponymous algorithm, with example after example of simple things it couldn’t learn. The simplest one-and therefore the most damning-was the exclusive-OR function, or XOR for short, which is true if one of its inputs is true but not both. For example, Nike’s two most loyal demographics are supposedly teenage boys and middle-aged women. In other words, you’re likely to buy Nike shoes if you’re young XOR female. Young is good, female is good, but both is not. You’re also an unpromising target for Nike advertising if you’re neither young nor female. The problem with XOR is that there is no straight line capable of separating the positive from the negative examples. This figure shows two failed candidates:

Since perceptrons can only learn linear boundaries, they can’t learn XOR. And if they can’t do even that, they’re not a very good model of how the brain learns, or a viable candidate for the Master Algorithm.
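A small experiment makes the point tangible. The sketch below tries every combination of two weights and a threshold on a coarse grid (the grid itself is an arbitrary choice) and reports whether any single perceptron reproduces a given truth table. A search like this is only an illustration, not a proof; the actual argument is short, though: XOR would require each weight alone to exceed the threshold, the threshold to be at least zero, and yet the two weights together not to exceed it, which is impossible.

```python
from itertools import product


def separates(w1, w2, threshold, truth_table):
    """True if a perceptron with these settings matches every row."""
    return all(
        (1 if w1 * a + w2 * b > threshold else 0) == out
        for (a, b), out in truth_table.items()
    )


def find_perceptron(truth_table, grid):
    """Return some (w1, w2, threshold) that works, or None if none does."""
    for w1, w2, t in product(grid, repeat=3):
        if separates(w1, w2, t, truth_table):
            return (w1, w2, t)
    return None


AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
OR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

grid = [i / 2 for i in range(-8, 9)]  # -4.0 to 4.0 in steps of 0.5

print("AND:", find_perceptron(AND, grid))
print("OR: ", find_perceptron(OR, grid))
print("XOR:", find_perceptron(XOR, grid))  # None: no setting works
```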

A perceptron models only a single neuron’s learning, however, and although Minsky and Papert acknowledged that layers of interconnected neurons should be capable of more, they didn’t see a way to learn them. Neither did anyone else. The problem is that there’s no clear way to change the weights of the neurons in the “hidden” layers to reduce the errors made by the ones in the output layer. Every hidden neuron influences the output via multiple paths, and every error has a thousand fathers. Who do you blame? Or, conversely, who gets the credit for correct outputs? This credit-assignment problem shows up whenever we try to learn a complex model and is one of the central problems in machine learning.

Perceptrons was mathematically unimpeachable, searing in its clarity, and disastrous in its effects. Machine learning at the time was associated mainly with neural networks, and most researchers (not to mention funders) concluded that the only way to build an intelligent system was to explicitly program it. For the next fifteen years, knowledge engineering would hold center stage, and machine learning seemed to have been consigned to the ash heap of history.

Physicist makes brain out of glass

If the history of machine learning were a Hollywood movie, the villain would be Marvin Minsky. He’s the evil queen who gives Snow White a poisoned apple, leaving her in suspended animation. (In a 1988 essay, Seymour Papert even compared himself, tongue-in-cheek, to the huntsman the queen sent to kill Snow White in the forest.) And Prince Charming would be a Caltech physicist by the name of John Hopfield. In 1982, Hopfield noticed a striking analogy between the brain and spin glasses, an exotic material much beloved of statistical physicists. This set off a connectionist renaissance that culminated a few years later in the invention of the first algorithms capable of solving the credit-assignment problem, ushering in a new era where machine learning replaced knowledge engineering as the dominant paradigm in AI.

Spin glasses are not actually glasses, although they have some glass-like properties. Rather, they are magnetic materials. Every electron is a tiny magnet by virtue of its spin, which can point “up” or “down.” In materials like iron, electrons’ spins tend to line up: if an electron with down spin is surrounded by electrons with up spins, it will probably flip to up. When most of the spins in a chunk of iron line up, it turns into a magnet. In ordinary magnets, the strength of interaction between adjacent spins is the same for all pairs, but in a spin glass it can vary; it may even be negative, causing nearby spins to point in opposite directions. The energy of an ordinary magnet is lowest when all its spins align, but in a spin glass, it’s not so simple. Indeed, finding the lowest-energy state of a spin glass is an NP-complete problem, meaning that just about every other difficult optimization problem can be reduced to it. Because of this, a spin glass doesn’t necessarily settle into its overall lowest energy state; much like rainwater may flow downhill into a lake instead of reaching the ocean, a spin glass may get stuck in a local minimum, a state with lower energy than all the states that can be reached from it by flipping a spin, rather than evolve to the global one.

Hopfield noticed an interesting similarity between spin glasses and neural networks: an electron’s spin responds to the behavior of its neighbors much like a neuron does. In the electron’s case, it flips up if the weighted sum of the neighbors exceeds a threshold and flips (or stays) down otherwise. Inspired by this, he defined a type of neural network that evolves over time in the same way that a spin glass does and postulated that the network’s minimum energy states are its memories. Each such state has a “basin of attraction” of initial states that converge to it, and in this way the network can do pattern recognition: for example, if one of the memories is the pattern of black-and-white pixels formed by the digit nine and the network sees a distorted nine, it will converge to the “ideal” one and thereby recognize it. Suddenly, a vast body of physical theory was applicable to machine learning, and a flood of statistical physicists poured into the field, helping it break out of the local minimum it had been stuck in.
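Here is a minimal sketch of that idea, under several assumptions: neurons take the values +1 and -1 like spins, the memory is stored with a Hebbian outer-product rule, thresholds are zero, and the twelve-element pattern is an arbitrary stand-in for the pixels of a digit. All names are hypothetical.

```python
def train_hopfield(patterns):
    """Store patterns of +1/-1 values in a symmetric weight matrix."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j] / n
    return weights


def energy(weights, state):
    """Spin-glass style energy: lower when connected units agree with their weights."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


def recall(weights, state, max_sweeps=10):
    """Update one neuron at a time until nothing changes."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


memory = [1, 1, -1, -1, 1, -1, 1, 1, -1, 1, -1, -1]
weights = train_hopfield([memory])

distorted = list(memory)
distorted[0] = -distorted[0]  # flip two "pixels"
distorted[5] = -distorted[5]

restored = recall(weights, distorted)
print(restored == memory)
print(energy(weights, distorted), energy(weights, memory))
```

The distorted pattern starts higher up the energy landscape and rolls down into the stored memory, which is what a basin of attraction means here.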

A spin glass is still a very unrealistic model of the brain, though. For one, spin interactions are symmetric, and connections between neurons in the brain are not. Another big issue that Hopfield’s model ignored is that real neurons are statistical: they don’t deterministically turn on and off as a function of their inputs; rather, as the weighted sum of inputs increases, the neuron becomes more likely to fire, but it’s not certain that it will. In 1985, David Ackley, Geoff Hinton, and Terry Sejnowski replaced the deterministic neurons in Hopfield networks with probabilistic ones. A neural network now had a probability distribution over its states, with higher-energy states being exponentially less likely than lower-energy ones. In fact, the probability of finding the network in a particular state was given by the well-known Boltzmann distribution from thermodynamics, so they called their network a Boltzmann machine.
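The two ideas in this paragraph, probabilistic firing and a Boltzmann distribution over network states, can be sketched for a network small enough to enumerate. The three-neuron weights, the 0/1 state encoding, the absence of thresholds, and the temperature parameter are all assumptions chosen for illustration.

```python
import math
from itertools import product


def firing_probability(weighted_input, temperature=1.0):
    """Chance that a probabilistic neuron fires, rising with its input."""
    return 1.0 / (1.0 + math.exp(-weighted_input / temperature))


def energy(weights, state):
    """Energy of a 0/1 state; each connected pair is counted once."""
    n = len(state)
    return -sum(weights[i][j] * state[i] * state[j]
                for i in range(n) for j in range(i + 1, n))


def boltzmann_distribution(weights, temperature=1.0):
    """Probability of every state: proportional to exp(-energy / temperature)."""
    n = len(weights)
    states = list(product([0, 1], repeat=n))
    unnormalized = [math.exp(-energy(weights, s) / temperature) for s in states]
    total = sum(unnormalized)
    return {s: u / total for s, u in zip(states, unnormalized)}


# Hypothetical network: neurons 0 and 1 excite each other, 0 and 2 inhibit.
weights = [
    [0.0, 2.0, -1.0],
    [2.0, 0.0, 0.0],
    [-1.0, 0.0, 0.0],
]
distribution = boltzmann_distribution(weights)
for state, probability in sorted(distribution.items(), key=lambda kv: -kv[1]):
    print(state, round(energy(weights, state), 1), round(probability, 3))
```

Enumerating states like this is only feasible for a handful of neurons, since the number of states doubles with each one added; real Boltzmann machines sample instead.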

A Boltzmann machine has a mix of sensory and hidden neurons (analogous to, for example, the retina and the brain, respectively). It learns by being alternately awake and asleep, just like humans. While awake, the sensory neurons fire as dictated by the data, and the hidden ones evolve according to the network dynamics and the sensory input. For example, if the network is shown an image of a nine, the neurons corresponding to the black pixels in the image stay on, the others stay off, and the hidden ones fire randomly according to the Boltzmann distribution given those pixel values. During sleep, the machine dreams, leaving both sensory and hidden neurons free to wander. Just before the new day dawns, it compares the statistics of its states during the dream and during yesterday’s activities and changes the connection weights so that they match. If two neurons tend to fire together during the day but less so while asleep, the weight of their connection goes up; if it’s the opposite, it goes down. By doing this day after day, the predicted correlations between sensory neurons evolve until they match the real ones. At this point, the Boltzmann machine has learned a good model of the data and effectively solved the credit-assignment problem.

Geoff Hinton went on to try many variations on Boltzmann machines over the following decades. Hinton, a psychologist turned computer scientist and great-great-grandson of George Boole, the inventor of the logical calculus used in all digital computers, is the world’s leading connectionist. He has tried longer and harder to understand how the brain works than anyone else. He tells of coming home from work one day in a state of great excitement, exclaiming “I did it! I’ve figured out how the brain works!” His daughter replied, “Oh, Dad, not again!” Hinton’s latest passion is deep learning, which we’ll meet later in this chapter. He was also involved in the development of backpropagation, an even better algorithm than Boltzmann machines for solving the credit-assignment problem that we’ll look at next. Boltzmann machines could solve the credit-assignment problem in principle, but in practice learning was very slow and painful, making this approach impractical for most applications. The next breakthrough involved getting rid of another oversimplification that dated all the way back to McCulloch and Pitts.

The most important curve in the world

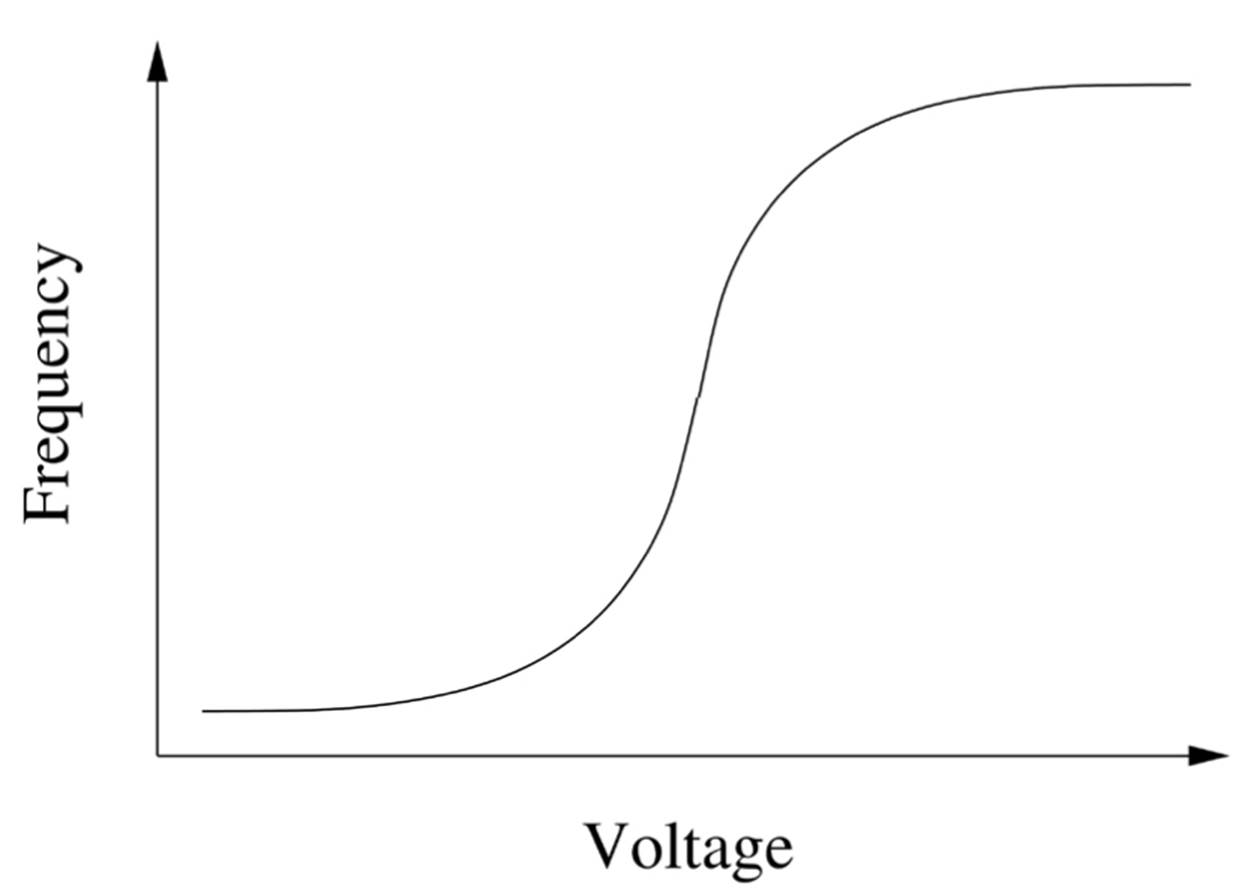

As far as its neighbors are concerned, a neuron can only be in one of two states: firing or not firing. This misses an important subtlety, however. Action potentials are short lived; the voltage spikes for a small fraction of a second and immediately goes back to its resting state. And a single spike barely registers in the receiving neuron; it takes a train of spikes closely on each other’s heels to wake it up. A typical neuron spikes occasionally in the absence of stimulation, spikes more and more frequently as stimulation builds up, and saturates at the fastest spiking rate it can muster, beyond which increased stimulation has no effect. Rather than a logic gate, a neuron is more like a voltage-to-frequency converter. The curve of frequency as a function of voltage looks like this:

This curve, which looks like an elongated S, is variously known as the logistic, sigmoid, or S curve. Peruse it closely, because it’s the most important curve in the world. At first the output increases slowly with the input, so slowly it seems constant. Then it starts to change faster, then very fast, then slower and slower until it becomes almost constant again. The transfer curve of a transistor, which relates its input and output voltages, is also an S curve. So both computers and the brain are filled with S curves. But it doesn’t end there. The S curve is the shape of phase transitions of all kinds: the probability of an electron flipping its spin as a function of the applied field, the magnetization of iron, the writing of a bit of memory to a hard disk, an ion channel opening in a cell, ice melting, water evaporating, the inflationary expansion of the early universe, punctuated equilibria in evolution, paradigm shifts in science, the spread of new technologies, white flight from multiethnic neighborhoods, rumors, epidemics, revolutions, the fall of empires, and much more. The Tipping Point could equally well (if less appealingly) be entitled The S Curve. An earthquake is a phase transition in the relative position of two adjacent tectonic plates. A bump in the night is just the sound of the microscopic tectonic plates in your house’s walls shifting, so don’t be scared. Joseph Schumpeter said that the economy evolves by cracks and leaps: S curves are the shape of creative destruction. The effect of financial gains and losses on your happiness follows an S curve, so don’t sweat the big stuff. The probability that a random logical formula is satisfiable (the quintessential NP-complete problem) undergoes a phase transition from almost 1 to almost 0 as the formula’s length increases. Statistical physicists spend their lives studying phase transitions.

In Hemingway’s The Sun Also Rises, when Mike Campbell is asked how he went bankrupt, he replies, “Two ways. Gradually and then suddenly.” The same could be said of Lehman Brothers. That’s the essence of an S curve. One of the futurist Paul Saffo’s rules of forecasting is: look for the S curves. When you can’t get the temperature in the shower just right-first it’s too cold, and then it quickly shifts to too hot-blame the S curve. When you make popcorn, watch the S curve’s progress: at first nothing happens, then a few kernels pop, then a bunch more, then the bulk of them in a sudden burst of fireworks, then a few more, and then it’s ready to eat. Every motion of your muscles follows an S curve: slow, then fast, then slow again. Cartoons gained a new naturalness when the animators at Disney figured this out and started copying it. Your eyes move in S curves, fixating on one thing and then another, along with your consciousness. Mood swings are phase transitions. So are birth, adolescence, falling in love, getting married, getting pregnant, getting a job, losing it, moving to a new town, getting promoted, retiring, and dying. The universe is a vast symphony of phase transitions, from the cosmic to the microscopic, from the mundane to the life changing.

The S curve is not just important as a model in its own right; it’s also the jack-of-all-trades of mathematics. If you zoom in on its midsection, it approximates a straight line. Many phenomena we think of as linear are in fact S curves, because nothing can grow without limit. Because of relativity, and contra Newton, acceleration does not increase linearly with force, but follows an S curve centered at zero. So does electric current as a function of voltage in the resistors found in electronic circuits, or in a light bulb (until the filament melts, which is itself another phase transition). If you zoom out from an S curve, it approximates a step function, with the output suddenly changing from zero to one at the threshold. So depending on the input voltages, the same curve represents the workings of a transistor in both digital computers and analog devices like amplifiers and radio tuners. The early part of an S curve is effectively an exponential, and near the saturation point it approximates exponential decay. When someone talks about exponential growth, ask yourself: How soon will it turn into an S curve? When will the population bomb peter out, Moore’s law lose steam, or the singularity fail to happen? Differentiate an S curve and you get a bell curve: slow, fast, slow becomes low, high, low. Add a succession of staggered upward and downward S curves, and you get something close to a sine wave. In fact, every function can be closely approximated by a sum of S curves: when the function goes up, you add an S curve; when it goes down, you subtract one. Children’s learning is not a steady improvement but an accumulation of S curves. So is technological change. Squint at the New York City skyline and you can see a sum of S curves unfolding across the horizon, each as sharp as a skyscraper’s corner.
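Three of these claims are easy to check numerically: the midsection is nearly a straight line, zooming out gives something close to a step, and the slope traces a bell shape. The sketch below uses the standard logistic function; the `steepness` parameter is a hypothetical stand-in for zooming in or out.

```python
import math


def sigmoid(x, steepness=1.0):
    """The logistic S curve, rising from 0 to 1 and centered at x = 0."""
    return 1.0 / (1.0 + math.exp(-steepness * x))


def sigmoid_slope(x):
    """Derivative of the S curve: low, high, low, like a bell."""
    s = sigmoid(x)
    return s * (1.0 - s)


# Zoomed in: near the middle the curve hugs the straight line 0.5 + x/4.
for x in (-0.1, 0.0, 0.1):
    print(x, sigmoid(x), 0.5 + x / 4)

# Zoomed out: a very steep S curve is close to a step function.
print([round(sigmoid(x, steepness=50), 3) for x in (-1, -0.1, 0.1, 1)])

# Differentiated: the slope peaks in the middle and falls off on both sides.
print([round(sigmoid_slope(x), 3) for x in (-4, -2, 0, 2, 4)])
```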

Most importantly for us, S curves lead to a new solution to the credit-assignment problem. If the universe is a symphony of phase transitions, let’s model it with one. That’s what the brain does: it tunes the system of phase transitions inside to the one outside. So let’s replace the perceptron’s step function with an S curve and see what happens.

Climbing mountains in hyperspace

In the perceptron algorithm, the error signal is all or none: you got it either right or wrong. That’s not much to go on, particularly if you have a network of many neurons. You may know that the output neuron is wrong (oops, that wasn’t your grandmother), but what about some neuron deep inside the brain? What does it even mean for such a neuron to be right or wrong? If the neurons’ output is continuous instead of binary, the picture changes. For starters, we now know how much the output neuron is wrong by: the difference between it and the desired output. If the neuron should be firing away (“Oh hi, Grandma!”) and is firing a little, that’s better than if it’s not firing at all. More importantly, we can now propagate that error to the hidden neurons: if the output neuron should fire more and neuron A connects to it, then the more A is firing, the more we should strengthen their connection; but if A is inhibited by another neuron B, then B should fire less, and so on. Based on the feedback from all the neurons it’s connected to, each neuron decides how much more or less to fire. Based on that and the activity of its input neurons, it strengthens or weakens its connections to them. I need to fire more, and neuron B is inhibiting me? Lower its weight. And neuron C is firing away, but its connection to me is weak? Strengthen it. My “customer” neurons, downstream in the network, will tell me how well I’m doing in the next round.

Whenever the learner’s “retina” sees a new image, that signal propagates forward through the network until it produces an output. Comparing this output with the desired one yields an error signal, which then propagates back through the layers until it reaches the retina. Based on this returning signal and on the inputs it had received during the forward pass, each neuron adjusts its weights. As the network sees more and more images of your grandmother and other people, the weights gradually converge to values that let it discriminate between the two. Backpropagation, as this algorithm is known, is phenomenally more powerful than the perceptron algorithm. A single neuron could only learn straight lines. Given enough hidden neurons, a multilayer perceptron, as it’s called, can represent arbitrarily convoluted frontiers. This makes backpropagation, or simply backprop, the connectionists’ master algorithm.
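The forward and backward passes can be sketched for a tiny network with one hidden layer. Everything specific here is an assumption made for the example: the squared-error measure, three hidden neurons, the learning rate, the random starting weights, and XOR as the training task. It is a sketch of the general idea, not the chapter's exact formulation.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(net, x):
    """Forward pass: return the hidden activations and the output."""
    hidden = [sigmoid(b + sum(w * xi for w, xi in zip(ws, x)))
              for ws, b in zip(net["w_hidden"], net["b_hidden"])]
    output = sigmoid(net["b_out"]
                     + sum(w * h for w, h in zip(net["w_out"], hidden)))
    return hidden, output


def backprop(net, x, target):
    """Backward pass: gradients of 0.5 * (output - target)**2 for one example."""
    hidden, output = forward(net, x)
    # How much the output neuron's total input should change.
    delta_out = (output - target) * output * (1 - output)
    # Pass the blame back to each hidden neuron through its outgoing weight.
    delta_hidden = [delta_out * w * h * (1 - h)
                    for w, h in zip(net["w_out"], hidden)]
    return {
        "w_out": [delta_out * h for h in hidden],
        "b_out": delta_out,
        "w_hidden": [[d * xi for xi in x] for d in delta_hidden],
        "b_hidden": delta_hidden,
    }


def train(net, examples, rate=0.5, epochs=2000):
    for _ in range(epochs):
        for x, target in examples:
            g = backprop(net, x, target)
            net["b_out"] -= rate * g["b_out"]
            for k in range(len(net["w_out"])):
                net["w_out"][k] -= rate * g["w_out"][k]
                net["b_hidden"][k] -= rate * g["b_hidden"][k]
                for i in range(len(x)):
                    net["w_hidden"][k][i] -= rate * g["w_hidden"][k][i]


def total_error(net, examples):
    return sum(0.5 * (forward(net, x)[1] - t) ** 2 for x, t in examples)


random.seed(0)
n_hidden = 3
net = {
    "w_hidden": [[random.uniform(-1, 1) for _ in range(2)]
                 for _ in range(n_hidden)],
    "b_hidden": [random.uniform(-1, 1) for _ in range(n_hidden)],
    "w_out": [random.uniform(-1, 1) for _ in range(n_hidden)],
    "b_out": random.uniform(-1, 1),
}
xor = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

error_before = total_error(net, xor)
train(net, xor)
error_after = total_error(net, xor)
print(error_before, error_after)  # results depend on the starting weights
```

How far the error falls depends on the starting weights and the number of passes, so this sketch makes no promise about the final value.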

Backprop is an instance of a strategy that is very common in both nature and technology: if you’re in a hurry to get to the top of the mountain, climb the steepest slope you can find. The technical term for this is gradient ascent (if you want to get to the top) or gradient descent (if you’re looking for the valley bottom). Bacteria can find food by swimming up the concentration gradient of, say, glucose molecules, and they can flee from poisons by swimming down their gradient. All sorts of things, from aircraft wings to antenna arrays, can be optimized by gradient descent. Backprop is an efficient way to do it in a multilayer perceptron: keep tweaking the weights so as to lower the error, and stop when all tweaks fail. With backprop, you don’t have to figure out how to tweak each neuron’s weights from scratch, which would be too slow; you can do it layer by layer, tweaking each neuron based on how you tweaked the neurons it connects to. If you had to throw out your entire machine-learning toolkit in an emergency save for one tool, gradient descent is probably the one you’d want to hold on to.

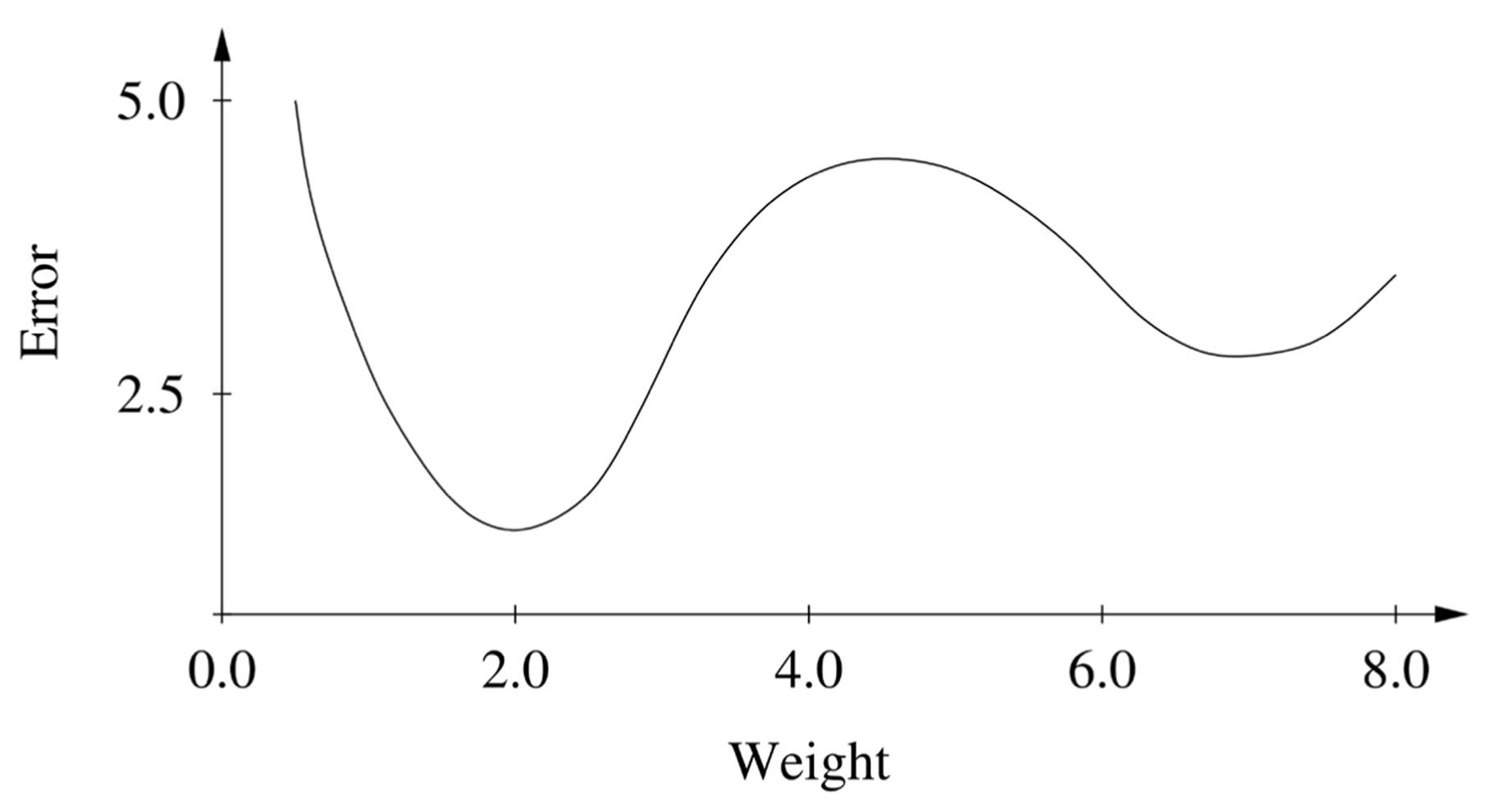

So does backprop solve the machine-learning problem? Can we just throw together a big pile of neurons, wait for it to do its magic, and on the way to the bank collect a Nobel Prize for figuring out how the brain works? Alas, life is not that easy. Suppose your network has only one weight, and this is the graph of the error as a function of it:

The optimal weight, where the error is lowest, is 2.0. If the network starts out with a weight of 0.75, for example, backprop will get to the optimum in a few steps, like a ball rolling downhill. If it starts at 5.5, on the other hand, backprop will roll down to 7.0 and remain stuck there. Backprop, with its incremental weight changes, doesn’t know how to find the global error minimum, and local ones can be arbitrarily bad, like mistaking your grandmother for a hat. With one weight, you could try every possible value at increments of 0.01 and find the optimum that way. But with thousands of weights, let alone millions or billions, this is not an option because the number of points on the grid goes up exponentially with the number of weights. The global minimum is hidden somewhere in the unfathomable vastness of hyperspace, and good luck finding it.
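The one-weight story can be replayed in a few lines of Python. The error curve below is a made-up quartic, chosen only so that its global minimum sits at 2.0 and a shallower local minimum sits at 7.0, matching the numbers in the text; the learning rate, step count, and function names are likewise assumptions of this sketch.

```python
def error(w):
    """Hypothetical error curve with minima at w = 2.0 (global) and w = 7.0 (local)."""
    return w**4 / 4 - 14 * w**3 / 3 + 59 * w**2 / 2 - 70 * w

def slope(w):
    """Derivative of error(w); it is zero at w = 2, 5, and 7."""
    return (w - 2.0) * (w - 5.0) * (w - 7.0)

def descend(w, lr=0.02, steps=500):
    """Plain gradient descent: repeatedly take a small step downhill."""
    for _ in range(steps):
        w -= lr * slope(w)
    return w

def grid_search(lo=0.0, hi=10.0, step=0.01):
    """Brute force: try every weight on a grid and keep the best one."""
    n = int(round((hi - lo) / step))
    candidates = [lo + i * step for i in range(n + 1)]
    return min(candidates, key=error)

if __name__ == "__main__":
    print("start 0.75 ->", round(descend(0.75), 3))   # rolls to the global minimum
    print("start 5.5  ->", round(descend(5.5), 3))    # gets stuck in the local one
    print("grid search ->", round(grid_search(), 2))
```

The grid search here checks about a thousand points. With two weights the same grid would need about a million points, and with ten weights about 10^30, which is why brute force stops being an option almost immediately.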

Imagine you’ve been kidnapped and left blindfolded somewhere in the Himalayas. Your head is throbbing, and your memory is not too good, either. All you know is you need to get to the top of Mount Everest. What do you do? You take a step forward and nearly slide into a ravine. After catching your breath, you decide to be a bit more systematic. You carefully feel around with your foot until you find the highest point you can and step gingerly to that point. Then you do the same again. Little by little, you get higher and higher. After a while, every step you can take is down, and you stop. That’s gradient ascent. If the Himalayas were just Mount Everest, and Everest was a perfect cone, it would work like a charm. But more likely, when you get to a place where every step is down, you’re still very far from the top. You’re just standing on a foothill somewhere, and you’re stuck. That’s what happens to backprop, except it climbs mountains in hyperspace instead of 3-D. If your network has a single neuron, just climbing to better weights one step at a time will get you to the top. But with a multilayer perceptron, the landscape is very rugged; good luck finding the highest peak.

This was part of the reason Minsky, Papert, and others couldn’t see how to learn multilayer perceptrons. They could imagine replacing step functions by S curves and doing gradient descent, but then they were faced with the problem of local minima of the error. In those days researchers didn’t trust computer simulations; they demanded mathematical proof that an algorithm would work, and there’s no such proof for backprop. But what we’ve come to realize is that most of the time a local minimum is fine. The error surface often looks like the quills of a porcupine, with many steep peaks and troughs, but it doesn’t really matter if we find the absolute lowest trough; any one will do. Better still, a local minimum may in fact be preferable because it’s less likely to prove to have overfit our data than the global one.

Hyperspace is a double-edged sword. On the one hand, the higher dimensional the space, the more room it has for highly convoluted surfaces and local optima. On the other hand, to be stuck in a local optimum you have to be stuck in every dimension, so it’s more difficult to get stuck in many dimensions than it is in three. In hyperspace there are mountain passes all over the (hyper) place. So, with a little help from a human sherpa, backprop can often find its way to a perfectly good set of weights. It may be only the mystical valley of Shangri-La, not the sea, but why complain if in hyperspace there are millions of Shangri-Las, each with billions of mountain passes leading to it?

Beware of attaching too much meaning to the weights backprop finds, however. Remember that there are probably many very different ones that are just as good. Learning in multilayer perceptrons is a chaotic process in the sense that starting in slightly different places can cause you to wind up at very different solutions. The phenomenon is the same whether the slight difference is in the initial weights or the training data and manifests itself in all powerful learners, not just backprop.

We could do away with the problem of local optima by taking out the S curves and just letting each neuron output the weighted sum of its inputs. That would make the error surface very smooth, leaving only one minimum: the global one. The problem, though, is that a linear function of linear functions is still just a linear function, so a network of linear neurons is no better than a single neuron. A linear brain, no matter how large, is dumber than a roundworm. S curves are a nice halfway house between the dumbness of linear functions and the hardness of step functions.
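The claim that stacked linear neurons collapse into a single layer is easy to check numerically. In this small Python sketch the weight values are arbitrary, the helper names are made up for the example, and bias terms are left out for brevity (including them gives the same conclusion).

```python
def matvec(M, v):
    """Apply a layer of linear neurons (one row of weights per neuron) to inputs v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(A, B):
    """Multiply two weight matrices."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

# Arbitrary illustrative weights: 2 inputs -> 3 hidden neurons -> 1 output.
W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
W2 = [[2.0, -1.0, 0.5]]
x = [4.0, -2.0]

two_layer = matvec(W2, matvec(W1, x))   # run the input through both layers
collapsed = matmul(W2, W1)              # a single equivalent layer of weights
one_layer = matvec(collapsed, x)

if __name__ == "__main__":
    print(two_layer, one_layer)
```

Both computations give the same output for every input, so the hidden layer buys nothing until a nonlinearity such as the S curve is placed between the layers.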

The perceptron’s revenge

Backprop was invented in 1986 by David Rumelhart, a psychologist at the University of California, San Diego, with the help of Geoff Hinton and Ronald Williams. Among other things, they showed that backprop can learn XOR, enabling connectionists to thumb their noses at Minsky and Papert. Recall the Nike example: young men and middle-aged women are the most likely buyers of Nike shoes. We can represent this with a network of three neurons: one that fires when it sees a young male, another that fires when it sees a middle-aged female, and another that fires when either of those does. And with backprop we can learn the appropriate weights, resulting in a successful Nike prospect detector. (So there, Marvin.)
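To see that such a three-neuron network exists at all, here is a sketch with weights picked by hand rather than learned by backprop. The input encoding (1 for male or young, 0 for female or middle-aged), the weights, and the thresholds are assumptions made for this illustration, and step functions are used in place of S curves to keep the arithmetic obvious.

```python
def step(z):
    """Fire (1) if the weighted sum exceeds the threshold, else stay silent (0)."""
    return 1 if z > 0 else 0

def nike_prospect(is_male, is_young):
    """Hand-wired detector; inputs are 0 or 1 (assumed encoding)."""
    # Hidden neuron 1 fires only for a young male (both inputs on).
    young_male = step(is_male + is_young - 1.5)
    # Hidden neuron 2 fires only for a middle-aged female (both inputs off).
    middle_aged_female = step(-is_male - is_young + 0.5)
    # Output neuron fires when either hidden neuron does.
    return step(young_male + middle_aged_female - 0.5)

if __name__ == "__main__":
    for is_male in (0, 1):
        for is_young in (0, 1):
            print(is_male, is_young, nike_prospect(is_male, is_young))
```

No single neuron can draw this boundary, because the two groups of buyers sit at opposite corners of the input square. Backprop's contribution is finding weights like these automatically from examples.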

In an early demonstration of the power of backprop, Terry Sejnowski and Charles Rosenberg trained a multilayer perceptron to read aloud. Their NETtalk system scanned the text, selected the correct phonemes according to context, and fed them to a speech synthesizer. NETtalk not only generalized accurately to new words, which knowledge-based systems could not, but it learned to speak in a remarkably human-like way. Sejnowski used to mesmerize audiences at research meetings by playing a tape of NETtalk’s progress: babbling at first, then starting to make sense, then speaking smoothly with only the occasional error. (You can find samples on YouTube by typing “sejnowski nettalk.”)

Neural networks’ first big success was in predicting the stock market. Because they could detect small nonlinearities in very noisy data, they beat the linear models then prevalent in finance and their use spread. A typical investment fund would train a separate network for each of a large number of stocks, let the networks pick the most promising ones, and then have human analysts decide which of those to invest in. A few funds, however, went all the way and let the learners themselves buy and sell. Exactly how all these fared is a closely guarded secret, but it’s probably not an accident that machine learners keep disappearing into hedge funds at an alarming rate.

Nonlinear models are important far beyond the stock market. Scientists everywhere use linear regression because that’s what they know, but more often than not the phenomena they study are nonlinear, and a multilayer perceptron can model them. Linear models are blind to phase transitions; neural networks soak them up like a sponge.

Another notable early success of neural networks was learning to drive a car. Driverless cars first broke into the public consciousness with the DARPA Grand Challenges in 2004 and 2005, but over a decade earlier, researchers at Carnegie Mellon had already successfully trained a multilayer perceptron to drive a car by detecting the road in video images and appropriately turning the steering wheel. Carnegie Mellon’s car managed to drive coast to coast across America with very blurry vision (thirty by thirty-two pixels), a brain smaller than a worm’s, and only a few assists from the human copilot. (The project was dubbed “No Hands Across America.”) It may not have been the first truly self-driving car, but it did compare favorably with most teenage drivers.

Backprop’s applications are now too many to count. As its fame has grown, more of its history has come to light. It turns out that, as is often the case in science, backprop was invented more than once. Yann LeCun in France and others hit on it at around the same time as Rumelhart. A paper on backprop was rejected by the leading AI conference in the early 1980s because, according to the reviewers, Minsky and Papert had already proved that perceptrons don’t work. In fact, Rumelhart is credited with inventing backprop by the Columbus test: Columbus was not the first person to discover America, but the last. It turns out that Paul Werbos, a graduate student at Harvard, had proposed a similar algorithm in his PhD thesis in 1974. And in a supreme irony, Arthur Bryson and Yu-Chi Ho, two control theorists, had done the same even earlier: in 1969, the same year that Minsky and Papert published Perceptrons! Indeed, the history of machine learning itself shows why we need learning algorithms. If algorithms that automatically find related papers in the scientific literature had existed in 1969, they could have potentially helped avoid decades of wasted time and accelerated who knows what discoveries.

Among the many ironies of the history of the perceptron, perhaps the saddest is that Frank Rosenblatt died in a boating accident in Chesapeake Bay in 1969 and never lived to see the second act of his creation.

A complete model of a cell

A living cell is a quintessential example of a nonlinear system. The cell performs all of its functions by turning raw materials into end products through a complex web of chemical reactions. We can discover the structure of this network using symbolist methods like inverse deduction, as we saw in the last chapter, but to build a complete model of a cell we need to get quantitative, learning the parameters that couple the expression levels of different genes, relate environmental variables to internal ones, and so on. This is difficult because there is no simple linear relationship between these quantities. Rather, the cell maintains its stability through interlocking feedback loops, leading to very complex behavior. Backpropagation is well suited to this problem because of its ability to efficiently learn nonlinear functions. If we had a complete map of the cell’s metabolic pathways and enough observations of all the relevant variables, backprop could in principle learn a detailed model of the cell, with a multilayer perceptron to predict each variable as a function of its immediate causes.

For the foreseeable future, however, we’ll have only partial knowledge of cells’ metabolic networks and be able to observe only a fraction of the variables we’d like to. Learning useful models despite all this missing information, and despite all the inevitable inconsistencies in the information that is available, calls for Bayesian methods, which we’ll delve into in Chapter 6. The same goes for making predictions for a particular patient, model in hand: the evidence available is necessarily noisy and incomplete, and Bayesian inference makes the best of it. It helps that, if the goal is to cure cancer, we don’t necessarily need to understand all the details of how tumor cells work, only enough to disable them without harming normal cells. In Chapter 6, we’ll also see how to orient learning toward the goal while steering clear of the things we don’t know and don’t need to know.

More immediately, we know we can use inverse deduction to infer the structure of the cell’s networks from data and previous knowledge, but there’s a combinatorial explosion of ways to apply it, and we need a strategy. Since metabolic networks were designed by evolution, perhaps simulating it in our learning algorithms is the way to go. In the next chapter, we’ll see how to do just that.

Deeper into the brain

When backprop first hit the streets, connectionists had visions of quickly learning larger and larger networks until, hardware permitting, they amounted to artificial brains. It didn’t turn out that way. Learning networks with one hidden layer was fine, but after that things soon got very difficult. Networks with a few layers worked only if they were carefully designed for the application (character recognition, say). Beyond that, backprop broke down. As we add layers, the error signal becomes more and more diffuse, like a river branching into smaller and smaller tributaries, until we’re down to individual raindrops that just don’t register. Learning with dozens or hundreds of hidden layers, like the brain, remained a distant dream, and by the mid-1990s, the excitement for multilayer perceptrons had petered out. A hard core of connectionists soldiered on, but by and large the attention of the machine-learning field moved elsewhere. (We’ll survey those lands in Chapters 6 and 7.)
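One commonly cited mechanism behind this fading signal can be shown with a toy calculation. The sketch below is an assumption-laden illustration, not a model of any real network: it chains single sigmoid neurons one after another, with a made-up weight of 1.0, and measures how much the final output changes when the first input is nudged.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def input_gradient(depth, weight=1.0, x=0.5):
    """d(output)/d(input) for a chain of `depth` single sigmoid neurons."""
    a = x
    grad = 1.0
    for _ in range(depth):
        a = sigmoid(weight * a)
        # Chain rule: each layer multiplies the signal by weight * slope,
        # and the slope of the S curve, a * (1 - a), is never more than 0.25.
        grad *= weight * a * (1 - a)
    return grad

if __name__ == "__main__":
    for depth in (1, 2, 5, 10, 20):
        print(depth, input_gradient(depth))
```

With each added layer the gradient shrinks by a factor of at least four in this setup, so after a few dozen layers the earliest weights receive almost no usable error signal.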

Today, however, connectionism is resurgent. We’re learning deeper networks than ever before, and they’re setting new standards in vision, speech recognition, drug discovery, and other areas. The new field of deep learning is on the front page of the New York Times. Look under the hood, and… surprise: it’s the trusty old backprop engine, still humming. What changed? Nothing much, say the critics: just faster computers and bigger data. To which Hinton and others reply: exactly, we were right all along!

In truth, connectionists have made genuine progress. One of the protagonists of this latest twist in the connectionist roller coaster is an unassuming little device called an autoencoder. An autoencoder is a multilayer perceptron whose output is the same as its input. In goes a picture of your grandmother and out comes… the same picture of your grandmother. At first this seems like a silly idea: What use could such a contraption possibly be? The key is to make the hidden layer much smaller than the input and output layers, so the network can’t just learn to copy the input to the hidden layer and the hidden layer to the output, in which case we may as well throw the whole thing out. But if the hidden layer is small, something interesting happens: the network is forced to encode the input in fewer bits, so it can be represented in the hidden layer, and then decode those bits back to full size. It could, for example, learn to encode a million-pixel image of your grandmother as just the seven-character word grandma, or some such short code invented by itself, and simultaneously learn to decode “grandma” into an image of dear old granny. So an autoencoder is not unlike a file compression tool, with two important advantages: it figures out how to compress things on its own, and like Hopfield networks, it can turn a noisy, distorted image into a nice clean one.
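A toy version of the idea fits in a short Python sketch. Everything specific here is an assumption for illustration: four one-hot "images" of four pixels each, a hidden layer of two neurons as the bottleneck, sigmoid units, squared error, and the function name `train_autoencoder`. The only thing that makes it an autoencoder is that the desired output is the input itself.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_autoencoder(inputs, n_hidden=2, lr=1.0, epochs=3000, seed=0):
    """Illustrative bottleneck autoencoder trained by backprop (assumed settings)."""
    rng = random.Random(seed)
    n = len(inputs[0])
    # The last column of each weight row is the neuron's bias.
    enc = [[rng.uniform(-0.5, 0.5) for _ in range(n + 1)] for _ in range(n_hidden)]
    dec = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n)]
    history = []
    for _ in range(epochs):
        total = 0.0
        for x in inputs:
            xb = x + [1.0]
            code = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in enc]
            cb = code + [1.0]
            out = [sigmoid(sum(w * v for w, v in zip(row, cb))) for row in dec]
            # The target is the input itself.
            total += sum((o - t) ** 2 for o, t in zip(out, x)) / 2
            d_out = [(o - t) * o * (1 - o) for o, t in zip(out, x)]
            d_code = [sum(d_out[k] * dec[k][j] for k in range(n))
                      * code[j] * (1 - code[j]) for j in range(n_hidden)]
            for k in range(n):
                for j in range(n_hidden + 1):
                    dec[k][j] -= lr * d_out[k] * cb[j]
            for j in range(n_hidden):
                for i in range(n + 1):
                    enc[j][i] -= lr * d_code[j] * xb[i]
        history.append(total)
    return enc, dec, history

# Four tiny "images", each with a single pixel switched on.
PATTERNS = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]

if __name__ == "__main__":
    enc, dec, history = train_autoencoder(PATTERNS)
    print("reconstruction error before:", history[0], "after:", history[-1])
```

Four patterns can in principle be told apart with two hidden values, so the two-neuron code plays the role of the short word “grandma” in the example above. How cleanly the network finds such a code depends on the starting weights.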

Autoencoders were known in the 1980s, but they were very hard to learn, even though they had a single hidden layer. Figuring out how to pack a lot of information into the same few bits is a hellishly difficult problem (one code for your grandmother, a slightly different one for your grandfather, another one for Jennifer Aniston, etc.). The landscape in hyperspace is just too rugged to get to a good peak; the hidden units need to learn what amounts to too many exclusive-ORs of the inputs. So autoencoders didn’t really catch on. The trick that took over a decade to discover was to make the hidden layer larger than the input and output ones. Huh? Actually, that’s only half the trick: the other half is to force all but a few of the hidden units to be off at any given time. This still prevents the hidden layer from just copying the input, and, crucially, it makes learning much easier. If we allow different bits to represent different inputs, the inputs no longer have to compete to set the same bits. Also, the network now has many more parameters, so the hyperspace you’re in has many more dimensions, and you have many more ways to get out of what would otherwise be local maxima. This is called a sparse autoencoder, and it’s a neat trick.

We haven’t seen any deep learning yet, though. The next clever idea is to stack sparse autoencoders on top of each other like a club sandwich. The hidden layer of the first autoencoder becomes the input/output layer of the second one, and so on. Because the neurons are nonlinear, each hidden layer learns a more sophisticated representation of the input, building on the previous one. Given a large set of face images, the first autoencoder learns to encode local features like corners and spots, the second uses those to encode facial features like the tip of a nose or the iris of an eye, the third one learns whole noses and eyes, and so on. Finally, the top layer can be a conventional perceptron that learns to recognize your grandmother from the high-level features provided by the layer below it, which is much easier than using only the crude information provided by a single hidden layer or than trying to backpropagate through all the layers at once. The Google Brain network of New York Times fame is a nine-layer sandwich of autoencoders and other ingredients that learns to recognize cats from YouTube videos. At one billion connections, it was at the time the largest network ever learned. It’s no surprise that Andrew Ng, one of the project’s principals, is also one of the leading proponents of the idea that human intelligence boils down to a single algorithm, and all we need to do is figure it out. Ng, whose affability belies a fierce ambition, believes that stacked sparse autoencoders can take us closer to solving AI than anything that came before.

Stacked autoencoders are not the only kind of deep learner. Another is based on Boltzmann machines, and another (convolutional neural networks) on a model of the visual cortex. Despite their remarkable successes, however, all of these are still a far cry from the brain. The Google network can recognize cat faces seen head on; humans can recognize cats in any pose and even when the face is hard to make out. The Google network is still pretty shallow; only three of its nine layers are autoencoders. A multilayer perceptron is a passable model of the cerebellum, the part of the brain responsible for low-level motor control, but the cortex is another story. It’s missing the backward connections needed to propagate errors, for one, and yet it’s where the real learning wizardry resides. In his book On Intelligence, Jeff Hawkins advocated designing algorithms closely based on the organization of the cortex, but so far none of these algorithms can compete with today’s deep networks.

This may change as our understanding of the brain improves. Inspired by the human genome project, the new field of connectomics seeks to map every synapse in the brain. The European Union is investing a billion euros to build a soup-to-nuts model of it. America’s BRAIN initiative, with $100 million in funding in 2014 alone, has similar aims. Nevertheless, symbolists are very skeptical of this path to the Master Algorithm. Even if we can image the whole brain at the level of individual synapses, we (ironically) need better machine-learning algorithms to turn those images into wiring diagrams; doing it by hand is out of the question. Worse than that, even if we had a complete map of the brain, we would still be at a loss to figure out what it does. The nervous system of the C. elegans worm consists of only 302 neurons and was completely mapped in 1986, but we still have only a fragmentary understanding of what it does. We need higher-level concepts to make sense of the morass of low-level details, weeding out the ones that are specific to wetware or just quirks of evolution. We don’t build airplanes by reverse engineering feathers, and airplanes don’t flap their wings. Rather, airplane designs are based on the principles of aerodynamics, which all flying objects must obey. We still do not understand those analogous principles of thought.

Perhaps connectomics is overkill. Some connectionists have been overheard claiming that backprop is the Master Algorithm and we just need to scale it up. But symbolists pour scorn on this notion. They point to a long list of things that humans can do but neural networks can’t. Take commonsense reasoning. It involves combining pieces of information that may have never been seen together before. Did Mary eat a shoe for lunch? No, because Mary is a person, people only eat edible things, and shoes are not edible. Symbolic systems have no trouble with this (they just chain the relevant rules), but multilayer perceptrons can’t do it; once they’re done learning, they just compute the same fixed function over and over again. Neural networks are not compositional, and compositionality is a big part of human cognition. Another big issue is that humans, and symbolic models like sets of rules and decision trees, can explain their reasoning, while neural networks are big piles of numbers that no one can understand.

But if humans have all these abilities that their brains didn’t learn by tweaking synapses, where did they come from? Unless you believe in magic, the answer must be evolution. If you’re a connectionism skeptic and you have the courage of your convictions, it behooves you to figure out how evolution learned everything a baby knows at birth, and the more you think is innate, the taller the order. But if you can figure it out and program a computer to do it, it would be churlish to deny that you’ve invented at least one version of the Master Algorithm.
