Book: The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World

CHAPTER ONE: The Machine-Learning Revolution

We live in the age of algorithms. Only a generation or two ago, mentioning the word algorithm would have drawn a blank from most people. Today, algorithms are in every nook and cranny of civilization. They are woven into the fabric of everyday life. They’re not just in your cell phone or your laptop but in your car, your house, your appliances, and your toys. Your bank is a gigantic tangle of algorithms, with humans turning the knobs here and there. Algorithms schedule flights and then fly the airplanes. Algorithms run factories, trade and route goods, cash the proceeds, and keep records. If every algorithm suddenly stopped working, it would be the end of the world as we know it.

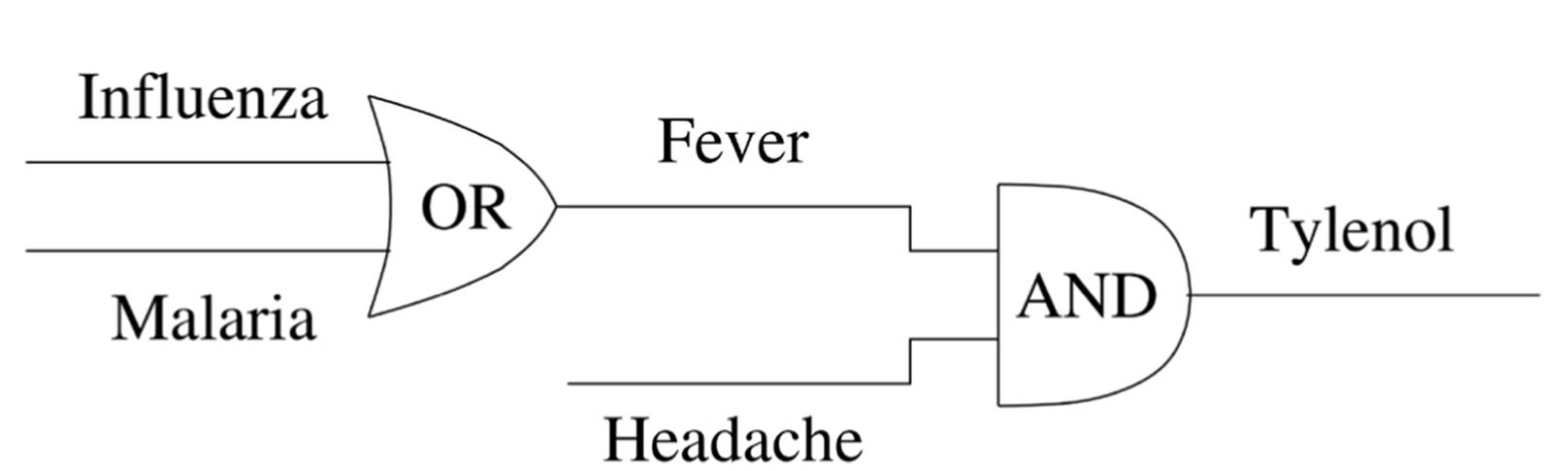

An algorithm is a sequence of instructions telling a computer what to do. Computers are made of billions of tiny switches called transistors, and algorithms turn those switches on and off billions of times per second. The simplest algorithm is: flip a switch. The state of one transistor is one bit of information: one if the transistor is on, and zero if it’s off. One bit somewhere in your bank’s computers says whether your account is overdrawn or not. Another bit somewhere in the Social Security Administration’s computers says whether you’re alive or dead. The second simplest algorithm is: combine two bits. Claude Shannon, better known as the father of information theory, was the first to realize that what transistors are doing, as they switch on and off in response to other transistors, is reasoning. (That was his master’s thesis at MIT, the most important master’s thesis of all time.) If transistor A turns on only when transistors B and C are both on, it’s doing a tiny piece of logical reasoning. If A turns on when either B or C is on, that’s another tiny logical operation. And if A turns on whenever B is off, and vice versa, that’s a third operation. Believe it or not, every algorithm, no matter how complex, can be reduced to just these three operations: AND, OR, and NOT. Simple algorithms can be represented by diagrams, using different symbols for the AND, OR, and NOT operations. For example, if a fever can be caused by influenza or malaria, and you should take Tylenol for a fever and a headache, this can be expressed as follows:
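One way to write the fever example down is as code rather than as a diagram. This is only a minimal sketch: the function names are illustrative and do not come from the text, and Python's `and`, `or`, and `not` stand in for the transistor-level operations.

```python
def AND(b, c):
    # On only when both inputs are on.
    return b and c

def OR(b, c):
    # On when either input is on.
    return b or c

def NOT(b):
    # On whenever the input is off, and vice versa.
    return not b

def has_fever(influenza, malaria):
    # A fever can be caused by influenza OR malaria.
    return OR(influenza, malaria)

def should_take_tylenol(influenza, malaria, headache):
    # Take Tylenol for a fever AND a headache.
    return AND(has_fever(influenza, malaria), headache)

if __name__ == "__main__":
    print(should_take_tylenol(influenza=True, malaria=False, headache=True))
```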

By combining many such operations, we can carry out very elaborate chains of logical reasoning. People often think computers are all about numbers, but they’re not. Computers are all about logic. Numbers and arithmetic are made of logic, and so is everything else in a computer. Want to add two numbers? There’s a combination of transistors that does that. Want to beat the human Jeopardy! champion? There’s a combination of transistors for that too (much bigger, naturally).
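To make "numbers and arithmetic are made of logic" concrete, here is a hedged sketch of addition built only from AND, OR, and NOT. The names (`full_adder`, `add`) and the 8-bit width are assumptions chosen for illustration; the shifts and masks are only bookkeeping for reading and writing individual bits, and real hardware wires the gates together directly.

```python
def AND(a, b):
    return a & b

def OR(a, b):
    return a | b

def NOT(a):
    return 1 - a  # inputs are single bits: 0 or 1

def XOR(a, b):
    # "One or the other but not both", built from the three basic operations.
    return AND(OR(a, b), NOT(AND(a, b)))

def full_adder(a, b, carry_in):
    # Adds three bits, returning (sum bit, carry bit).
    partial = XOR(a, b)
    total = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return total, carry_out

def add(x, y, width=8):
    # Ripple-carry addition of two non-negative integers, keeping `width` bits.
    result, carry = 0, 0
    for i in range(width):
        a = (x >> i) & 1  # bit i of x
        b = (y >> i) & 1  # bit i of y
        bit, carry = full_adder(a, b, carry)
        result |= bit << i
    return result

if __name__ == "__main__":
    print(add(2, 3))
```

Because only `width` bits are kept, results wrap around at 2**width, just as they would in a fixed-size hardware register.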

It would be prohibitively expensive, though, if we had to build a new computer for every different thing we want to do. Rather, a modern computer is a vast assembly of transistors that can do many different things, depending on which transistors are activated. Michelangelo said that all he did was see the statue inside the block of marble and carve away the excess stone until the statue was revealed. Likewise, an algorithm carves away the excess transistors in the computer until the intended function is revealed, whether it’s an airliner’s autopilot or a new Pixar movie.

An algorithm is not just any set of instructions: they have to be precise and unambiguous enough to be executed by a computer. For example, a cooking recipe is not an algorithm because it doesn’t exactly specify what order to do things in or exactly what each step is. Exactly how much sugar is a spoonful? As everyone who’s ever tried a new recipe knows, following it may result in something delicious or a mess. In contrast, an algorithm always produces the same result. Even if a recipe specifies precisely half an ounce of sugar, we’re still not out of the woods because the computer doesn’t know what sugar is, or an ounce. If we wanted to program a kitchen robot to make a cake, we would have to tell it how to recognize sugar from video, how to pick up a spoon, and so on. (We’re still working on that.) The computer has to know how to execute the algorithm all the way down to turning specific transistors on and off. So a cooking recipe is very far from an algorithm.

On the other hand, the following is an algorithm for playing tic-tac-toe:

If you or your opponent has two in a row, play on the remaining square.

Otherwise, if there’s a move that creates two lines of two in a row, play that.

Otherwise, if the center square is free, play there.

Otherwise, if your opponent has played in a corner, play in the opposite corner.

Otherwise, if there’s an empty corner, play there.

Otherwise, play on any empty square.

This algorithm has the nice property that it never loses! Of course, it’s still missing many details, like how the board is represented in the computer’s memory and how this representation is changed by a move. For example, we could have two bits for each square, with the value 00 if the square is empty, which changes to 01 if it has a naught and 10 if it has a cross. But it’s precise and unambiguous enough that any competent programmer could fill in the blanks. It also helps that we don’t really have to specify an algorithm ourselves all the way down to individual transistors; we can use preexisting algorithms as building blocks, and there’s a huge number of them to choose from.
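As one example of how a programmer might fill in the blanks, the sketch below transcribes the six rules literally, using the two-bits-per-square encoding just described. Everything not in the text is an assumption: the square numbering (0 to 8, row by row), the helper names, preferring your own winning move over a block in the first rule, and reading "two lines of two in a row" as two lines that each hold two of your marks and one empty square. It makes no attempt to verify the never-loses property.

```python
EMPTY, NAUGHT, CROSS = 0b00, 0b01, 0b10

LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),   # rows
         (0, 3, 6), (1, 4, 7), (2, 5, 8),   # columns
         (0, 4, 8), (2, 4, 6)]              # diagonals
OPPOSITE_CORNER = {0: 8, 2: 6, 6: 2, 8: 0}

def get(board, square):
    # Read the two bits that describe one square.
    return (board >> (2 * square)) & 0b11

def put(board, square, mark):
    # Return a new board with `mark` on `square` (assumed to be empty).
    return board | (mark << (2 * square))

def open_twos(board, mark):
    # Lines holding two of `mark` and one empty square, as (line, empty square).
    found = []
    for line in LINES:
        marks = [get(board, s) for s in line]
        if marks.count(mark) == 2 and marks.count(EMPTY) == 1:
            found.append((line, line[marks.index(EMPTY)]))
    return found

def choose_move(board, me):
    opponent = CROSS if me == NAUGHT else NAUGHT
    empties = [s for s in range(9) if get(board, s) == EMPTY]
    # Rule 1: complete your own two in a row, otherwise block the opponent's.
    for mark in (me, opponent):
        twos = open_twos(board, mark)
        if twos:
            return twos[0][1]
    # Rule 2: a move that creates two lines of two in a row.
    for square in empties:
        if len(open_twos(put(board, square, me), me)) >= 2:
            return square
    # Rule 3: the center.
    if 4 in empties:
        return 4
    # Rule 4: the corner opposite one the opponent has taken.
    for corner, opposite in OPPOSITE_CORNER.items():
        if get(board, corner) == opponent and opposite in empties:
            return opposite
    # Rule 5: any empty corner.
    for corner in OPPOSITE_CORNER:
        if corner in empties:
            return corner
    # Rule 6: any empty square (None if the board is full).
    return empties[0] if empties else None

if __name__ == "__main__":
    print(choose_move(0, CROSS))  # first move on an empty board
```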

Algorithms are an exacting standard. It’s often said that you don’t really understand something until you can express it as an algorithm. (As Richard Feynman said, “What I cannot create, I do not understand.”) Equations, the bread and butter of physicists and engineers, are really just a special kind of algorithm. For example, Newton’s second law, arguably the most important equation of all time, tells you to compute the net force on an object by multiplying its mass by its acceleration. It also tells you implicitly that the acceleration is the force divided by the mass, but making that explicit is itself an algorithmic step. In any area of science, if a theory cannot be expressed as an algorithm, it’s not entirely rigorous. (Not to mention you can’t use a computer to solve it, which really limits what you can do with it.) Scientists make theories, and engineers make devices. Computer scientists make algorithms, which are both theories and devices.
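Read as an algorithm, Newton's second law might look like the sketch below. The function names and the SI units are assumptions added for illustration; the second function is the "implicit" direction made explicit.

```python
def net_force(mass_kg, acceleration_m_s2):
    # F = m * a: multiply the mass by the acceleration.
    return mass_kg * acceleration_m_s2

def acceleration(force_n, mass_kg):
    # a = F / m: the same law, solved for the acceleration.
    if mass_kg == 0:
        raise ValueError("mass must be nonzero")
    return force_n / mass_kg

if __name__ == "__main__":
    print(net_force(2.0, 3.0), acceleration(6.0, 2.0))
```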

Designing an algorithm is not easy. Pitfalls abound, and nothing can be taken for granted. Some of your intuitions will turn out to have been wrong, and you’ll have to find another way. On top of designing the algorithm, you have to write it down in a language computers can understand, like Java or Python (at which point it’s called a program). Then you have to debug it: find every error and fix it until the computer runs your program without screwing up. But once you have a program that does what you want, you can really go to town. Computers will do your bidding millions of times, at ultrahigh speed, without complaint. Everyone in the world can use your creation. The cost can be zero, if you so choose, or enough to make you a billionaire, if the problem you solved is important enough. A programmer, someone who creates algorithms and codes them up, is a minor god, creating universes at will. You could even say that the God of Genesis himself is a programmer: language, not manipulation, is his tool of creation. Words become worlds. Today, sitting on the couch with your laptop, you too can be a god. Imagine a universe and make it real. The laws of physics are optional.

Over time, computer scientists build on each other’s work and invent algorithms for new things. Algorithms combine with other algorithms to use the results of other algorithms, in turn producing results for still more algorithms. Every second, billions of transistors in billions of computers switch billions of times. Algorithms form a new kind of ecosystem, ever growing and comparable in richness only to life itself.

Inevitably, however, there is a serpent in this Eden. It’s called the complexity monster. Like the Hydra, the complexity monster has many heads. One of them is space complexity: the number of bits of information an algorithm needs to store in the computer’s memory. If the algorithm needs more memory than the computer can provide, it’s useless and must be discarded. Then there’s the evil sister, time complexity: how long the algorithm takes to run, that is, how many steps of using and reusing the transistors it has to go through before it produces the desired results. If it’s longer than we can wait, the algorithm is again useless. But the scariest face of the complexity monster is human complexity. When algorithms become too intricate for our poor human brains to understand, when the interactions between different parts of the algorithm are too many and too involved, errors creep in, we can’t find them and fix them, and the algorithm doesn’t do what we want. Even if we somehow make it work, it winds up being needlessly complicated for the people using it and doesn’t play well with other algorithms, storing up trouble for later.

Every computer scientist does battle with the complexity monster every day. When computer scientists lose the battle, complexity seeps into our lives. You’ve probably noticed that many a battle has been lost. Nevertheless, we continue to build our tower of algorithms, with greater and greater difficulty. Each new generation of algorithms has to be built on top of the previous ones and has to deal with their complexities in addition to its own. The tower grows taller and taller, and it covers the whole world, but it’s also increasingly fragile, like a house of cards waiting to collapse. One tiny error in an algorithm and a billion-dollar rocket explodes, or the power goes out for millions. Algorithms interact in unexpected ways, and the stock market crashes.

If programmers are minor gods, the complexity monster is the devil himself. Little by little, it’s winning the war.

There has to be a better way.

Enter the learner

Every algorithm has an input and an output: the data goes into the computer, the algorithm does what it will with it, and out comes the result. Machine learning turns this around: in goes the data and the desired result and out comes the algorithm that turns one into the other. Learning algorithms, also known as learners, are algorithms that make other algorithms. With machine learning, computers write their own programs, so we don’t have to.
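A toy illustration of this inversion: the function below takes data together with the desired results and returns a new function, the learned program. The threshold-search technique, the names, and the body-temperature example are all assumptions made for this sketch and are not a method described in the text.

```python
def learn_threshold(examples):
    """Learn a one-rule program from (number, desired True/False result) pairs."""
    values = sorted({x for x, _ in examples})
    # Candidate cut points: below all values, between neighbors, above all values.
    candidates = ([values[0] - 1]
                  + [(a + b) / 2 for a, b in zip(values, values[1:])]
                  + [values[-1] + 1])
    best = None
    for cut in candidates:
        for above_is_true in (True, False):
            # Count the examples this candidate rule gets wrong.
            errors = sum(((x > cut) == above_is_true) != wanted
                         for x, wanted in examples)
            if best is None or errors < best[0]:
                best = (errors, cut, above_is_true)
    _, cut, above_is_true = best

    def learned_program(x):
        # The output of learning is itself an algorithm.
        return (x > cut) == above_is_true

    return learned_program

if __name__ == "__main__":
    # Hypothetical data: body temperature in Celsius and whether it counts as a fever.
    data = [(36.5, False), (37.0, False), (38.2, True), (39.0, True)]
    is_fever = learn_threshold(data)
    print(is_fever(38.0), is_fever(37.2))
```

Nobody typed the cut point into `learned_program`; it came from the examples, and different examples would yield a different program from the same learner.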

Wow.

Computers write their own programs. Now that’s a powerful idea, maybe even a little scary. If computers start to program themselves, how will we control them? Turns out we can control them quite well, as we’ll see. A more immediate objection is that perhaps this sounds too good to be true. Surely writing algorithms requires intelligence, creativity, problem-solving chops-things that computers just don’t have? How is machine learning distinguishable from magic? Indeed, as of today people can write many programs that computers can’t learn. But, more surprisingly, computers can learn programs that people can’t write. We know how to drive cars and decipher handwriting, but these skills are subconscious; we’re not able to explain to a computer how to do these things. If we give a learner a sufficient number of examples of each, however, it will happily figure out how to do them on its own, at which point we can turn it loose. That’s how the post office reads zip codes, and that’s why self-driving cars are on the way.

The power of machine learning is perhaps best explained by a low-tech analogy: farming. In an industrial society, goods are made in factories, which means that engineers have to figure out exactly how to assemble them from their parts, how to make those parts, and so on, all the way to raw materials. It’s a lot of work. Computers are the most complex goods ever invented, and designing them, the factories that make them, and the programs that run on them is a ton of work. But there’s another, much older way in which we can get some of the things we need: by letting nature make them. In farming, we plant the seeds, make sure they have enough water and nutrients, and reap the grown crops. Why can’t technology be more like this? It can, and that’s the promise of machine learning. Learning algorithms are the seeds, data is the soil, and the learned programs are the grown plants. The machine-learning expert is like a farmer, sowing the seeds, irrigating and fertilizing the soil, and keeping an eye on the health of the crop but otherwise staying out of the way.

Once we look at machine learning this way, two things immediately jump out. The first is that the more data we have, the more we can learn. No data? Nothing to learn. Big data? Lots to learn. That’s why machine learning has been turning up everywhere, driven by exponentially growing mountains of data. If machine learning was something you bought in the supermarket, its carton would say: “Just add data.”

The second thing is that machine learning is a sword with which to slay the complexity monster. Given enough data, a learning program that’s only a few hundred lines long can easily generate a program with millions of lines, and it can do this again and again for different problems. The reduction in complexity for the programmer is phenomenal. Of course, like the Hydra, the complexity monster sprouts new heads as soon as we cut off the old ones, but they start off smaller and take a while to grow, so we still get a big leg up.

We can think of machine learning as the inverse of programming, in the same way that the square root is the inverse of the square, or integration is the inverse of differentiation. Just as we can ask “What number squared gives 16?” or “What is the function whose derivative is x + 1?” we can ask, “What is the algorithm that produces this output?” We will soon see how to turn this insight into concrete learning algorithms.

Some learners learn knowledge, and some learn skills. “All humans are mortal” is a piece of knowledge. Riding a bicycle is a skill. In machine learning, knowledge is often in the form of statistical models, because most knowledge is statistical: all humans are mortal, but only 4 percent are Americans. Skills are often in the form of procedures: if the road curves left, turn the wheel left; if a deer jumps in front of you, slam on the brakes. (Unfortunately, as of this writing Google’s self-driving cars still confuse windblown plastic bags with deer.) Often, the procedures are quite simple, and it’s the knowledge at their core that’s complex. If you can tell which e-mails are spam, you know which ones to delete. If you can tell how good a board position in chess is, you know which move to make (the one that leads to the best position).

Machine learning takes many different forms and goes by many different names: pattern recognition, statistical modeling, data mining, knowledge discovery, predictive analytics, data science, adaptive systems, self-organizing systems, and more. Each of these is used by different communities and has different associations. Some have a long half-life, some less so. In this book I use the term machine learning to refer broadly to all of them.

Machine learning is sometimes confused with artificial intelligence (or AI for short). Technically, machine learning is a subfield of AI, but it’s grown so large and successful that it now eclipses its proud parent. The goal of AI is to teach computers to do what humans currently do better, and learning is arguably the most important of those things: without it, no computer can keep up with a human for long; with it, the rest follows.

In the information-processing ecosystem, learners are the superpredators. Databases, crawlers, indexers, and so on are the herbivores, patiently munging on endless fields of data. Statistical algorithms, online analytical processing, and so on are the predators. Herbivores are necessary, since without them the others couldn’t exist, but superpredators have a more exciting life. A crawler is like a cow, the web is its worldwide meadow, each page is a blade of grass. When the crawler is done munging, a copy of the web is sitting on its hard disks. An indexer then makes a list of the pages where each word appears, much like the index at the end of a book. Databases, like elephants, are big and heavy and never forget. Among these patient beasts dart statistical and analytical algorithms, compacting and selecting, turning data into information. Learners eat up this information, digest it, and turn it into knowledge.
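The indexer's job, listing the pages where each word appears, can be sketched in a few lines. The page names, the sample text, and the crude punctuation stripping are assumptions for illustration; a real indexer does far more.

```python
from collections import defaultdict

def build_index(pages):
    """pages maps a page name to its text; returns word -> sorted page names."""
    index = defaultdict(set)
    for name, text in pages.items():
        for raw in text.lower().split():
            word = raw.strip(".,;:!?\"'()")  # drop surrounding punctuation
            if word:
                index[word].add(name)
    return {word: sorted(names) for word, names in index.items()}

if __name__ == "__main__":
    pages = {"page-a": "Cows eat grass.", "page-b": "Grass is green"}
    print(build_index(pages)["grass"])
```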

Machine-learning experts (aka machine learners) are an elite priesthood even among computer scientists. Many computer scientists, particularly those of an older generation, don’t understand machine learning as well as they’d like to. This is because computer science has traditionally been all about thinking deterministically, but machine learning requires thinking statistically. If a rule for, say, labeling e-mails as spam is 99 percent accurate, that does not mean it’s buggy; it may be the best you can do and good enough to be useful. This difference in thinking is a large part of why Microsoft has had a lot more trouble catching up with Google than it did with Netscape. At the end of the day, a browser is just a standard piece of software, but a search engine requires a different mind-set.

The other reason machine learners are the über-geeks is that the world has far fewer of them than it needs, even by the already dire standards of computer science. According to tech guru Tim O’Reilly, “data scientist” is the hottest job title in Silicon Valley. The McKinsey Global Institute estimates that by 2018 the United States alone will need 140,000 to 190,000 more machine-learning experts than will be available, and 1.5 million more data-savvy managers. Machine learning’s applications have exploded too suddenly for education to keep up, and it has a reputation for being a difficult subject. Textbooks are liable to give you math indigestion. This difficulty is more apparent than real, however. All of the important ideas in machine learning can be expressed math-free. As you read this book, you may even find yourself inventing your own learning algorithms, with nary an equation in sight.

The Industrial Revolution automated manual work and the Information Revolution did the same for mental work, but machine learning automates automation itself. Without it, programmers become the bottleneck holding up progress. With it, the pace of progress picks up. If you’re a lazy and not-too-bright computer scientist, machine learning is the ideal occupation, because learning algorithms do all the work but let you take all the credit. On the other hand, learning algorithms could put us out of our jobs, which would only be poetic justice.

By taking automation to new heights, the machine-learning revolution will cause extensive economic and social changes, just as the Internet, the personal computer, the automobile, and the steam engine did in their time. One area where these changes are already apparent is business.

Why businesses embrace machine learning

Why is Google worth so much more than Yahoo? They both make their money from showing ads on the web, and they’re both top destinations. Both use auctions to sell ads and machine learning to predict how likely a user is to click on an ad (the higher the probability, the more valuable the ad). But Google’s learning algorithms are much better than Yahoo’s. This is not the only reason for the difference in their market caps, of course, but it’s a big one. Every predicted click that doesn’t happen is a wasted opportunity for the advertiser and lost revenue for the website. With Google’s annual revenue of $50 billion, every 1 percent improvement in click prediction potentially means another half billion dollars in the bank, every year, for the company. No wonder Google is a big fan of machine learning, and Yahoo and others are trying hard to catch up.
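The arithmetic behind the half-billion figure is short enough to spell out. This sketch assumes, as the word "potentially" suggests, that revenue scales in direct proportion to click-prediction quality; the function name is illustrative.

```python
def extra_revenue(annual_revenue, percent_improvement):
    # Assumes revenue rises in direct proportion to the improvement.
    return annual_revenue * percent_improvement / 100

if __name__ == "__main__":
    # $50 billion a year, 1 percent better click prediction.
    print(extra_revenue(50_000_000_000, 1))
```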

Web advertising is just one manifestation of a much larger phenomenon. In every market, producers and consumers need to connect before a transaction can happen. In pre-Internet days, the main obstacles to this were physical. You could only buy books from your local bookstore, and your local bookstore had limited shelf space. But when you can download any book to your e-reader any time, the problem becomes the overwhelming number of choices. How do you browse the shelves of a bookstore that has millions of titles for sale? The same goes for other information goods: videos, music, news, tweets, blogs, plain old web pages. It also goes for every product and service that can be procured remotely: shoes, flowers, gadgets, hotel rooms, tutoring, investments. It even applies to people looking for a job or a date. How do you find each other? This is the defining problem of the Information Age, and machine learning is a big part of the solution.

As companies grow, they go through three phases. First, they do everything manually: the owners of a mom-and-pop store personally know their customers, and they order, display, and recommend items accordingly. This is nice, but it doesn’t scale. In the second and least happy phase, the company grows large enough that it needs to use computers. In come the programmers, consultants, and database managers, and millions of lines of code get written to automate all the functions of the company that can be automated. Many more people are served, but not as well: decisions are made based on coarse demographic categories, and computer programs are too rigid to match humans’ infinite versatility.

After a point, there just aren’t enough programmers and consultants to do all that’s needed, and the company inevitably turns to machine learning. Amazon can’t neatly encode the tastes of all its customers in a computer program, and Facebook doesn’t know how to write a program that will choose the best updates to show to each of its users. Walmart sells millions of products and has billions of choices to make every day; if the programmers at Walmart tried to write a program to make all of them, they would never be done. Instead, what these companies do is turn learning algorithms loose on the mountains of data they’ve accumulated and let them divine what customers want.

Learning algorithms are the matchmakers: they find producers and consumers for each other, cutting through the information overload. If they’re smart enough, you get the best of both worlds: the vast choice and low cost of the large scale, with the personalized touch of the small. Learners are not perfect, and the last step of the decision is usually still for humans to make, but learners intelligently reduce the choices to something a human can manage.

In retrospect, we can see that the progression from computers to the Internet to machine learning was inevitable: computers enable the Internet, which creates a flood of data and the problem of limitless choice; and machine learning uses the flood of data to help solve the limitless choice problem. The Internet by itself is not enough to move demand from “one size fits all” to the long tail of infinite variety. Netflix may have one hundred thousand DVD titles in stock, but if customers don’t know how to find the ones they like, they will default to choosing the hits. It’s only when Netflix has a learning algorithm to figure out your tastes and recommend DVDs that the long tail really takes off.

Once the inevitable happens and learning algorithms become the middlemen, power becomes concentrated in them. Google’s algorithms largely determine what information you find, Amazon’s what products you buy, and Match.com’s who you date. The last mile is still yours (choosing from among the options the algorithms present you with), but 99.9 percent of the selection was done by them. The success or failure of a company now depends on how much the learners like its products, and the success of a whole economy (whether everyone gets the best products for their needs at the best price) depends on how good the learners are.

The best way for a company to ensure that learners like its products is to run them itself. Whoever has the best algorithms and the most data wins. A new type of network effect takes hold: whoever has the most customers accumulates the most data, learns the best models, wins the most new customers, and so on in a virtuous circle (or a vicious one, if you’re the competition). Switching from Google to Bing may be easier than switching from Windows to Mac, but in practice you don’t because Google, with its head start and larger market share, knows better what you want, even if Bing’s technology is just as good. And pity a new entrant into the search business, starting with zero data against engines with over a decade of learning behind them.

You might think that after a while more data is just more of the same, but that saturation point is nowhere in sight. The long tail keeps going. If you look at the recommendations Amazon or Netflix gives you, it’s clear they’re still very crude, and Google’s search results still leave a lot to be desired. Every feature of a product, every corner of a website can potentially be improved using machine learning. Should the link at the bottom of a page be red or blue? Try them both and see which one gets the most clicks. Better still, keep the learners running and continuously adjust all aspects of the website.
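The red-or-blue question, with the learner kept running, can be sketched as a simple explore-and-exploit loop. The technique used here is epsilon-greedy selection, which is this sketch's choice and not something the text names; the click rates, visitor count, and function name are likewise hypothetical, and visitors are simulated with a random number generator.

```python
import random

def run_link_experiment(true_click_rates, visitors=10_000, epsilon=0.1, seed=0):
    """Show one link color per visitor, favoring whichever has clicked best so far."""
    rng = random.Random(seed)
    colors = list(true_click_rates)
    shows = {color: 0 for color in colors}
    clicks = {color: 0 for color in colors}
    for _ in range(visitors):
        untried = not all(shows.values())
        if untried or rng.random() < epsilon:
            # Explore: keep trying every color now and then.
            color = rng.choice(colors)
        else:
            # Exploit: show the color with the best observed click rate.
            color = max(colors, key=lambda c: clicks[c] / shows[c])
        shows[color] += 1
        # Simulated visitor: clicks with the color's (hidden) true rate.
        if rng.random() < true_click_rates[color]:
            clicks[color] += 1
    return shows, clicks

if __name__ == "__main__":
    shows, clicks = run_link_experiment({"red": 0.02, "blue": 0.03})
    print(shows, clicks)
```

Unlike a one-off test, the loop never stops exploring, so it can notice if visitors' preferences drift.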

The same dynamic happens in any market where there’s lots of choice and lots of data. The race is on, and whoever learns fastest wins. It doesn’t stop with understanding customers better: companies can apply machine learning to every aspect of their operations, provided data is available, and data is pouring in from computers, communication devices, and ever-cheaper and more ubiquitous sensors. “Data is the new oil” is a popular refrain, and as with oil, refining it is big business. IBM, as well plugged into the corporate world as anyone, has organized its growth strategy around providing analytics to companies. Businesses look at data as a strategic asset: What data do I have that my competitors don’t? How can I take advantage of it? What data do my competitors have that I don’t?

In the same way that a bank without databases can’t compete with a bank that has them, a company without machine learning can’t keep up with one that uses it. While the first company’s experts write a thousand rules to predict what its customers want, the second company’s algorithms learn billions of rules, a whole set of them for each individual customer. It’s about as fair as spears against machine guns. Machine learning is a cool new technology, but that’s not why businesses embrace it. They embrace it because they have no choice.

Supercharging the scientific method

Machine learning is the scientific method on steroids. It follows the same process of generating, testing, and discarding or refining hypotheses. But while a scientist may spend his or her whole life coming up with and testing a few hundred hypotheses, a machine-learning system can do the same in a fraction of a second. Machine learning automates discovery. It’s no surprise, then, that it’s revolutionizing science as much as it’s revolutionizing business.

To make progress, every field of science needs to have data commensurate with the complexity of the phenomena it studies. This is why physics was the first science to take off: Tycho Brahe’s recordings of the positions of the planets and Galileo’s observations of pendulums and inclined planes were enough to infer Newton’s laws. It’s also why molecular biology, despite being younger than neuroscience, has outpaced it: DNA microarrays and high-throughput sequencing provide a volume of data that neuroscientists can only hope for. And it’s the reason why social science research is such an uphill battle: if all you have is a sample of a hundred people, with a dozen measurements apiece, all you can model is some very narrow phenomenon. But even this narrow phenomenon does not exist in isolation; it’s affected by a myriad others, which means you’re still far from understanding it.

The good news today is that sciences that were once data-poor are now data-rich. Instead of paying fifty bleary-eyed undergraduates to perform some task in the lab, psychologists can get as many subjects as they want by posting the task on Amazon’s Mechanical Turk. (It makes for a more diverse sample too.) It’s getting hard to remember, but little more than a decade ago sociologists studying social networks lamented that they couldn’t get their hands on a network with more than a few hundred members. Now there’s Facebook, with over a billion. A good chunk of those members post almost blow-by-blow accounts of their lives too; it’s like having a live feed of social life on planet Earth. In neuroscience, connectomics and functional magnetic resonance imaging have opened an extraordinarily detailed window into the brain. In molecular biology, databases of genes and proteins grow exponentially. Even in “older” sciences like physics and astronomy, progress continues because of the flood of data pouring forth from particle accelerators and digital sky surveys.

Big data is no use if you can’t turn it into knowledge, however, and there aren’t enough scientists in the world for the task. Edwin Hubble discovered new galaxies by poring over photographic plates, but you can bet the half-billion sky objects in the Sloan Digital Sky Survey weren’t identified that way. It would be like trying to count the grains of sand on a beach by hand. You can write rules to distinguish galaxies from stars from noise objects (such as birds, planes, Superman), but they’re not very accurate. Instead, the SKICAT (sky image cataloging and analysis tool) project used a learning algorithm. Starting from plates where objects were labeled with the correct categories, it figured out what characterizes each one and applied the result to all the unlabeled plates. Even better, it could classify objects that were too faint for humans to label, and these comprise the majority of the survey.
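The general pattern of learning from labeled objects and then applying the result to unlabeled ones can be sketched as follows. This is not SKICAT's actual method: the nearest-centroid classifier, the two made-up features (say, brightness and roundness), and the sample values are all assumptions for illustration.

```python
def learn_centroids(labeled):
    """labeled: list of (feature list, category). Returns category -> mean features."""
    sums, counts = {}, {}
    for features, category in labeled:
        totals = sums.setdefault(category, [0.0] * len(features))
        for i, value in enumerate(features):
            totals[i] += value
        counts[category] = counts.get(category, 0) + 1
    return {c: [t / counts[c] for t in totals] for c, totals in sums.items()}

def classify(centroids, features):
    # Assign the category whose average example is closest.
    def squared_distance(center):
        return sum((a - b) ** 2 for a, b in zip(features, center))
    return min(centroids, key=lambda c: squared_distance(centroids[c]))

if __name__ == "__main__":
    # Hypothetical labeled objects: [brightness, roundness].
    labeled = [([1.0, 0.9], "star"), ([1.2, 1.0], "star"),
               ([5.0, 0.3], "galaxy"), ([6.0, 0.2], "galaxy")]
    centroids = learn_centroids(labeled)
    print(classify(centroids, [5.5, 0.25]))
```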

With big data and machine learning, you can understand much more complex phenomena than before. In most fields, scientists have traditionally used only very limited kinds of models, like linear regression, where the curve you fit to the data is always a straight line. Unfortunately, most phenomena in the world are nonlinear. (Or fortunately, since otherwise life would be very boring; in fact, there would be no life.) Machine learning opens up a vast new world of nonlinear models. It’s like turning on the lights in a room where only a sliver of moonlight filtered before.
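A small sketch of what a straight line misses: the data below follow y = x², a made-up nonlinear example. A least-squares line fitted to x leaves a large error, while the same fitting routine applied to the transformed feature x² captures the pattern. The helper names are illustrative.

```python
def fit_line(xs, ys):
    # Ordinary least squares for y = intercept + slope * x.
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def squared_error(intercept, slope, xs, ys):
    # Sum of squared differences between predictions and observations.
    return sum((intercept + slope * x - y) ** 2 for x, y in zip(xs, ys))

xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x ** 2 for x in xs]

# Straight line in x: the best it can do is a flat line through the mean.
linear_error = squared_error(*fit_line(xs, ys), xs, ys)

# Same routine on the feature x**2: nonlinear in x, and it fits the curve.
squares = [x ** 2 for x in xs]
curved_error = squared_error(*fit_line(squares, ys), squares, ys)

if __name__ == "__main__":
    print(linear_error, curved_error)
```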

In biology, learning algorithms figure out where genes are located in a DNA molecule, where superfluous bits of RNA get spliced out before proteins are synthesized, how proteins fold into their characteristic shapes, and how different conditions affect the expression of different genes. Rather than testing thousands of new drugs in the lab, learners predict whether they will work, and only the most promising get tested. They also weed out molecules likely to have nasty side effects, like cancer. This avoids expensive failures, like candidate drugs being nixed only after human trials have begun.

The biggest challenge, however, is assembling all this information into a coherent whole. What are all the things that affect your risk of heart disease, and how do they interact? All Newton needed was three laws of motion and one of gravitation, but a complete model of a cell, an organism, or a society is more than any one human can discover. As knowledge grows, scientists specialize ever more narrowly, but no one is able to put the pieces together because there are far too many pieces. Scientists collaborate, but language is a very slow medium of communication. Scientists try to keep up with others’ research, but the volume of publications is so high that they fall farther and farther behind. Often, redoing an experiment is easier than finding the paper that reported it. Machine learning comes to the rescue, scouring the literature for relevant information, translating one area’s jargon into another’s, and even making connections that scientists weren’t aware of. Increasingly, machine learning acts as a giant hub, through which modeling techniques invented in one field make their way into others.

If computers hadn’t been invented, science would have ground to a halt in the second half of the twentieth century. This might not have been immediately apparent to the scientists because they would have been focused on whatever limited progress they could still make, but the ceiling for that progress would have been much, much lower. Similarly, without machine learning, many sciences would face diminishing returns in the decades to come.

To see the future of science, take a peek inside a lab at the Manchester Institute of Biotechnology, where a robot by the name of Adam is hard at work figuring out which genes encode which enzymes in yeast. Adam has a model of yeast metabolism and general knowledge of genes and proteins. It makes hypotheses, designs experiments to test them, physically carries them out, analyzes the results, and comes up with new hypotheses until it’s satisfied. Today, human scientists still independently check Adam’s conclusions before they believe them, but tomorrow they’ll leave it to robot scientists to check each other’s hypotheses.

A billion Bill Clintons

Machine learning was the kingmaker in the 2012 presidential election. The factors that usually decide presidential elections (the economy, the likability of the candidates, and so on) added up to a wash, and the outcome came down to a few key swing states. Mitt Romney’s campaign followed a conventional polling approach, grouping voters into broad categories and targeting each one or not. Neil Newhouse, Romney’s pollster, said that “if we can win independents in Ohio, we can win this race.” Romney won them by 7 percent but still lost the state and the election.

In contrast, President Obama hired Rayid Ghani, a machine-learning expert, as chief scientist of his campaign, and Ghani proceeded to put together the greatest analytics operation in the history of politics. They consolidated all voter information into a single database; combined it with what they could get from social networking, marketing, and other sources; and set about predicting four things for each individual voter: how likely he or she was to support Obama, show up at the polls, respond to the campaign’s reminders to do so, and change his or her mind about the election based on a conversation about a specific issue. Based on these voter models, every night the campaign ran 66,000 simulations of the election and used the results to direct its army of volunteers: whom to call, which doors to knock on, what to say.
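The idea of simulating an election from per-voter models can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the electorate, the probabilities, and the function names below are invented, and nothing here reflects how the campaign’s actual system was built. Each hypothetical voter carries two of the four predicted quantities, the probability of supporting the candidate and the probability of turning out, and the election is replayed many times to estimate the chance of winning.

```python
import random


def simulate_election(voters, rng):
    """Play out one election; True if the candidate gets more votes."""
    votes_for = votes_against = 0
    for p_support, p_turnout in voters:
        if rng.random() < p_turnout:        # does this voter show up?
            if rng.random() < p_support:    # and for whom?
                votes_for += 1
            else:
                votes_against += 1
    return votes_for > votes_against


def win_probability(voters, n_simulations, seed=0):
    """Fraction of simulated elections the candidate wins."""
    rng = random.Random(seed)
    wins = sum(simulate_election(voters, rng) for _ in range(n_simulations))
    return wins / n_simulations


# Hypothetical electorate of (P(support), P(turnout)) pairs:
# 40 likely supporters, 20 undecided voters, 40 likely opponents.
voters = [(0.9, 0.5)] * 40 + [(0.5, 0.6)] * 20 + [(0.1, 0.7)] * 40
baseline = win_probability(voters, 2000)

# What if volunteers raise turnout among likely supporters from 0.5 to 0.8?
mobilized = [(0.9, 0.8)] * 40 + [(0.5, 0.6)] * 20 + [(0.1, 0.7)] * 40
after_outreach = win_probability(mobilized, 2000)

print(f"win probability before outreach: {baseline:.2f}")
print(f"win probability after outreach:  {after_outreach:.2f}")
```

Comparing the two estimates is the point of the exercise: a simulation like this lets a campaign ask which intervention, aimed at which voters, moves the odds most.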

In politics, as in business and war, there is nothing worse than seeing your opponent make moves that you don’t understand and don’t know what to do about until it’s too late. That’s what happened to the Romney campaign. They could see the other side buying ads in particular cable stations in particular towns but couldn’t tell why; their crystal ball was too fuzzy. In the end, Obama won every battleground state save North Carolina and by larger margins than even the most accurate pollsters had predicted. The most accurate pollsters, in turn, were the ones (like Nate Silver) who used the most sophisticated prediction techniques; they were less accurate than the Obama campaign because they had fewer resources. But they were a lot more accurate than the traditional pundits, whose predictions were based on their expertise.

You might think the 2012 election was a fluke: most elections are not close enough for machine learning to be the deciding factor. But machine learning will cause more elections to be close in the future. In politics, as in everything, learning is an arms race. In the days of Karl Rove, a former direct marketer and data miner, the Republicans were ahead. By 2012, they’d fallen behind, but now they’re catching up again. We don’t know who’ll be ahead in the next election cycle, but both parties will be working hard to win. That means understanding the voters better and tailoring the candidates’ pitches (even choosing the candidates themselves) accordingly. The same applies to entire party platforms, during and between election cycles: if detailed voter models, based on hard data, say a party’s current platform is a losing one, the party will change it. As a result, major events aside, gaps between candidates in the polls will be smaller and shorter lived. Other things being equal, the candidates with the better voter models will win, and voters will be better served for it.

One of the greatest talents a politician can have is the ability to understand voters, individually or in small groups, and speak directly to them (or seem to). Bill Clinton is the paradigmatic example of this in recent memory. The effect of machine learning is like having a dedicated Bill Clinton for every voter. Each of these mini-Clintons is a far cry from the real one, but they have the advantage of numbers; even Bill Clinton can’t know what every single voter in America is thinking (although he’d surely like to). Learning algorithms are the ultimate retail politicians.

Of course, as with companies, politicians can put their machine-learned knowledge to bad uses as well as good ones. For example, they could make inconsistent promises to different voters. But voters, media, and watchdog organizations can do their own data mining and expose politicians who cross the line. The arms race is not just between candidates but among all participants in the democratic process.

The larger outcome is that democracy works better because the bandwidth of communication between voters and politicians increases enormously. In these days of high-speed Internet, the amount of information your elected representatives get from you is still decidedly nineteenth century: a hundred bits or so every two years, as much as fits on a ballot. This is supplemented by polling and perhaps the occasional e-mail or town-hall meeting, but that’s still precious little. Big data and machine learning change the equation. In the future, provided voter models are accurate, elected officials will be able to ask voters what they want a thousand times a day and act accordingly, without having to pester the actual flesh-and-blood citizens.

One if by land, two if by Internet

Out in cyberspace, learning algorithms man the nation’s ramparts. Every day, foreign attackers attempt to break into computers at the Pentagon, defense contractors, and other companies and government agencies. Their tactics change continually; what worked against yesterday’s attacks is powerless against today’s. Writing code to detect and block each one would be as effective as the Maginot Line, and the Pentagon’s Cyber Command knows it. But machine learning runs into a problem if an attack is the first of its kind and there aren’t any previous examples of it to learn from. Instead, learners build models of normal behavior, of which there’s plenty, and flag anomalies. Then they call in the cavalry (aka system administrators). If cyberwar ever comes to pass, the generals will be human, but the foot soldiers will be algorithms. Humans are too slow and too few and would be quickly swamped by an army of bots. We need our own bot army, and machine learning is like West Point for bots.
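Modeling normal behavior and flagging departures from it can be sketched very simply. The Python below is an illustration only: the login counts, the three-standard-deviation threshold, and the function names are assumptions, and a real intrusion-detection system would model far more than one number. The learner sees only examples of ordinary activity, summarizes them by their mean and spread, and raises a flag when a new observation falls far outside that range.

```python
from statistics import mean, stdev


def fit_normal_model(samples):
    """Summarize normal behavior by its mean and standard deviation."""
    return mean(samples), stdev(samples)


def is_anomaly(value, model, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = model
    return abs(value - mu) > threshold * sigma


# Hypothetical training data: logins per hour on an ordinary day.
# No examples of attacks are needed, only examples of normal traffic.
normal_logins_per_hour = [12, 15, 11, 14, 13, 16, 12, 14, 15, 13]
model = fit_normal_model(normal_logins_per_hour)

# New observations: two ordinary hours and one burst of activity.
observations = [14, 17, 250]
flags = [is_anomaly(v, model) for v in observations]

for value, flagged in zip(observations, flags):
    status = "call the administrators" if flagged else "normal"
    print(f"{value} logins/hour: {status}")
```

Because the model is learned from normal traffic alone, it can react to an attack it has never seen before, which is exactly what a list of known attack signatures cannot do.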

Cyberwar is an instance of asymmetric warfare, where one side can’t match the other’s conventional military power but can still inflict grievous damage. A handful of terrorists armed with little more than box cutters can knock down the Twin Towers and kill thousands of innocents. All the biggest threats to US security today are in the realm of asymmetric warfare, and there’s an effective weapon against all of them: information. If the enemy can’t hide, he can’t survive. The good news is that we have plenty of information, and that’s also the bad news.

The National Security Agency (NSA) has become infamous for its bottomless appetite for data: by one estimate, every day it intercepts over a billion phone calls and other communications around the globe. Privacy issues aside, however, it doesn’t have millions of staffers to eavesdrop on all these calls and e-mails or even just keep track of who’s talking to whom. The vast majority of calls are perfectly innocent, and writing a program to pick out the few suspicious ones is very hard. In the old days, the NSA used keyword matching, but that’s easy to get around. (Just call the bombing a “wedding” and the bomb the “wedding cake.”) In the twenty-first century, it’s a job for machine learning. Secrecy is the NSA’s trademark, but its director has testified to Congress that mining of phone logs has already halted dozens of terrorism threats.

Terrorists can hide in the crowd at a football game, but learners can pick out their faces. They can make exotic bombs, but learners can sniff them out. Learners can also do something more subtle: connect the dots between events that individually seem harmless but add up to an ominous pattern. This approach could have prevented 9/11. There’s a further twist: once a learned program is deployed, the bad guys change their behavior to defeat it. This contrasts with the natural world, which always works the same way. The solution is to marry machine learning with game theory, something I’ve worked on in the past: don’t just learn to defeat what your opponent does now; learn to parry what he might do against your learner. Factoring in the costs and benefits of different actions, as game theory does, can also help strike the right balance between privacy and security.

During the Battle of Britain, the Royal Air Force held back the Luftwaffe despite being heavily outnumbered. German pilots couldn’t understand how, wherever they went, they always ran into the RAF. The British had a secret weapon: radar, which detected the German planes well before they crossed into Britain’s airspace. Machine learning is like having a radar that sees into the future. Don’t just react to your adversary’s moves; predict them and preempt them.

An example of this closer to home is what’s known as predictive policing. By forecasting crime trends and strategically focusing patrols where they’re most likely to be needed, as well as taking other preventive measures, a city’s police force can effectively do the job of a much larger one. In many ways, law enforcement is similar to asymmetric warfare, and many of the same learning techniques apply, whether it’s in fraud detection, uncovering criminal networks, or plain old beat policing.

Machine learning also has a growing role on the battlefield. Learners can help dissipate the fog of war, sifting through reconnaissance imagery, processing after-action reports, and piecing together a picture of the situation for the commander. Learning powers the brains of military robots, helping them keep their bearings, adapt to the terrain, distinguish enemy vehicles from civilian ones, and home in on their targets. DARPA’s AlphaDog carries soldiers’ gear for them. Drones can fly autonomously with the help of learning algorithms; although they are still partly controlled by human pilots, the trend is for one pilot to oversee larger and larger swarms. In the army of the future, learners will greatly outnumber soldiers, saving countless lives.

Where are we headed?

Technology trends come and go all the time. What’s unusual about machine learning is that, through all these changes, through boom and bust, it just keeps growing. Its first big hit was in finance, predicting stock ups and downs, starting in the late 1980s. The next wave was mining corporate databases, which by the mid-1990s were starting to grow quite large, and in areas like direct marketing, customer relationship management, credit scoring, and fraud detection. Then came the web and e-commerce, where automated personalization quickly became de rigueur. When the dot-com bust temporarily curtailed that, the use of learning for web search and ad placement took off. For better or worse, the 9/11 attacks put machine learning in the front line of the war on terror. Web 2.0 brought a swath of new applications, from mining social networks to figuring out what bloggers are saying about your products. In parallel, scientists of all stripes were increasingly turning to large-scale modeling, with molecular biologists and astronomers leading the charge. The housing bust barely registered; its main effect was a welcome transfer of talent from Wall Street to Silicon Valley. In 2011, the “big data” meme hit, putting machine learning squarely in the center of the global economy’s future. Today, there seems to be hardly an area of human endeavor untouched by machine learning, including seemingly unlikely candidates like music, sports, and wine tasting.

As remarkable as this growth is, it’s only a foretaste of what’s to come. Despite its usefulness, the generation of learning algorithms currently at work in industry is, in fact, quite limited. When the algorithms now in the lab make it to the front lines, Bill Gates’s remark that a breakthrough in machine learning would be worth ten Microsofts will seem conservative. And if the ideas that really put a glimmer in researchers’ eyes bear fruit, machine learning will bring about not just a new era of civilization, but a new stage in the evolution of life on Earth.

What makes this possible? How do learning algorithms work? What can’t they currently do, and what will the next generation look like? How will the machine-learning revolution unfold? And what opportunities and dangers should you look out for? That’s what this book is about. Read on!
