Book: Mastering VMware® Infrastructure 3

iSCSI Network Storage

As a response to the needs of not-so-deep-pocketed network administrators, Internet Small Computer Systems Interface (iSCSI) has become a strong alternative to fibre channel. The popularity of iSCSI storage, which offers both lower cost and increasing speeds, will continue to grow as it finds its place in virtualized networks.

Understanding iSCSI Storage Networks

iSCSI storage provides block-level transfer of data using the SCSI communication protocol over a standard TCP/IP network. Because the transfer is block-level, as in a fibre channel solution, the storage device looks like a local device to the requesting host. With proper planning, an iSCSI SAN can perform nearly as well as a fibre channel SAN, or better. How well depends on other factors, which we'll dive into in a moment. Before we dive into the configuration of iSCSI with ESX, let's first look at the components involved in an iSCSI SAN. Although the goals and overall architecture of iSCSI are similar to fibre channel, the configuration details, the communication architecture, and the individual components differ profoundly.

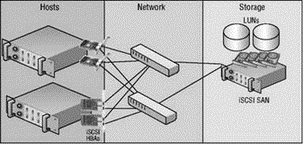

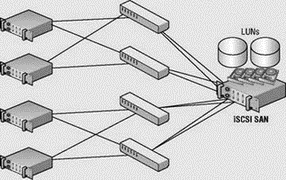

The components that make up an iSCSI SAN architecture, shown in Figure 4.19, include:

Hardware initiator A hardware device referred to as an iSCSI host bus adapter (HBA) that resides in an ESX Server host and initiates storage communication to the storage processor (SP) of the iSCSI storage device.

Software initiator A software-based storage driver initiator that does not require specific hardware and transmits over standard, supported Ethernet adapters.

Storage device The physical device that houses the disk subsystem upon which LUNs are built.

Logical unit number (LUN) A logical configuration of disk space created from one or more underlying physical disks. LUNs are most commonly created on multiple disks in a RAID configuration appropriate for the disk usage. LUN design considerations and methodologies will be covered later in this chapter.

Storage processor (SP) A communication device in the storage device that receives storage requests from storage area network nodes.

Challenge Handshake Authentication Protocol (CHAP) An authentication protocol used by the iSCSI initiator and target that involves validating a single set of credentials provided by any of the connecting ESX Server hosts.

Ethernet switches Standard hardware devices used for managing the flow of traffic between ESX Server nodes and the storage device.

iSCSI qualified name (IQN) The full name of an iSCSI node in the format of iqn.<year>-<month>.com.domain:alias. For example, iqn.1998-08.com.vmware:silo1-1 represents the registration of vmware.com on the Internet in August (08) of 1998. Nodes on an iSCSI deployment will have default IQNs that can be changed. However, changing an IQN requires a reboot of the ESX Server host.
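To make the IQN format concrete, here is a minimal Python sketch that splits a name into the parts described above. The function name `parse_iqn` and the regular expression are my own illustration, not part of ESX or any VMware tool, and the pattern is deliberately simpler than the full naming rules in the iSCSI specification.

```python
import re

# Pattern for the format described above:
#   iqn.<year>-<month>.<reversed domain>[:alias]
# This is an illustrative simplification, not the complete iSCSI naming grammar.
IQN_PATTERN = re.compile(
    r"^iqn\.(?P<year>\d{4})-(?P<month>0[1-9]|1[0-2])"
    r"\.(?P<authority>[a-z0-9][a-z0-9.\-]*)"
    r"(?::(?P<alias>.+))?$"
)


def parse_iqn(name):
    """Return the parts of an IQN as a dict, or None if the name does not match."""
    # The name is lowercased before matching (an assumption of this sketch).
    match = IQN_PATTERN.match(name.lower())
    if match is None:
        return None
    return match.groupdict()


# The example from the text: vmware.com, registered in August 1998.
print(parse_iqn("iqn.1998-08.com.vmware:silo1-1"))
```

A check like this can catch a mistyped name before you enter it on a host, which matters because changing an IQN later requires a reboot.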

iSCSI is thus a cheaper shared storage solution than fibre channel. Of course, the reduced cost comes at the expense of the better performance that fibre channel offers. Ultimately, the question comes down to that difference in performance, which in large part reflects the storage design and the disk intensity of the virtual machines stored on the iSCSI LUNs. Although this is true for fibre channel storage as well, it is less of a concern given the greater bandwidth available via a 4Gb fibre channel architecture. In either case, it is the duty of the ESX Server administrator and the SAN administrator to regularly monitor the saturation level of the storage network.

Figure 4.19 An iSCSI SAN includes an overall architecture similar to fibre channel, but the individual components differ in their communication mechanisms.

When deploying an iSCSI storage network, you'll find that adhering to the following rules can help mitigate performance degradation or security concerns:

- Always deploy iSCSI storage on a dedicated network.

- Configure all nodes on the storage network with static IP addresses.

- Configure the network adapters for full-duplex gigabit communication; autonegotiation is recommended.

- Avoid funneling storage requests from multiple servers into a single link between the network switch and the storage device.

Deploying a dedicated iSCSI storage network reduces network bandwidth contention between the storage traffic and other common network traffic types such as e-mail, Internet, and file transfer. A dedicated network also offers administrators the luxury of isolating the SCSI communication protocol from ‘‘prying eyes’’ that have no legitimate need to access the data on the storage device.

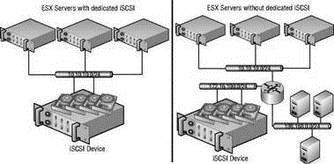

iSCSI storage deployments should always use dedicated storage networks to minimize contention and increase security. Achieving this goal is a matter of implementing a dedicated switch or switches to isolate the storage traffic from the rest of the network. Figure 4.20 shows the difference between a dedicated iSCSI network and one that is integrated with the other network segments.

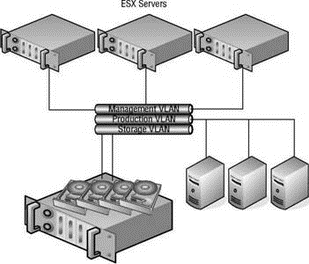

If a dedicated physical network is not possible, using a virtual local area network (VLAN) will segregate the traffic to ensure storage traffic security. Figure 4.21 shows iSCSI implemented over a VLAN to achieve better security. However, this type of configuration still forces the iSCSI communication to compete with other types of network traffic.

Figure 4.21 iSCSI can be implemented across VLANs to enhance security.

Figure 4.20 iSCSI should have a dedicated and isolated network.

Figure 4.21 iSCSI communication traffic can be isolated from other network traffic by using VLANs.

Real World Scenario

A Common iSCSI Network Infrastructure Mistake

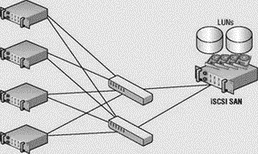

A common deployment error with iSCSI storage networks is the failure to provide enough connectivity between the Ethernet switches and the storage device to adequately handle the traffic requests from the ESX Server hosts. In the sample architecture shown here, four ESX Server hosts are configured with redundant connections to two Ethernet switches, which each have a connection to the iSCSI storage device. At first glance, it looks as if the infrastructure has been designed to support a redundant storage communication strategy. And perhaps it has. But what it has not done is maximize the efficiency of the storage traffic.

If each link between the ESX Server hosts and the Ethernet switches is a 1Gb link, there is a total storage bandwidth of 8Gb, or 4Gb per Ethernet switch. However, the connection between the Ethernet switches and the iSCSI storage device consists of a single 1Gb link per switch. If each host maximizes the throughput from host to switch, the bandwidth needs will exceed the capabilities of the switch-to-storage link and will force packets to be dropped. Since TCP is a reliable transmission protocol, the dropped packets will be re-sent as needed until they have reached their destination. All of the new data processing, coupled with the persistent retries of dropped packets, consumes more and more resources and strains the communication, resulting in a degradation of server performance.
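The arithmetic behind this bottleneck is easy to check. The following Python sketch compares worst-case host-side demand with switch-to-storage capacity, with every link speed expressed in gigabits per second. The function name and the eight-uplink figure in the second call are my own assumptions for illustration; the first call uses the numbers from the scenario.

```python
def oversubscription_ratio(hosts, links_per_host, host_link_gbps,
                           uplinks, uplink_gbps):
    """Ratio of worst-case host-side demand to switch-to-storage capacity."""
    host_side = hosts * links_per_host * host_link_gbps
    storage_side = uplinks * uplink_gbps
    return host_side / storage_side


# The scenario in the text: four hosts with two 1 Gb/s links each,
# and two switches with a single 1 Gb/s uplink each to the storage device.
print(oversubscription_ratio(4, 2, 1, 2, 1))  # 4.0

# Hypothetical remedy: four 1 Gb/s uplinks per switch (eight in total).
print(oversubscription_ratio(4, 2, 1, 8, 1))  # 1.0
```

A ratio above 1.0 means the hosts can collectively offer more traffic than the storage links can carry. Whether that matters in practice depends on how often the hosts actually peak at the same time.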

To protect against funneling too much data to the switch-to-storage link, the iSCSI storage network should be configured with multiple available links between the switches and the storage device. The image shown here represents an iSCSI storage network configuration that promotes redundancy and communication efficiency by increasing the available bandwidth between the switches and the storage device. This configuration will result in reduced resource usage as a result of less packet-dropping and less retrying.

To learn more about iSCSI, visit the Storage Networking Industry Association website at http://www.snia.org/tech_activities/ip_storage/iscsi.

Configuring ESX for iSCSI Storage

I can't go into the details of configuring the iSCSI storage side of things because each product has nuances that do not cross vendor boundaries, and companies don't typically carry an iSCSI SAN from each potential vendor. On the bright side, what I can and most certainly will cover in great detail is how to configure an ESX Server host to connect to an iSCSI storage device using both hardware and software iSCSI initiation.

As noted in the previous section, VMware is limited in its support for hardware device compatibility. As with fibre channel, you should always check VMware's website to review the latest SAN compatibility guide before purchasing any new storage devices. While software-initiated iSCSI has maintained full support since the release of ESX 3.0, hardware initiation with iSCSI devices did not garner full support until the ESX 3.0.1 release. The prior release, ESX 3.0, provided only experimental support for hardware-initiated iSCSI.

VMware iSCSI SAN Compatibility

Each of the manufacturers listed here provides an iSCSI storage solution that has been tested and approved for use by VMware:

- 3PAR: http://www.3par.com

- Compellent: http://www.compellent.com

- Dell: http://www.dell.com

- EMC: http://www.emc.com

- EqualLogic: http://www.equallogic.com

- Fujitsu Siemens: http://www.fujitsu-siemens.com

- HP: http://www.hp.com

- IBM: http://www.ibm.com

- LeftHand Networks: http://www.lefthandnetworks.com

- Network Appliance (NetApp): http://www.netapp.com

- Sun Microsystems: http://www.sun.com

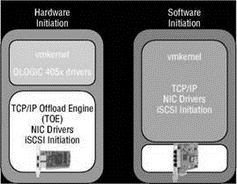

An ESX Server host can initiate communication with an iSCSI storage device by using a hardware device with dedicated iSCSI technology built into the device, or by using a software-based initiator that utilizes standard Ethernet hardware and is managed like normal network communication. Using a dedicated iSCSI HBA that understands the TCP/IP stack and the iSCSI communication protocol provides an advantage over software initiation. Hardware initiation eliminates some processing overhead in the Service Console and VMkernel by offloading the TCP/IP stack to the hardware device. This technology is often referred to as the TCP/IP Offload Engine (TOE). When you use an iSCSI HBA for hardware initiation, the VMkernel needs only the drivers for the HBA and the rest is handled by the device.

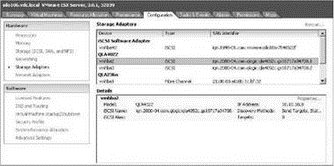

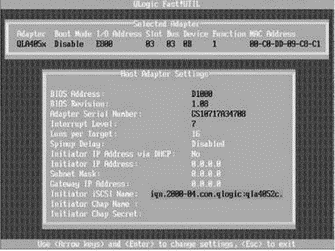

For best performance, hardware-based iSCSI initiation is the appropriate deployment. After you boot the server, the iSCSI HBA will display all its information in the Storage Adapters node of the Configuration tab, as shown in Figure 4.22. By default, as shown in Figure 4.23, an IQN is assigned in the BIOS of the iSCSI HBA. Configuring hardware iSCSI initiation with an HBA installed on the host is very similar to configuring a fibre channel HBA: the device will appear in the Storage Adapters node of the Configuration tab. The vmhba numbers for fibre channel and iSCSI HBAs are assigned sequentially. For example, if an ESX Server host includes two fibre channel HBAs labeled vmhba1 and vmhba2, adding two iSCSI HBAs will result in labels of vmhba3 and vmhba4. The software iSCSI adapter in ESX Server 3.5 will always be labeled vmhba32. You can then configure the adapter(s) to support the storage infrastructure.

Figure 4.22 Supported iSCSI HBA devices will automatically be found and can be configured in the BIOS of the ESX host.

Figure 4.23 The BIOS of the QLogic card provides an opportunity to configure the iSCSI HBA. If it's not configured in the BIOS, the defaults pass into the configuration display in the VI Client.

iSCSI Host bus adapters for ESX 3.0

At the time this book was written, the only supported iSCSI HBA was a specific set of QLogic cards in the 4050 series. Although a card may work, if it is not on the compatibility list, obtaining support from VMware will be challenging. As with other hardware situations in VI3, always check the VMware compatibility guide prior to purchasing or installing an iSCSI HBA.

To modify the setting of an iSCSI HBA, perform the following steps:

1. In the Storage Adapters node on the Configuration tab, select the appropriate iSCSI HBA (for example, vmhba2 or vmhba3) from the list and click the Properties link.

2. Click the Configure button.

3. For a custom iSCSI qualified name, enter a new iSCSI name and iSCSI alias in the respective text boxes.

4. If desired, enter the static IP address, subnet mask, default gateway, and DNS server for the iSCSI HBA.

5. Click OK. Do not click Close.

Once you've configured the iSCSI HBA with the appropriate IP information, you must configure it to accurately find the target available via iSCSI storage devices. ESX provides for the two types of target identification:

- Static discovery

- Dynamic discovery

As the names suggest, one method involves manual configuration of target information (static), while the other involves a less cumbersome, administratively easier means of finding storage (dynamic). The dynamic discovery method is also referred to as the SendTargets method because of the SendTargets request made by the ESX host. To dynamically discover the available storage targets, you must still manually configure the host with the IP address of at least one node on the storage device. Ironically, when configuring a host to perform a SendTargets request (dynamic discovery), you add that address on the Dynamic Discovery tab of the iSCSI Initiator Properties box, and all of the targets it returns then appear on the Static Discovery tab. Figure 4.24 details the SendTargets method of iSCSI LUN discovery.

The hardware-initiated iSCSI allows for either dynamic or static discovery of targets. The iSCSI software initiator built into ESX 3.0 only allows for the SendTargets discovery method.
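To give a feel for what a SendTargets request returns, here is a minimal Python sketch that groups the reply into targets and their portal addresses. It assumes the reply has already been split into `key=value` strings, with each `TargetName` followed by one or more `TargetAddress` entries, and that addresses are IPv4 or hostnames. The target names, the second address, and the function name `parse_sendtargets` are hypothetical and chosen only for illustration.

```python
DEFAULT_ISCSI_PORT = 3260  # the default port mentioned in the steps


def parse_sendtargets(pairs):
    """Group TargetAddress entries under the TargetName that precedes them."""
    targets = {}
    current = None
    for pair in pairs:
        key, _, value = pair.partition("=")
        if key == "TargetName":
            current = value
            targets.setdefault(current, [])
        elif key == "TargetAddress" and current is not None:
            # Address form assumed here: host[:port][,portal-group-tag]
            address, _, group_tag = value.partition(",")
            if ":" in address:
                host, _, port = address.rpartition(":")
                port = int(port)
            else:
                host, port = address, DEFAULT_ISCSI_PORT
            targets[current].append((host, port, group_tag))
    return targets


# Hypothetical reply from a storage device reachable at 172.28.0.122.
reply = [
    "TargetName=iqn.2001-04.com.example:array1.lun0",
    "TargetAddress=172.28.0.122:3260,1",
    "TargetAddress=172.28.0.123:3260,1",
    "TargetName=iqn.2001-04.com.example:array1.lun1",
    "TargetAddress=172.28.0.122,1",
]
for name, portals in parse_sendtargets(reply).items():
    print(name, portals)
```

This is why a single manually entered address is enough: the reply itself lists every target the device is willing to present, along with the addresses at which each can be reached.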

To configure the iSCSI HBA for target discovery using the SendTargets method, perform the following steps:

1. In the iSCSI Initiator Properties dialog box, select the Dynamic Discovery tab and click the Add button.

2. Enter the IP address of the iSCSI device and the port number (if it has been changed from the default port of 3260).

3. Click Close.

4. Click the Rescan link.

5. Review the Static Discovery tab of the iSCSI HBA properties.

Figure 4.24 The SendTargets iSCSI LUN discovery method requires that you manually configure at least one iSCSI target to issue a SendTargets request. The iSCSI device will then return information about all the targets available.

In this section I've hinted, or, better yet, blatantly stated, that iSCSI storage networks should be isolated from the other IP networks already configured in your infrastructure. However, this is not always a possibility due to such factors as budget constraints, IP addressing challenges, host limitations, and more. If you cannot isolate the iSCSI storage network, you can configure the storage device and the ESX nodes to use the Challenge Handshake Authentication Protocol (CHAP). CHAP provides a secure means of authenticating a user account without the need for exchanging the password over publicly accessible networks.
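The reason CHAP never sends the password across the wire is that only a hash travels over the network. The Python sketch below shows the exchange as defined for CHAP with MD5: one side issues a random challenge, and the other returns an MD5 digest of the identifier, the shared secret, and that challenge. The secret value and the function names are hypothetical, and this is only a model of the calculation, not of how ESX or any storage device implements it.

```python
import hashlib
import hmac
import os


def chap_response(identifier, secret, challenge):
    """CHAP with MD5: digest of the identifier byte, the secret, and the challenge."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def chap_verify(identifier, secret, challenge, response):
    """Recompute the expected digest and compare it in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# Hypothetical shared secret, configured on both the host and the storage device.
secret = b"example-chap-secret"

# The authenticating side picks an identifier and a random challenge.
identifier = 1
challenge = os.urandom(16)

# The other side answers with a digest; the secret itself is never transmitted.
response = chap_response(identifier, secret, challenge)

print(chap_verify(identifier, secret, challenge, response))          # True
print(chap_verify(identifier, b"wrong-secret", challenge, response)) # False
```

Because the challenge changes on every attempt, a captured response cannot simply be replayed later. The strength of the scheme still rests on the secret, which is why the steps that follow call for a strong one.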

To configure an iSCSI HBA to authenticate using CHAP, follow these steps:

1. From the Storage Adapters node on the Configuration page, select the iSCSI HBA to be configured and click the Properties link.

2. Select the CHAP Authentication tab and click Configure.

3. Insert a custom name in the CHAP Name text box or select the Use Initiator Name checkbox.

4. Type a strong and secure string in the CHAP Secret text box.

5. Click OK.

6. Click Close.

Software-initiated iSCSI is a cheaper solution than the iSCSI HBA hardware initiation because it does not require any special hardware. Software-based iSCSI initiation, as the name suggests, begins in the VMkernel and utilizes a normal Ethernet adapter installed on the ESX Server host. Unlike the iSCSI HBA solution, software initiation relies on a set of drivers and a TCP/IP stack that resides in the VMkernel. In addition, the iSCSI software initiator, of which there is only one, uses the name vmhba32 as opposed to being enumerated with the rest of the HBAs within a host. Figure 4.25 outlines the architectural differences between the hardware and software initiation mechanisms on ESX Server.

Figure 4.25 ESX Server supports using an iSCSI HBA hardware-based initiation, which reduces overhead on the VMkernel. For a cheaper solution, ESX Server also supports a software-based initiation that does not require specialized hardware.

Using the iSCSI software initiation built into ESX Server provides an easy means of configuring the host to communicate with the iSCSI storage device. The iSCSI software initiator uses the SendTargets method for obtaining information about target devices. The SendTargets request requires the manual entry of one of the IP addresses on the storage device. The software initiator will then query the provided IP address in search of all additional targets.

To enable iSCSI software initiation on an ESX Server, perform the following steps:

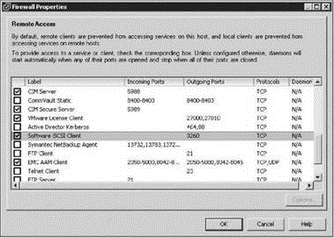

1. Enable the Software iSCSI Client in the firewall of the ESX Server host, as shown in Figure 4.26:

- On the Configuration tab of the ESX host, click the Security Profile link.

- Click the Properties link.

- Enable the Software iSCSI Client checkbox.

Alternatively, open an SSH session with root privileges and type the following command:

esxcfg-firewall -e swISCSIClient

Figure 4.26 The iSCSI software must be enabled in the Service Console firewall.

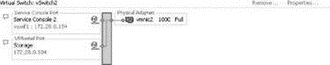

2. Create a virtual switch with a VMkernel port and a Service Console (vswif) port. Bind the virtual switch to a physical network adapter connected to the dedicated storage network. Figure 4.27 shows a correctly configured switch for use in connecting to an iSCSI storage device.

Creating a VMkernel Port from a Command Line

Log on to an ESX host using an SSH session and, if necessary, elevate the permissions using su -. Follow these steps:

1. Add a new port group named Storage to the virtual switch on the dedicated storage network:

esxcfg-vswitch -A Storage vSwitch2

2. Configure the VMkernel NIC with an IP address of 172.28.0.106 and a subnet mask of 255.255.255.0:

esxcfg-vmknic -a -i 172.28.0.106 -n 255.255.255.0 Storage

3. Set the default gateway of the VMkernel to 172.28.0.1:

esxcfg-route 172.28.0.1
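The addresses in these steps only work together if the VMkernel IP address and the default gateway sit on the same subnet. As a quick sanity check before typing the commands, a short Python sketch such as the following can confirm that. It is meant to run on an administrative workstation rather than on the ESX host, and the function name is my own.

```python
import ipaddress


def gateway_on_same_subnet(ip, netmask, gateway):
    """Return True if the gateway falls inside the subnet defined by ip and netmask."""
    network = ipaddress.ip_network(f"{ip}/{netmask}", strict=False)
    return ipaddress.ip_address(gateway) in network


# Values from the steps above.
print(gateway_on_same_subnet("172.28.0.106", "255.255.255.0", "172.28.0.1"))  # True

# A gateway on a different subnet would not be directly reachable.
print(gateway_on_same_subnet("172.28.0.106", "255.255.255.0", "172.28.1.1"))  # False
```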

Figure 4.27 The VMkernel and Service Console must be able to communicate with the iSCSI storage device.

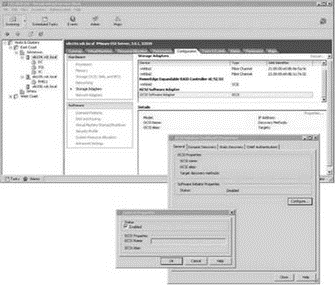

3. From the Storage Adapters node on the Configuration tab, shown in Figure 4.28, enable the iSCSI initiator. Alternatively, open an SSH session with root privileges and type the following command:

esxcfg-swiscsi -e

Figure 4.28 Enabling the iSCSI will automatically populate the iSCSI name and alias for the software initiator.

4. Select the vmhba40 option (vmhba32 on ESX Server 3.5) beneath the iSCSI Software Adapter and click the Properties link.

5. Select the Dynamic Discovery tab and click the Add button.

6. Enter the IP address of the iSCSI device and the port number (if it has been changed from the default port of 3260).

7. Click OK. Click Close.

8. Select the Rescan link from the Storage Adapters node on the Configuration tab.

9. Click OK to scan for both new storage devices and new VMFS volumes.

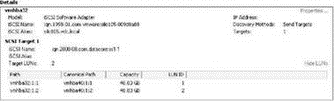

10. As shown in Figure 4.29, any available iSCSI LUNs will now be reflected in the Details section of the vmhba40 option.

Figure 4.29 After configuring the iSCSI software adapter with the IP address of the iSCSI storage target, a rescan will identify the LUNs on the storage device that have been made available to the ESX host.

The vmkiscsi-tool Command

The vmkiscsi-tool [options] vmhba## command allows command-line management of the iSCSI software initiator. The options for this command-line tool include:

- -I is used with -l or -a to display or add the iSCSI name.

- -k is used with -l or -a to display or add the iSCSI alias.

- -D is used with -a to perform discovery of a specified target device.

- -T is used with -l to list found targets.

Review the following examples:

- To view the iSCSI name of the software initiator:

vmkiscsi-tool -I -l vmhba40

- To view the iSCSI alias of the software initiator:

vmkiscsi-tool -k -l vmhba40

- To discover additional iSCSI targets at 172.28.0.122:

vmkiscsi-tool -D -a 172.28.0.122 vmhba40

- To list found targets:

vmkiscsi-tool -T -l vmhba40
