Book: Mastering VMware® Infrastructure 3

Understanding VI3 Storage Options

VMware Infrastructure 3 (VI3) offers several options for deploying a storage configuration as the back end to an ESX Server implementation. These storage locations can hold virtual machines, ISO images, or templates for server provisioning. An ESX Server host can have one or more of the following storage options available to it:

- Fibre Channel storage
- iSCSI software-initiated storage
- iSCSI hardware-initiated storage
- Network Attached Storage (NAS)
- Local storage

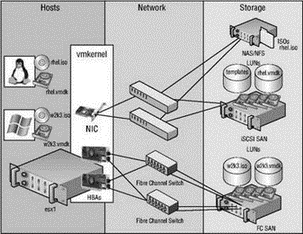

ESX Server can take advantage of multiple storage architectures within the same host or even for the same virtual machine. ESX Server uses a proprietary file system, the Virtual Machine File System (VMFS), a clustered file system that lets multiple ESX hosts access the same volume concurrently and simplifies storing and managing virtual machine files. The virtual machines hosted by an ESX Server can have their virtual machine disk files (.vmdk) and mounted CD/DVD-ROM images (.iso) stored in different locations on different storage devices. Figure 4.1 shows an ESX Server host with multiple storage architectures. A Windows virtual machine has its virtual machine disk and CD-ROM image file stored on two different Fibre Channel storage area network (SAN) logical unit numbers (LUNs) on the same storage device. At the same time, a Linux virtual machine stores its virtual disk files on an iSCSI SAN LUN and its CD-ROM images on a NAS device.
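To make the Figure 4.1 layout concrete, the following Python sketch models the two virtual machines and groups each one's files by the storage technology that holds them. The datastore, VM, and file names are hypothetical; the only ESX convention assumed is that every datastore, including an NFS datastore, appears under /vmfs/volumes/<datastore>.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical datastore names mapped to the storage types shown in Figure 4.1.
DATASTORES = {
    "FC_LUN1": "Fibre Channel",
    "FC_LUN2": "Fibre Channel",
    "iSCSI_LUN1": "iSCSI",
    "NAS_ISO": "NAS/NFS",
}


@dataclass
class VirtualMachine:
    name: str
    files: list  # datastore paths such as /vmfs/volumes/<datastore>/<file>


def datastore_of(path: str) -> str:
    """Extract <datastore> from a /vmfs/volumes/<datastore>/... path."""
    parts = path.split("/")  # ['', 'vmfs', 'volumes', '<datastore>', ...]
    if len(parts) < 5 or parts[1:3] != ["vmfs", "volumes"]:
        raise ValueError(f"not a datastore path: {path}")
    return parts[3]


def files_by_storage_type(vm: VirtualMachine) -> dict:
    """Group a VM's files by the storage technology that holds them."""
    grouped = defaultdict(list)
    for path in vm.files:
        grouped[DATASTORES[datastore_of(path)]].append(path)
    return dict(grouped)


# Hypothetical VMs laid out as in Figure 4.1.
windows_vm = VirtualMachine("win01", [
    "/vmfs/volumes/FC_LUN1/win01/win01.vmdk",
    "/vmfs/volumes/FC_LUN2/iso/windows.iso",
])
linux_vm = VirtualMachine("linux01", [
    "/vmfs/volumes/iSCSI_LUN1/linux01/linux01.vmdk",
    "/vmfs/volumes/NAS_ISO/iso/linux.iso",
])

for vm in (windows_vm, linux_vm):
    for storage_type, paths in files_by_storage_type(vm).items():
        print(f"{vm.name}: {storage_type}: {', '.join(paths)}")
```

The Windows VM resolves entirely to Fibre Channel (two LUNs), while the Linux VM spans iSCSI and NAS, matching the figure.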

Figure 4.1 An ESX Server host can be configured with multiple storage options for hosting files used by virtual machines, ISO images, or templates.

The Role of Local Storage

During installation, an ESX Server host is configured with a local VMFS datastore, named Storage1 by default. This local storage has limited value, however: because no other ESX host can access it, virtual machines stored on it cannot use VMotion, DRS, or HA. Use it only for non-mission-critical virtual machines, or for templates and ISO images that other ESX hosts do not need.

For this reason, when you size a new ESX host, there is little point in spending time and money on large storage pools connected to the host's internal controllers. Large local RAID 5, RAID 1+0, or RAID 0+1 volumes are unnecessary for ESX hosts. To gain the full benefits of virtualization, virtual machine disk files must reside on a shared storage device that multiple ESX hosts can access. Direct your budget and administrative attention to memory and CPU sizing, or even to network adapters, rather than to locally attached hard drives.
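The shared-storage requirement can be expressed as a simple check. This Python sketch, using hypothetical host and datastore names, treats a VM as a VMotion candidate only when every datastore holding its files is shared and visible to both the source and target hosts. It models only the storage side; VMotion has other prerequisites, such as CPU compatibility and a VMotion network, that are not modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class Datastore:
    name: str
    shared: bool  # False for a host's local VMFS volume, such as Storage1
    hosts: set = field(default_factory=set)  # ESX hosts that can see it


def can_migrate(vm_datastores, datastores, source, target):
    """Return (ok, blockers) for the storage side of a VMotion migration.

    ok is True only if every datastore holding the VM's files is shared
    storage visible to both the source and the target host.
    """
    blockers = []
    for name in vm_datastores:
        ds = datastores[name]
        if not ds.shared:
            blockers.append(f"{name}: local storage")
        elif not {source, target} <= ds.hosts:
            blockers.append(f"{name}: not visible to both hosts")
    return (not blockers, blockers)


# Hypothetical inventory: one local datastore and two SAN LUNs.
datastores = {
    "Storage1": Datastore("Storage1", shared=False, hosts={"esx01"}),
    "SAN_LUN1": Datastore("SAN_LUN1", shared=True, hosts={"esx01", "esx02"}),
    "SAN_LUN2": Datastore("SAN_LUN2", shared=True, hosts={"esx01"}),
}

print(can_migrate(["SAN_LUN1"], datastores, "esx01", "esx02"))
# (True, [])
print(can_migrate(["SAN_LUN1", "Storage1"], datastores, "esx01", "esx02"))
# (False, ['Storage1: local storage'])
```

The second case shows why large local volumes do not pay off: a single file left on Storage1 is enough to pin the VM to its host.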

The purpose of this chapter is to answer all your questions about deploying, configuring, and managing a back-end storage solution for your virtualized environment. Every implementation differs, so a storage architecture that is the right solution in one scenario may be the wrong one in another. As you'll see, each of the storage solutions available to VI3 has its own set of advantages and disadvantages.

Choosing the right storage technology for your infrastructure begins with a strong understanding of each technology and an intimate knowledge of the systems that will be virtualized as part of the VI3 deployment. Table 4.1 outlines the features of the three shared storage technologies.

Table 4.1: Features of Shared Storage Technologies

| Feature | Fibre Channel | iSCSI | NAS/NFS |
|---|---|---|---|
| Ability to format VMFS | Yes | Yes | No |
| Ability to hold VM files | Yes | Yes | Yes |
| Ability to boot ESX | Yes | Yes | No |
| VMotion, DRS, HA | Yes | Yes | Yes |
| Microsoft clustering | Yes | No | No |
| VCB | Yes | Yes | No |
| Templates, ISOs | Yes | Yes | Yes |
| Raw device mapping | Yes | Yes | No |
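When several requirements must be met at once, Table 4.1 can be read as a lookup matrix. This Python sketch encodes the table as printed here (reflecting VI3 at the time of writing) and returns the shared storage technologies that support every required feature; the function name `candidates` is hypothetical.

```python
# Table 4.1 as (Fibre Channel, iSCSI, NAS/NFS) support flags.
TECHNOLOGIES = ("Fibre Channel", "iSCSI", "NAS/NFS")
FEATURES = {
    "Ability to format VMFS": (True, True, False),
    "Ability to hold VM files": (True, True, True),
    "Ability to boot ESX": (True, True, False),
    "VMotion, DRS, HA": (True, True, True),
    "Microsoft clustering": (True, False, False),
    "VCB": (True, True, False),
    "Templates, ISOs": (True, True, True),
    "Raw device mapping": (True, True, False),
}


def candidates(required):
    """Return the technologies in Table 4.1 that support all required features."""
    unknown = [f for f in required if f not in FEATURES]
    if unknown:
        raise KeyError(f"not in Table 4.1: {unknown}")
    return [
        tech
        for i, tech in enumerate(TECHNOLOGIES)
        if all(FEATURES[f][i] for f in required)
    ]


print(candidates(["VMotion, DRS, HA", "Raw device mapping"]))
# ['Fibre Channel', 'iSCSI']
print(candidates(["Microsoft clustering"]))
# ['Fibre Channel']
```

Each added requirement can only narrow the result, so listing the features your most demanding virtual machines need quickly shows which technologies remain viable.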

Microsoft Cluster Service

As of the writing of this book, VMware had not yet approved support for building Microsoft server clusters with virtual machines running on ESX Server 3.5. Versions prior to 3.5 supported it, and support is expected to continue once VMware has finished testing server clusters on the latest version.

Once you have mastered the differences among the various architectures and identified the features of each that are most relevant to your data and virtual machines, you can feel confident in your decision. Equipped with the right information, you will be able to identify a solid storage platform on which your virtual infrastructure will be scalable, efficient, and secure.

ESX Server identifies storage adapters automatically during the boot process, and you can configure them through the VI Client or with a set of command-line tools. The storage adapters in your server must be supported by VMware, so check the VI3 I/O Compatibility Guide before adding any new hardware to your server. You can find this guide on VMware's website (http://www.vmware.com/pdf/vi3_io_guide.pdf).

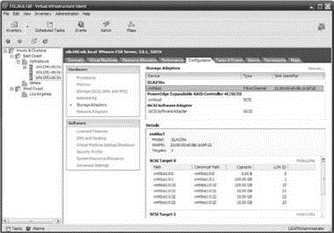

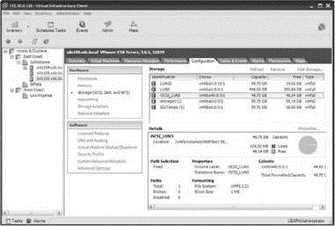

In the following sections, we'll cover both sets of tools, command line and GUI, and explore how to use them to create and manage the various storage types. Figure 4.2 and Figure 4.3 show the Virtual Infrastructure (VI) Client's configuration options for storage adapters and storage, respectively.

Figure 4.2 ESX Server detects storage adapters automatically. Use only adapters certified to work with VMware products, and consult the appropriate guides before adding new hardware.

Figure 4.3 The VI Client provides an easy-to-use interface for adding new datastores located on Fibre Channel, iSCSI, or NAS storage devices.
