Book: Distributed Operating Systems

4.5.1. Component Faults

Computer systems can fail due to a fault in some component, such as a processor, memory, I/O device, cable, or software. A fault is a malfunction, possibly caused by a design error, a manufacturing error, a programming error, physical damage, deterioration in the course of time, harsh environmental conditions (it snowed on the computer), unexpected inputs, operator error, rodents eating part of it, and many other causes. Not all faults lead (immediately) to system failures, but some do.

Faults are generally classified as transient, intermittent, or permanent. Transient faults occur once and then disappear. If the operation is repeated, the fault goes away. A bird flying through the beam of a microwave transmitter may cause lost bits on some network (not to mention a roasted bird). If the transmission times out and is retried, it will probably work the second time.
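The retry idea can be illustrated with a minimal Python sketch. Everything in it is hypothetical and only for illustration: `make_transient_sender` simulates a link that loses a fixed number of transmissions before working, and `send_with_retry` repeats the operation when it times out. A real system would also have to consider whether the operation is safe to repeat.

```python
def make_transient_sender(failures_before_success):
    """Return a hypothetical send() that times out a fixed number of times, then works."""
    remaining = failures_before_success

    def send(message):
        nonlocal remaining
        if remaining > 0:
            remaining -= 1
            # Simulated transient fault: the transmission is lost.
            raise TimeoutError("simulated lost transmission")
        return f"ack:{message}"

    return send


def send_with_retry(send, message, max_attempts=3):
    """Call send(message), retrying on timeout; return (reply, attempts_used)."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return send(message), attempt
        except TimeoutError:
            if attempt == max_attempts:
                # The fault did not go away on retry, so it is not transient.
                raise


# One lost transmission: the second attempt succeeds.
reply, attempts = send_with_retry(make_transient_sender(1), "hello")
```

A fault that survives every retry is re-raised to the caller, which is roughly where the distinction between transient and permanent faults becomes visible to the software.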

An intermittent fault occurs, then vanishes of its own accord, then reappears, and so on. A loose contact on a connector will often cause an intermittent fault. Intermittent faults cause a great deal of aggravation because they are difficult to diagnose. Typically, whenever the fault doctor shows up, the system works perfectly.

A permanent fault is one that continues to exist until the faulty component is repaired. Burnt-out chips, software bugs, and disk head crashes often cause permanent faults.

The goal of designing and building fault-tolerant systems is to ensure that the system as a whole continues to function correctly, even in the presence of faults. This aim is quite different from simply engineering the individual components to be highly reliable, but allowing (even expecting) the system to fail if one of the components does so.

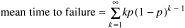

Faults and failures can occur at all levels: transistors, chips, boards, processors, operating systems, user programs, and so on. Traditional work in the area of fault tolerance has been concerned mostly with the statistical analysis of electronic component faults. Very briefly, if some component has a probability $p$ of malfunctioning in a given second of time, the probability of it not failing for $k$ consecutive seconds and then failing is $p(1-p)^k$. The expected time to failure is then given by the formula

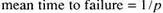

Using the well-known equation for an infinite sum starting at $k = 1$: $\sum_{k=1}^{\infty} \alpha^k = \alpha/(1-\alpha)$, substituting $\alpha = 1-p$, differentiating both sides of the resulting equation with respect to $p$, and multiplying through by $-p$, we see that the mean time to failure is $1/p$.
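The steps just described can be written out explicitly. This is a sketch under two assumptions: the symbol $\alpha$ is used for the geometric-series variable, and the expected time to failure is taken to be the sum $\sum_{k=1}^{\infty} k\,p\,(1-p)^{k-1}$, where $k$ is the second in which the failure occurs. It holds for $0 < p < 1$.

$$
\begin{aligned}
\sum_{k=1}^{\infty} \alpha^{k} &= \frac{\alpha}{1-\alpha} \\
\sum_{k=1}^{\infty} (1-p)^{k} &= \frac{1-p}{p} = \frac{1}{p} - 1 && (\text{substitute } \alpha = 1-p) \\
-\sum_{k=1}^{\infty} k\,(1-p)^{k-1} &= -\frac{1}{p^{2}} && (\text{differentiate with respect to } p) \\
\sum_{k=1}^{\infty} k\,p\,(1-p)^{k-1} &= \frac{1}{p} && (\text{multiply through by } -p)
\end{aligned}
$$

The left-hand side of the last line is the expected time to failure, so the mean time to failure is $1/p$ seconds.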

For example, if the probability of a crash is $10^{-6}$ per second, the mean time to failure is $10^6$ sec, or about 11.6 days.
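The arithmetic can be checked with a short Python sketch. The function names are hypothetical, and the truncated sum assumes the expected time to failure has the form $\sum_{k} k\,p\,(1-p)^{k-1}$; it is only a numerical sanity check of the closed form $1/p$, not part of the original text.

```python
SECONDS_PER_DAY = 86_400


def mttf_closed_form(p):
    """Mean time to failure in seconds, given per-second failure probability p."""
    return 1.0 / p


def mttf_truncated_sum(p, terms):
    """Approximate sum over k = 1..terms of k * p * (1 - p)**(k - 1)."""
    total = 0.0
    survive = 1.0  # holds (1 - p)**(k - 1) for the current k
    for k in range(1, terms + 1):
        total += k * p * survive
        survive *= 1.0 - p
    return total


# The example from the text: p = 10^-6 per second.
mttf_seconds = mttf_closed_form(1e-6)
mttf_days = mttf_seconds / SECONDS_PER_DAY  # roughly 11.6 days

# Numerical check with a larger p so the sum converges in few terms.
approx = mttf_truncated_sum(0.01, 5000)  # should be very close to 1 / 0.01
```

A larger `p` is used for the truncated sum only because the series for $p = 10^{-6}$ would need many millions of terms to converge.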
