Book: Mastering VMware® Infrastructure 3

ESX Server Disk Partitioning

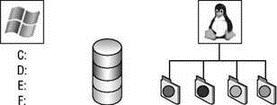

Before we offer step-by-step instructions for installing ESX Server, it is important to review some of the functional components of the disk architecture upon which ESX Server will be installed. Because of its roots in Red Hat Linux, ESX Server does not use drive letters to represent the partitioning of the physical disks. Instead, like Linux, ESX Server uses mount points to represent the various partitions. A mount point associates a directory with a partition on the physical disk. Mounting various directories under the root file system on their own partitions protects the root file system, because no single directory can consume so much space that the root becomes full. Since most folks are familiar with the Microsoft Windows operating system, consider the following example. Suppose you have a server that runs Windows with the standard C: drive as its system volume. What happens when the C drive runs out of space? Without going into detail, let's just leave the answer as a simple one: bad things. Yes, bad things happen when the C drive of a Windows computer runs out of space. In ESX Server, as noted, there is no C drive. The root of the operating system file structure is called exactly that: the root. The root is denoted by the / character. As with Windows, if the / (root) runs out of space, bad things happen. Figure 2.2 compares Windows disk partitioning and notation with the Linux disk partitioning and notation methods.

Figure 2.2 Windows and Linux represent disk partitions in different ways. Windows, by default, uses drive letters, while Linux uses mount points.

In addition, because of the standard x86 architecture, the disk partitioning strategy for ESX Server involves creating three primary partitions and an extended partition that contains multiple logical partitions. The standard x86 (MBR) partition table has only four slots for primary partitions; to hold more than four partitions, one of those slots must be used for an extended partition, which leaves room for at most three other primary partitions.
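The slot rule above can be checked mechanically. The following is a minimal sketch, assuming a simplified model of an MBR table (four primary slots, at most one of them extended, logical partitions only inside an extended one); the `Partition` class and `validate_mbr_layout` function are hypothetical names for illustration, and which ESX partitions are placed in primary slots is an illustrative assumption, not necessarily what the installer chooses.

```python
from dataclasses import dataclass

# Simplified MBR model: four primary slots, one of which may hold an
# extended partition that in turn contains logical partitions.
MAX_PRIMARY_SLOTS = 4


@dataclass
class Partition:
    name: str
    kind: str  # "primary", "extended", or "logical"


def validate_mbr_layout(parts: list[Partition]) -> None:
    """Raise ValueError if the layout cannot be expressed in an MBR table."""
    slots = [p for p in parts if p.kind in ("primary", "extended")]
    extended = [p for p in parts if p.kind == "extended"]
    logical = [p for p in parts if p.kind == "logical"]
    if len(slots) > MAX_PRIMARY_SLOTS:
        raise ValueError(
            f"{len(slots)} primary/extended entries; MBR allows {MAX_PRIMARY_SLOTS}"
        )
    if len(extended) > 1:
        raise ValueError("MBR allows at most one extended partition")
    if logical and not extended:
        raise ValueError("logical partitions require an extended partition")


# Hypothetical assignment of the six ESX partitions: three primary plus
# one extended partition holding three logical partitions.
esx_layout = [
    Partition("/boot", "primary"),
    Partition("/", "primary"),
    Partition("VMFS3", "primary"),
    Partition("extended", "extended"),
    Partition("swap", "logical"),
    Partition("/var/log", "logical"),
    Partition("vmkcore", "logical"),
]
validate_mbr_layout(esx_layout)  # no exception: 3 primary + 1 extended

# Making all six partitions primary needs more slots than MBR provides.
all_primary = [Partition(p.name, "primary") for p in esx_layout if p.kind != "extended"]
try:
    validate_mbr_layout(all_primary)
except ValueError as err:
    print(err)
```

The second call fails because six primary entries cannot fit in four slots, which is why the extended partition exists at all.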

Allow Me

It is important to understand the disk architecture for ESX Server; however, as you will soon see, the installation wizard provides a selection that creates all the proper partitions automatically.

With that said, the partitions created are enough for ESX Server 3.5 to run properly, but there is room for customizing the defaults. The default partitioning strategy for ESX Server 3.5 is shown in Table 2.2.

Table 2.2: Default ESX Partition Scheme

| Mount point name | Type | Size |
|---|---|---|
| /boot | Ext3 | 100MB |
| / | Ext3 | 5000MB (5GB) |
| (none) | VMFS3 | Varies |
| (none) | Swap | 544MB |
| /var/log | Ext3 | 2000MB (2GB) |
| (none) | vmkcore | 100MB |

The /boot Partition

The /boot partition, as its name suggests, stores all the files necessary to boot an ESX Server. The default size of 100MB is ample space for the necessary files. This 100MB size, however, is twice the size of the default boot partition created during the installation of the ESX 2 product. It is not uncommon to find recommendations to double it again to 200MB in anticipation of a future increase. By no means is this a requirement; it is just a suggestion. The reasoning is that an existing installation would then already be configured to support the next version of ESX, presumably ESX 4.0.

The / Partition

The / partition is the root of the Service Console operating system. We have already alluded to the importance of the / (root) of the file system, but now we should detail the implications of its configuration. Is 5GB enough for the / of the console operating system? The obvious answer is that 5GB must be enough if that is what VMware chose as the default. The minimum size of the / partition is 2.5GB, so the default is twice the minimum. So why change the size of the / partition? Keep in mind that the / partition is where any third-party applications would install by default. This means that six months, eight months, or a year from now, when there are dozens of third-party applications available for ESX Server, all of these applications will likely be installed into the / partition. As you can imagine, 5GB can be used rather quickly. One of the last things on any administrator's to-do list is reinstalling each of their ESX Servers. Planning for future growth and the opportunity to install third-party programs into the Service Console means creating a / partition with plenty of room to grow. I, as well as many other consultants, often recommend that the / partition be given more than the default 5GB of space. It is not uncommon for virtualization architects to suggest root partition sizes of 20GB to 25GB. However, the most important factor is to choose a size that fits your comfort level for growth.

The Swap Partition

The swap partition, as the name suggests, is the location of the Service Console swap file. This partition defaults to 544MB. As a general rule, swap space is sized at two times the memory allocated to the operating system, and the same holds true for ESX Server: the swap partition is 544MB by default because the Service Console is allocated 272MB of RAM by default. By today's standards, 272MB of RAM seems low, but only because we are used to Windows servers requiring more memory for better performance. The Service Console is not as memory intensive as Windows operating systems can be. This is not to say that 272MB is always enough. Continuing with ideas from the previous section, if the future of the ESX Server deployment includes the installation of third-party products into the Service Console, then additional RAM will certainly be warranted. Unlike Windows or Linux, the Service Console is limited to a maximum of 800MB of RAM. The Post-Installation Configuration section of this chapter will show exactly how to make this change, but it is important to plan for it during the installation so that the swap partition can be sized accordingly. If the Service Console is to be raised to the 800MB maximum, then the swap partition should be increased to 1600MB (2 × 800MB).
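The two-times-RAM rule of thumb described above reduces to a one-line calculation bounded by the Service Console memory ceiling. This is a minimal sketch using the default (272MB) and maximum (800MB) values quoted in the text; the function name `recommended_swap_mb` is hypothetical, and the rule itself is a sizing guideline, not something ESX Server enforces.

```python
# Service Console memory values quoted in the text (MB).
DEFAULT_CONSOLE_RAM_MB = 272
MAX_CONSOLE_RAM_MB = 800


def recommended_swap_mb(console_ram_mb: int = DEFAULT_CONSOLE_RAM_MB) -> int:
    """Size the swap partition at twice the Service Console RAM allocation."""
    if not 0 < console_ram_mb <= MAX_CONSOLE_RAM_MB:
        raise ValueError(f"Service Console RAM must be 1-{MAX_CONSOLE_RAM_MB}MB")
    return 2 * console_ram_mb


print(recommended_swap_mb())                    # 544 (installer default)
print(recommended_swap_mb(MAX_CONSOLE_RAM_MB))  # 1600 (planned maximum)
```

Sizing swap for the maximum at install time avoids having to repartition later if the Service Console memory is raised.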

The /var/log Partition

The /var/log partition is created with a default size of 2000MB, or 2GB of space. This is typically a safe value for this partition. However, I recommend that you make a change to this default configuration. ESX Server uses the /var directory during patch management tasks. Since the default partition is mounted at /var/log, everything else under /var still resides on the / (root) partition. Therefore, space consumed in /var outside /var/log is space consumed in / (root). For this reason, I recommend that you change the mount point to /var instead of /var/log and increase its size to a larger value, such as 10GB or 15GB. This alteration provides ample space for patch management without jeopardizing the / (root) file system, while still providing a dedicated partition to store log data, because /var/log then resides on the new /var partition.
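Which partition a file lands on is decided by the longest mount point that is a prefix of its path. The sketch below illustrates why a /var/log mount leaves the rest of /var on the root partition; it uses only the mount points from Table 2.2 and the custom scheme recommended here, and both the function `owning_mount` and the staging path `/var/patch-staging` are hypothetical examples, not actual ESX names.

```python
import posixpath


def owning_mount(path: str, mounts: list[str]) -> str:
    """Return the mount point that is the longest directory prefix of path.

    Assumes "/" is always mounted, so it is the fallback answer.
    """
    path = posixpath.normpath(path)
    best = "/"
    for mount in mounts:
        # Match whole path components only: /var/log must not claim /var/logs.
        if path == mount or path.startswith(mount.rstrip("/") + "/"):
            if len(mount) > len(best):
                best = mount
    return best


default_mounts = ["/boot", "/", "/var/log"]  # Table 2.2 file-system mounts
custom_mounts = ["/boot", "/", "/var"]       # /var mounted instead of /var/log

staging = "/var/patch-staging"  # hypothetical patch staging directory
print(owning_mount(staging, default_mounts))             # /
print(owning_mount(staging, custom_mounts))              # /var
print(owning_mount("/var/log/messages", custom_mounts))  # /var
```

With the default scheme the staging directory consumes root space; with /var as its own mount point, both patch data and logs stay off the root file system.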

The vmkcore Partition

The vmkcore partition is the dump partition where ESX Server writes information about a system halt. We are all familiar with the infamous Windows blue screen of death (BSOD), either from experience or from the multitude of jokes that arose from its ever-so-frequent occurrences. When an ESX Server crashes, it, like Windows, writes detailed information about the system crash. This information is written to the vmkcore-type partition. Unlike Windows, an ESX Server system crash results in a purple screen of death (PSOD) that many administrators have never seen. The size of this partition does not need to be altered.

The VMFS3 Partition

You might have noticed that I skipped over the VMFS3 partition. I did so for a reason. The VMFS3 partition is created, by default, with a size equal to the disk size minus the default sizes of all other partitions. In other words, ESX Server creates all the other partitions and then uses the remaining free space as local VMFS3 storage. In most VI3 deployments, the local VMFS3 storage will be of little significance compared with the dedicated storage devices that will be in place. Fibre Channel and iSCSI storage devices, which provide the shared storage that VMotion, DRS, and HA require, reduce the need for large amounts of local VMFS3 storage.
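The "remaining free space" rule can be expressed as simple subtraction. This is a minimal sketch using the fixed sizes from Table 2.2 and Table 2.3; the 73,000MB disk and the function name `local_vmfs3_mb` are hypothetical, and the arithmetic ignores partition-table overhead, alignment, and the MB/MiB distinction that a real installer has to account for.

```python
# Fixed-size partitions in MB (everything except VMFS3).
DEFAULT_SCHEME = {"/boot": 100, "/": 5000, "swap": 544, "/var/log": 2000, "vmkcore": 100}
CUSTOM_SCHEME = {"/boot": 200, "/": 25000, "swap": 1600, "/var": 12000, "vmkcore": 100}


def local_vmfs3_mb(disk_mb: int, fixed: dict[str, int]) -> int:
    """VMFS3 receives whatever space remains after the fixed partitions."""
    remaining = disk_mb - sum(fixed.values())
    if remaining <= 0:
        raise ValueError("disk too small for the fixed-size partitions")
    return remaining


disk_mb = 73_000  # hypothetical local disk size
print(local_vmfs3_mb(disk_mb, DEFAULT_SCHEME))  # 65256
print(local_vmfs3_mb(disk_mb, CUSTOM_SCHEME))   # 34100
```

The larger custom partitions cost roughly 31GB of local VMFS3 space on this hypothetical disk, a trade-off that matters little when virtual machines live on shared storage.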

All That Space and Nothing to Do

Although local disk space may seem of little use in the face of a dedicated storage network, there are ways to take advantage of local storage rather than let it go to waste. LeftHand Networks (http://www.lefthandnetworks.com) has developed a virtual storage appliance (VSA) that presents local ESX Server storage space as an iSCSI target. In addition, this space can be combined with local storage on other servers to provide data redundancy. And the best part of being able to present local storage as virtual shared storage is that VMotion, DRS, and HA become available.

Table 2.3 provides a customized partitioning strategy that offers strong support for any future needs in an ESX Server installation.

Table 2.3: Custom ESX Partition Scheme

| Mount point name | Type | Size |
|---|---|---|
| /boot | Ext3 | 200MB |
| / | Ext3 | 25,000MB (25GB) |
| (none) | VMFS3 | Varies |
| (none) | Swap | 1,600MB (1.6GB) |
| /var | Ext3 | 12,000MB (12GB) |
| (none) | vmkcore | 100MB |

Local Disks, Redundant Disks

Just because local VMFS3 storage might not hold much significance in an ESX Server deployment does not mean that all local storage is irrelevant. The availability of the / (root) file system, vmkcore, Service Console swap, and so forth is critical to a functioning ESX Server. For the safety of the installed Service Console, always install ESX Server on a hardware-based RAID array. Unless you intend to use a product like LeftHand Networks' VSA, there is little need to build a RAID 5 array with three or more large hard drives. A RAID 1 (mirrored) array provides the needed reliability while minimizing the disk requirements.
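The disk-count trade-off between RAID 1 and RAID 5 comes down to usable capacity. This is a minimal sketch, assuming identical disks, a two-disk RAID 1 mirror, and single-parity RAID 5; the function name `usable_capacity_gb` and the disk sizes are hypothetical, and real controllers reserve some space for metadata.

```python
def usable_capacity_gb(level: str, disks: int, disk_gb: int) -> int:
    """Usable capacity for identical disks under RAID 1 or RAID 5."""
    if level == "RAID1":
        if disks != 2:
            raise ValueError("this sketch models RAID 1 as a two-disk mirror")
        return disk_gb  # the second disk is an exact copy of the first
    if level == "RAID5":
        if disks < 3:
            raise ValueError("RAID 5 needs at least three disks")
        return (disks - 1) * disk_gb  # one disk's worth of space holds parity
    raise ValueError(f"unsupported RAID level: {level}")


print(usable_capacity_gb("RAID1", 2, 73))   # 73
print(usable_capacity_gb("RAID5", 3, 146))  # 292
```

Both layouts survive a single disk failure; RAID 1 simply does so with fewer drives, which suits a Service Console install that needs reliability more than capacity.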

ESX Server 3.5 offers a CD-based installation and an unattended installation that uses the same kickstart file technology commonly used for unattended Linux installations. We'll begin by looking at a standard CD installation and then transition into the automated ESX Server installation method.
