Book: Distributed Operating Systems

1.3.1. Bus-Based Multiprocessors

Bus-based multiprocessors consist of some number of CPUs all connected to a common bus, along with a memory module. A simple configuration is to have a high-speed backplane or motherboard into which CPU and memory cards can be inserted. A typical bus has 32 or 64 address lines, 32 or 64 data lines, and perhaps 32 or more control lines, all of which operate in parallel. To read a word of memory, a CPU puts the address of the word it wants on the bus address lines, then puts a signal on the appropriate control lines to indicate that it wants to read. The memory responds by putting the value of the word on the data lines to allow the requesting CPU to read it in. Writes work in a similar way.

Since there is only one memory, if CPU A writes a word to memory and then CPU B reads that word back a microsecond later, B will get the value just written. A memory that has this property is said to be coherent. Coherence plays an important role in distributed operating systems in a variety of ways that we will study later.

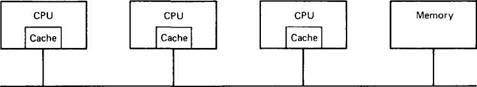

The problem with this scheme is that with as few as 4 or 5 CPUs, the bus will usually be overloaded and performance will drop drastically. The solution is to add a high-speed cache memory between the CPU and the bus, as shown in Fig. 1-5. The cache holds the most recently accessed words. All memory requests go through the cache. If the word requested is in the cache, the cache itself responds to the CPU, and no bus request is made. If the cache is large enough, the probability of success, called the hit rate, will be high, and the amount of bus traffic per CPU will drop dramatically, allowing many more CPUs in the system. Cache sizes of 64K to 1M are common, which often gives a hit rate of 90 percent or more.

Fig. 1-5. A bus-based multiprocessor.
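The effect of the hit rate on bus load can be made concrete with a little arithmetic. The sketch below is only a rough model under stated assumptions: the function names, the bus capacity, and the per-CPU reference rate are hypothetical, every reference is treated as a read, and contention effects are ignored.

```python
def bus_requests_per_cpu(references_per_sec: float, hit_rate: float) -> float:
    """Memory references that miss the cache and therefore reach the bus."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return references_per_sec * (1.0 - hit_rate)


def max_cpus(bus_capacity: float, references_per_sec: float, hit_rate: float) -> float:
    """Number of CPUs the bus can serve before saturating (inf if nothing misses)."""
    per_cpu = bus_requests_per_cpu(references_per_sec, hit_rate)
    return float("inf") if per_cpu == 0 else bus_capacity / per_cpu


# Hypothetical figures: a bus that handles 4 million requests per second,
# and CPUs that each issue 1 million memory references per second.
without_cache = max_cpus(4e6, 1e6, hit_rate=0.0)  # every reference uses the bus
with_cache = max_cpus(4e6, 1e6, hit_rate=0.9)     # only 1 in 10 uses the bus
print(without_cache, with_cache)
```

With these made-up numbers the bus saturates at 4 CPUs without caches and at roughly 40 with a 90 percent hit rate, which matches the tenfold drop in traffic per CPU that the text describes.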

However, the introduction of caches also brings a serious problem with it. Suppose that two CPUs, A and B, each read the same word into their respective caches. Then A overwrites the word. When B next reads that word, it gets the old value from its cache, not the value A just wrote. The memory is now incoherent, and the system is difficult to program.
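The stale read described above can be reproduced with a toy model. This is a minimal sketch, not a description of real hardware: `NaiveCache` is a hypothetical class, memory is a plain dictionary, and the write is assumed to reach main memory as well, which shows that B's copy goes stale even then.

```python
class NaiveCache:
    """A private cache with no coherence mechanism (hypothetical model)."""

    def __init__(self, memory: dict):
        self.memory = memory  # shared main memory: address -> value
        self.lines = {}       # this CPU's cached copies: address -> value

    def read(self, addr):
        if addr not in self.lines:           # miss: fetch the word from memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]              # hit: answered from the cache

    def write(self, addr, value):
        self.lines[addr] = value             # update this CPU's own copy
        self.memory[addr] = value            # and main memory


memory = {0x10: 1}
cache_a, cache_b = NaiveCache(memory), NaiveCache(memory)

cache_a.read(0x10)           # A caches the word
cache_b.read(0x10)           # B caches the same word
cache_a.write(0x10, 2)       # A overwrites it
stale = cache_b.read(0x10)   # B still sees its old cached copy
print(stale, memory[0x10])
```

B's read is served from its own cache, so it returns the old value even though memory already holds the new one.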

Many researchers have studied this problem, and various solutions are known. Below we will sketch one of them. Suppose that the cache memories are designed so that whenever a word is written to the cache, it is written through to memory as well. Such a cache is, not surprisingly, called a write-through cache. In this design, cache hits for reads do not cause bus traffic, but cache misses for reads, and all writes, hits and misses, cause bus traffic.
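The traffic pattern of a write-through cache can be sketched as follows. The `Bus` and `WriteThroughCache` classes are hypothetical, and the model assumes that a write miss also places the word in the cache, a detail the text does not specify.

```python
class Bus:
    """Shared bus in front of main memory; counts every transaction."""

    def __init__(self, memory: dict):
        self.memory = memory
        self.transactions = 0

    def read(self, addr):
        self.transactions += 1
        return self.memory[addr]

    def write(self, addr, value):
        self.transactions += 1
        self.memory[addr] = value


class WriteThroughCache:
    def __init__(self, bus: Bus):
        self.bus = bus
        self.lines = {}  # cached words: address -> value

    def read(self, addr):
        if addr in self.lines:
            return self.lines[addr]       # read hit: no bus traffic
        value = self.bus.read(addr)       # read miss: one bus transaction
        self.lines[addr] = value
        return value

    def write(self, addr, value):
        self.lines[addr] = value          # assumption: allocate on write miss
        self.bus.write(addr, value)       # every write goes through to memory


bus = Bus({0: 5, 1: 6})
cache = WriteThroughCache(bus)
cache.read(0)       # miss, uses the bus
cache.read(0)       # hit, stays off the bus
cache.write(0, 7)   # write hit, still uses the bus
cache.write(1, 9)   # write miss, uses the bus
print(bus.transactions)
```

Of the four operations, only the read hit stays off the bus, so the counter ends at three.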

In addition, all caches constantly monitor the bus. Whenever a cache sees a write occurring to a memory address present in its cache, it either removes that entry from its cache, or updates the cache entry with the new value. Such a cache is called a snoopy cache (or sometimes, a snooping cache) because it is always "snooping" (eavesdropping) on the bus. A design consisting of snoopy write-through caches is coherent and is invisible to the programmer. Nearly all bus-based multiprocessors use either this architecture or one closely related to it. Using it, it is possible to put about 32 or possibly 64 CPUs on a single bus. For more about bus-based multiprocessors, see Lilja (1993).
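A snoopy write-through design can be modeled in a few lines. The sketch below is illustrative only: `SnoopyBus`, `SnoopyCache`, and the `update` flag are hypothetical names, the bus is a simple broadcast loop, and timing and arbitration are ignored. The flag selects between the two reactions the text mentions, removing the entry or updating it.

```python
class SnoopyBus:
    """Shared bus that lets every attached cache observe each write."""

    def __init__(self, memory: dict):
        self.memory = memory
        self.caches = []

    def attach(self, cache):
        self.caches.append(cache)

    def read(self, addr):
        return self.memory[addr]

    def write(self, addr, value, source):
        self.memory[addr] = value            # write-through to main memory
        for cache in self.caches:
            if cache is not source:          # all other caches snoop the write
                cache.snoop_write(addr, value)


class SnoopyCache:
    def __init__(self, bus: SnoopyBus, update: bool = False):
        self.bus = bus
        self.update = update                 # True: update entry, False: remove it
        self.lines = {}
        bus.attach(self)

    def read(self, addr):
        if addr not in self.lines:           # miss: fetch over the bus
            self.lines[addr] = self.bus.read(addr)
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        self.bus.write(addr, value, source=self)

    def snoop_write(self, addr, value):
        if addr not in self.lines:
            return                           # word not cached here: nothing to do
        if self.update:
            self.lines[addr] = value         # refresh the cached copy
        else:
            del self.lines[addr]             # drop it; next read misses


bus = SnoopyBus({0x10: 1})
a = SnoopyCache(bus)
b = SnoopyCache(bus)                 # removes entries on a snooped write
c = SnoopyCache(bus, update=True)    # updates entries on a snooped write
for cache in (a, b, c):
    cache.read(0x10)
a.write(0x10, 2)
print(b.read(0x10), c.read(0x10))
```

After A's write, B's entry has been removed and is refetched from memory on the next read, while C's entry was updated in place, so both see the new value.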
