Book: Programming with POSIX® Threads

5.6.2 One-to-one (kernel level)

One-to-one thread mapping is also sometimes called a "kernel thread" implementation. The Pthreads library assigns each thread to a kernel entity. It generally must use blocking kernel functions to wait on mutexes and condition variables. While synchronization may occur either within the kernel or in user mode, thread scheduling occurs within the kernel.

Pthreads threads can take full advantage of multiprocessor hardware in a one-to-one implementation without any extra effort on your part, such as separating your code into multiple processes. When a thread blocks in the kernel, it does not affect other threads any more than the blocking of a normal UNIX process affects other processes. One thread can even process a page fault without affecting other threads.
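The independence of blocked threads can be illustrated with a small sketch. The names `reader_arg_t`, `blocking_reader`, and `wake_blocked_reader` are hypothetical and not from the book. One thread blocks in `read` on a pipe while the creating thread keeps running and writes the byte that releases it. This only demonstrates the behavior under the assumption that a blocking `read` suspends just the calling thread; if the write happens before the reader reaches `read`, the reader simply does not block.

```c
#include <assert.h>
#include <pthread.h>
#include <unistd.h>

/* Hypothetical argument block for the reader thread. */
typedef struct {
    int read_fd;   /* read end of the pipe */
    char byte;     /* byte received, or '\0' if nothing arrived */
} reader_arg_t;

/* Blocks in the kernel until a byte (or end-of-file) arrives on the pipe. */
static void *blocking_reader(void *arg)
{
    reader_arg_t *r = arg;
    assert(r != NULL);
    if (read(r->read_fd, &r->byte, 1) != 1)
        r->byte = '\0';
    return NULL;
}

/* Returns 1 if the reader thread received the byte written by the caller,
 * 0 otherwise. The caller keeps running while the reader may be blocked. */
int wake_blocked_reader(void)
{
    int fds[2];
    reader_arg_t r = { -1, '\0' };
    pthread_t tid;
    int ok = 0;

    if (pipe(fds) != 0)
        return 0;
    r.read_fd = fds[0];

    if (pthread_create(&tid, NULL, blocking_reader, &r) == 0) {
        char c = 'x';
        ssize_t written = write(fds[1], &c, 1);
        close(fds[1]);             /* reader sees EOF if the write failed */
        pthread_join(tid, NULL);
        ok = (written == 1 && r.byte == 'x');
    } else {
        close(fds[1]);
    }
    close(fds[0]);
    return ok;
}
```

Calling `wake_blocked_reader()` from `main` is enough to try it. On an implementation where a blocking system call stalled every thread in the process, the caller could not make progress to the `write` once the reader had blocked.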

One-to-one implementations suffer from two main problems. The first is that they do not scale well. That is, each thread in your application is a kernel entity. Because kernel memory is precious, kernel objects such as processes and threads are often limited by preallocated arrays, and most implementations will limit the number of threads you can create. They will also limit the number of threads that can be created on the entire system, so depending on what other processes are doing, your process may not be able to reach its own limit.
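Because these limits exist, it is worth checking the result of every `pthread_create` call. The following is a minimal sketch; `thread_limit` and `start_thread_checked` are hypothetical helper names. POSIX specifies that `pthread_create` returns `EAGAIN` when the system lacks the resources for another thread or a thread limit would be exceeded, and that `sysconf(_SC_THREAD_THREADS_MAX)` reports the per-process limit, returning -1 when the system has no fixed value to report.

```c
#include <assert.h>
#include <errno.h>
#include <pthread.h>
#include <unistd.h>

/* Trivial start routine used only to exercise thread creation. */
static void *noop(void *arg)
{
    return arg;
}

/* Per-process thread limit as reported by the system,
 * or -1 if no fixed limit is reported. */
long thread_limit(void)
{
    return sysconf(_SC_THREAD_THREADS_MAX);
}

/* Tries to start one thread.
 * Returns 0 on success, 1 if a resource or thread limit was hit (EAGAIN),
 * and -1 for any other error. */
int start_thread_checked(pthread_t *tid)
{
    int rc;

    assert(tid != NULL);
    rc = pthread_create(tid, NULL, noop, NULL);
    if (rc == 0)
        return 0;
    if (rc == EAGAIN)
        return 1;   /* limit reached: retry later or reduce thread count */
    return -1;
}
```

A result of 1 does not say whether the process limit or the system-wide limit was reached; the application can only back off or make do with fewer threads.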

The second problem is that blocking on a mutex and waiting on a condition variable, which happen frequently in many applications, are substantially more expensive on most one-to-one implementations, because they require entering the machine's protected kernel mode. Note that locking a mutex, when it was not already locked, or unlocking a mutex, when there are no waiting threads, may be no more expensive than on a many-to-one implementation, because on most systems those functions can be completed in user mode.
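To get a rough idea of how often a program takes the expensive blocking path, one option is to try the lock first and count the failures. This is a sketch, not a technique from the book; `lock_counting_blocks` is a hypothetical helper. It relies only on `pthread_mutex_trylock`, which returns `EBUSY` when the mutex is already locked.

```c
#include <assert.h>
#include <errno.h>
#include <pthread.h>

/* Locks *m, incrementing *blocked whenever the mutex was already held
 * and the caller therefore had to fall back to a blocking lock.
 * *blocked should be private to the calling thread.
 * Returns 0 on success or the pthread error number. */
int lock_counting_blocks(pthread_mutex_t *m, unsigned long *blocked)
{
    int rc;

    assert(m != NULL && blocked != NULL);
    rc = pthread_mutex_trylock(m);      /* succeeds if the mutex is free */
    if (rc == EBUSY) {
        (*blocked)++;                   /* contended: we may have to wait */
        rc = pthread_mutex_lock(m);     /* may block in the kernel */
    }
    return rc;
}
```

The count is an upper bound on actual blocking, since the holder may release the mutex between the two calls. A count that stays near zero suggests that most lock operations in the program are uncontended.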

A one-to-one implementation can be a good choice for CPU-bound applications, which don't block very often. Many high-performance parallel applications begin by creating a worker thread for each physical processor in the system, and, once started, the threads run independently for a substantial time period. Such applications will work well because they do not strain the kernel by creating a lot of threads, and they don't require a lot of calls into the kernel to block and unblock their threads.
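The worker-per-processor pattern can be sketched as follows. The names `slice_t`, `sum_slice`, and `parallel_sum` are hypothetical, and the workload (summing the integers 1 through n) is only a stand-in for real CPU-bound work. The sketch assumes `sysconf(_SC_NPROCESSORS_ONLN)` is available; it is a widely supported extension rather than part of POSIX. Each worker writes only to its own slice, so no mutex is needed while the threads run.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>
#include <unistd.h>

/* Hypothetical work item: one disjoint slice of 1..n per worker. */
typedef struct {
    long first;      /* first integer in the slice */
    long last;       /* last integer in the slice (inclusive) */
    long long sum;   /* result, written only by the owning worker */
} slice_t;

/* CPU-bound worker: runs without blocking until its slice is done. */
static void *sum_slice(void *arg)
{
    slice_t *s = arg;
    long long total = 0;

    assert(s != NULL);
    for (long i = s->first; i <= s->last; i++)
        total += i;
    s->sum = total;
    return NULL;
}

/* Sums 1..n using one thread per online processor.
 * Returns the sum, or -1 if memory or thread creation failed. */
long long parallel_sum(long n)
{
    long ncpu = sysconf(_SC_NPROCESSORS_ONLN);   /* assumed available */
    if (ncpu < 1)
        ncpu = 1;
    if (ncpu > n)
        ncpu = (n > 0) ? n : 1;   /* never more workers than items */

    pthread_t *tids = malloc((size_t)ncpu * sizeof *tids);
    slice_t *slices = malloc((size_t)ncpu * sizeof *slices);
    if (tids == NULL || slices == NULL) {
        free(tids);
        free(slices);
        return -1;
    }

    long chunk = n / ncpu;
    long started = 0;
    int failed = 0;
    for (long t = 0; t < ncpu; t++) {
        slices[t].first = t * chunk + 1;
        slices[t].last = (t == ncpu - 1) ? n : (t + 1) * chunk;
        slices[t].sum = 0;
        if (pthread_create(&tids[t], NULL, sum_slice, &slices[t]) != 0) {
            failed = 1;
            break;
        }
        started++;
    }

    /* The only blocking calls: one join per worker at the very end. */
    long long total = 0;
    for (long t = 0; t < started; t++) {
        pthread_join(tids[t], NULL);
        total += slices[t].sum;
    }

    free(tids);
    free(slices);
    return failed ? -1 : total;
}
```

Calling `parallel_sum(1000)` yields 500500 regardless of the processor count. The program enters the kernel for threading purposes only to create and join the workers, which is the usage pattern the paragraph above describes as a good fit for one-to-one implementations.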

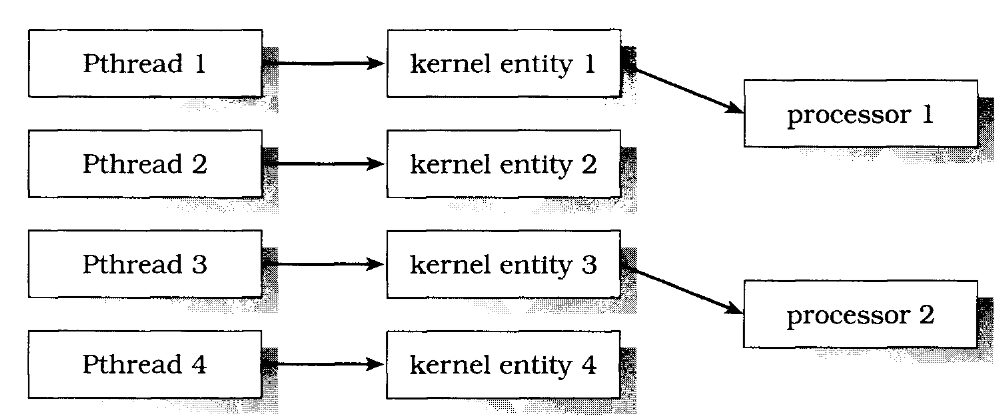

Figure 5.6 shows the mapping of Pthreads threads (left column) to kernel entities (middle column) to physical processors (right column). In this case, the process has four Pthreads threads, labeled "Pthread 1" through "Pthread 4." Each Pthreads thread is permanently bound to the corresponding kernel entity. The kernel schedules the four kernel entities (along with those from other processes) onto the two physical processors, labeled "processor 1" and "processor 2." The important characteristics of this model are shown in Table 5.3.

FIGURE 5.6 One-to-one thread mapping

| Advantages | Disadvantages |
| --- | --- |
| Can take advantage of multiprocessor hardware within a single process. | Relatively slow thread context switch (calls into kernel). |
| No latency during system service blocking. | Poor scaling when many threads are used, because each Pthreads thread takes kernel resources from the system. |

TABLE 5.3 One-to-one thread scheduling
