Book: Real-Time Concepts for Embedded Systems

15.7.4 Using Condition Variables to Synchronize between Readers and Writers

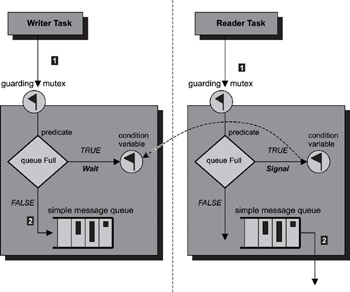

The design pattern shown in Figure 15.20 demonstrates the use of condition variables. A condition variable can be associated with the state of a shared resource. In this example, multiple tasks are trying to insert messages into a shared message queue. The predicate of the condition variable is 'the message queue is full.' Each writer task tries first to insert the message into the message queue. The task waits (and is blocked) if the message queue is currently full. Otherwise, the message is inserted, and the task continues its execution path.

Figure 15.20: Using condition variables for task synchronization.

Note that the message queue shown in Figure 15.20 is called a 'simple message queue.' For the sake of this example, the reader should assume that this message queue is a simple buffer with structured content. It is not the same type of message queue that the RTOS provides.

Dedicated reader (or consumer) tasks periodically remove messages from the message queue. After removing a message, the reader task signals on the condition variable if the message queue was full before the removal, in effect waking up a writer task that is blocked waiting on the condition variable. Listing 15.5 shows the pseudo code for reader tasks and Listing 15.6 shows the pseudo code for writer tasks.

Listing 15.5: Pseudo code for reader tasks.

    Lock(guarding_mutex)
    Remove message from message queue
    If (msgQueue Was Full)
        Signal(Condition_variable)
    Unlock(guarding_mutex)

Listing 15.6: Pseudo code for writer tasks.

    Lock(guarding_mutex)
    While (msgQueue is Full)
        Wait(Condition_variable)
    Produce message into message queue
    Unlock(guarding_mutex)
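The two listings can be tried out on a desktop with the following minimal sketch. It uses Python's `threading.Condition` as a stand-in for an RTOS condition variable. The names `SimpleMessageQueue`, `capacity`, and `run_demo` are hypothetical and do not come from the book. Two choices are assumptions of this sketch rather than part of the listings: the reader wakes all blocked writers (`notify_all`) instead of signaling a single one, so that every blocked writer rechecks the predicate, and `read` returns `None` when the queue is empty, a case the listings do not address.

```python
import threading
import time
from collections import deque


class SimpleMessageQueue:
    """Hypothetical fixed-capacity buffer, not an RTOS message queue."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = deque()
        self.guarding_mutex = threading.Lock()
        # Condition variable bound to the guarding mutex.
        # Predicate: "the message queue is full".
        self.condition = threading.Condition(self.guarding_mutex)

    def write(self, msg):
        """Writer task body, following Listing 15.6."""
        with self.guarding_mutex:                      # Lock
            while len(self.items) >= self.capacity:    # While full
                self.condition.wait()                  # Wait (releases mutex)
            self.items.append(msg)                     # Produce message
        # Unlock happens when the with-block exits.

    def read(self):
        """Reader task body, following Listing 15.5."""
        with self.guarding_mutex:                      # Lock
            if not self.items:
                return None                            # assumption: empty case
            was_full = len(self.items) >= self.capacity
            msg = self.items.popleft()                 # Remove message
            if was_full:
                # Assumption: broadcast instead of a single signal.
                self.condition.notify_all()
            return msg


def run_demo(num_writers=5, capacity=2):
    """Start more writers than the queue can hold, then drain it."""
    queue = SimpleMessageQueue(capacity)
    writers = [threading.Thread(target=queue.write, args=(i,))
               for i in range(num_writers)]
    for t in writers:
        t.start()
    received = []
    while len(received) < num_writers:
        msg = queue.read()
        if msg is None:
            time.sleep(0.001)   # nothing to read yet; let writers run
        else:
            received.append(msg)
    for t in writers:
        t.join()
    return sorted(received)


if __name__ == "__main__":
    print(run_demo())
```

The `while` loop around `wait` matters: a woken writer must recheck the predicate, because another writer may have refilled the queue before it reacquired the mutex.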

As Chapter 8 discusses, the call to event_receive is a blocking call. The calling task is blocked if the event register is empty when the call is made. Remember that the event register is a synchronous signal mechanism. The task might not run immediately when events are signaled to it, if a higher priority task is currently executing. Events from different sources are accumulated until the associated task resumes execution. At that point, the call returns with a snapshot of the state of the event register. The task operates on this returned value to determine which events have occurred.

Problematically, however, the event register cannot accumulate event occurrences of the same type before processing begins. If multiple timer ticks occur before the task resumes execution, the task sees only one timer tick event and misses the rest. Introducing a counting semaphore into the design can solve this problem. Soft timers, as Chapter 11 discusses, do not have stringent deadlines, but it is still important to track how many ticks have occurred. This way, the task can perform recovery actions, such as fast-forwarding time to reduce the drift.
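The difference between the two mechanisms can be illustrated with a small single-threaded simulation. This is only a sketch: `EventRegister`, `TIMER_TICK`, and `simulate` are hypothetical names, the register is modeled as a plain bit mask, and `receive` here returns immediately instead of blocking as `event_receive` does. The counting semaphore is Python's `threading.Semaphore`, standing in for an RTOS counting semaphore.

```python
import threading

TIMER_TICK = 0x01  # hypothetical event bit for the timer tick


class EventRegister:
    """Hypothetical model: one bit per event type, so repeats collapse."""

    def __init__(self):
        self.bits = 0

    def send(self, event):
        self.bits |= event          # setting an already-set bit changes nothing

    def receive(self):
        snapshot, self.bits = self.bits, 0   # return snapshot and clear
        return snapshot


def simulate(ticks_before_task_runs):
    register = EventRegister()
    tick_count = threading.Semaphore(0)      # counting semaphore, starts at 0

    # Timer side: several ticks arrive before the task gets to run.
    for _ in range(ticks_before_task_runs):
        register.send(TIMER_TICK)
        tick_count.release()                 # one count per tick

    # Task side: the register only says "at least one tick happened";
    # the semaphore tells how many.
    events = register.receive()
    ticks = 0
    if events & TIMER_TICK:
        while tick_count.acquire(blocking=False):
            ticks += 1
    return events, ticks


if __name__ == "__main__":
    print(simulate(3))
```

With three ticks pending, the register snapshot still carries a single `TIMER_TICK` bit, while draining the semaphore recovers the count of three.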

The data buffer in this design pattern is different from an RTOS-supplied message queue. Typically, a message queue has a built-in flow control mechanism. Assume that this message buffer is a custom data transfer mechanism that is not supplied by the RTOS.

Note that the lock call on the guarding mutex is a blocking call. A writer task or a reader task is blocked if it tries to lock the mutex while the mutex is already locked. This feature guarantees serialized access to the shared message queue. The wait operation and the signal operation are both atomic operations with respect to the predicate and the guarding mutex, as Chapter 8 discusses.
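One practical consequence of this atomicity is that a task blocked in the wait operation does not hold the guarding mutex, so a reader can still lock it and change the predicate. The following sketch checks this behavior with Python's `threading.Condition`, used here as a stand-in for an RTOS condition variable. The function name `wait_releases_mutex` and the `state` dictionary are hypothetical.

```python
import threading


def wait_releases_mutex():
    guarding_mutex = threading.Lock()
    condition = threading.Condition(guarding_mutex)
    state = {"queue_full": True}          # the predicate
    holding = threading.Event()

    def writer():
        with guarding_mutex:
            holding.set()                 # writer holds the mutex here
            while state["queue_full"]:
                condition.wait()          # mutex is released while blocked

    t = threading.Thread(target=writer, daemon=True)
    t.start()
    holding.wait()

    # The writer set `holding` while owning the mutex, so this acquire
    # can only succeed after the writer has blocked inside wait().
    got_mutex = guarding_mutex.acquire(timeout=2.0)
    if got_mutex:
        state["queue_full"] = False       # reader changes the predicate
        condition.notify()                # signal while holding the mutex
        guarding_mutex.release()

    t.join(timeout=2.0)
    return got_mutex and not t.is_alive()


if __name__ == "__main__":
    print(wait_releases_mutex())
```

If the wait operation kept the mutex locked, the second `acquire` would time out and the writer would never be released.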

In this example, the reader tasks create the condition for the writer tasks to proceed producing messages. The one-way condition creation of this design implies that either there are more writer tasks than there are reader tasks, or that the production of messages is faster than the consumption of these messages.
