Book: Agile Software Development

Light But Sufficient

The pieces of the puzzle are in place. We have seen:

Software development as a cooperative game of invention and communication

People as funky but good at looking around and taking initiative, communicating particularly well informally, face-to-face

Methodology as the set of conventions the team adopts, with different conventions suiting different sorts of projects

Light methodologies as delivering more quickly, but having to become heavier as the team size grows

Projects as unique ecosystems, a project's methodology needing to fit the project ecosystem

Everything fits together neatly, except for two questions: How light is right for any one project, and how do we do this on our project?

"Light but Sufficient" discusses how light is right for any one project, in particular, what it means to be too light. The target is to balance lightness with sufficiency.

"Agile" discusses the significance of certain project "sweet spots": colocation, proximity to users, experienced developers, and so on. Less agile mechanisms must be used as the project sits farther from those sweet spots. Virtual teams, in particular, lie far from the sweet spot, and so make agile, distributed development more difficult.

"Becoming Self-Adapting" describes a technique for evolving a light-but-sufficient, project-personal methodology quickly enough to be useful to the project. The key idea is to reflect every few weeks on what works well and what should be changed.

Light But Sufficient

The theory so far seems to say that we should use a mostly oral tradition to bind the huge amount of information generated within the project.

Common sense tells us that oral tradition is insufficient.

Looking for Documentation

A programmer told of his company rewriting their current core product because there is no documentation, no one left who knows how the system was built, and they are unable to make their next changes. He said he hopes there will be documentation after the project, this time. Another told of three projects, each of which will build on the previous. The three are not at the same location. He said that they can't possibly work on a strictly oral basis.

It is possible to have too little stickiness in the information at hand. It is time to revisit the Cooperative Game principle:

The primary goal is to deliver software; the secondary goal is to set up for the following game.

Reaching the primary goal is clear: if you don't deliver the software, it won't matter how nicely you have set up for the following game.

If, on the other hand, you deliver the software and do a poor job of setting up for the following game, you jeopardize that game.

The two are competing activities. Balancing the two competing activities relies on two arts.

The first art is guessing how to allocate resources to each goal. Ideally, documentation activities are deferred as long as possible, and then made as small as possible. Excessive documentation done too early delays the delivery of the software. If, however, too little documentation is done too late, the person who knows something needed for the next project has already vanished.

The second art is guessing how much can be bound in your group's oral tradition and how much has to be committed to archival documentation. Recall that we don't care, past a certain point, whether the models and other documentation are complete, correctly match the "real" world (whatever that is), or are up-to-date with the current version of the code. We care whether the people receiving them find them useful for their specific needs. The correct documentation is exactly that needed for the receiver to make her next move in the game. Any effort to make the models complete, correct and current past that point is a waste of money.

Usually, the people on the successful projects I have interviewed felt that they had succeeded "despite the obviously incomplete documents and sloppy processes" (their words, not mine). Viewed in our current light, however, we can guess that they succeeded exactly because the people made good choices in stopping work on certain communications as soon as they reached sufficiency and before diminishing returns set in. They made the paperwork adequate; they didn't polish it.

Adequate is a great condition if the team is in a race for an end goal and short on resources. Recall the programmer who said, "It is clear to me as I start creating use cases, object models and the like, that the work is doing some good. But at some point, it stops being useful, and starts being both drudgery and a waste of effort. I can't detect when that point is crossed, and I have never heard it discussed. It is frustrating, because it turns a useful activity into a wasteful activity."

We are seeking that point, the one at which useful work becomes wasteful. That is the second art.

Barely sufficient

I don't think I need to give examples of overly heavy or overly light methodologies. Most people have seen or heard enough of these.

"Just-barely-too-light" methodologies, on the other hand, are hard to find, and very informative. They are the ones that help us understand what barely sufficient means.

Two such project stories are given earlier in the book: "Just Never Documentation" in Chapter 1, and "Sticking Thoughts on the Wall" in Chapter 3. In each, an otherwise well-run project ran below the level of sufficiency at a key moment.

Just Never Documentation (Recapped)

This team followed all of the XP practices, and delivered software in a timely manner to a receptive customer. At the end of several years, the sponsoring executives slowed and eventually stopped new development. Once the team members dispersed, there was no archived documentation on the system, and no team of people conversant with its structure. The formerly sufficient oral culture was now insufficient.

In this story, the team reached the first goal of the game, delivering a running system. They failed to set up for the next game, maintenance and evolution.

Using my own logic against me, one could argue that the documentation was exactly and perfectly sufficient for the needs of the company: The project was canceled, never to be restarted, and so the correct, minimal amount of documentation was zero! However, drawing on Naur's "programming as theory building," we can see that the team had successfully built up their own "theory" during the creation of the software, but they left insufficient tracks for the next team to benefit from the lessons they had learned.

Sticking Thoughts on the Wall (Recapped)

The analysts could not keep track of the domain in their heads, it was so complex. However, they had just switched from a heavy process to XP, and thought they were forbidden from producing any paperwork. As the months went by, they found it increasingly hard to decide what to develop next, and to determine the implications of their decisions. They were running below the threshold of sufficiency for their portion of the game. Rather than less, they needed more documentation to make their project work.

They eventually recognized their situation, and started inventing information holders so that their communications would reach sufficiency.

What we should see is that "insufficiency" lies, not in the methodology, but in the fit between the methodology and the project as ecosystem. What is barely sufficient for one team may be overly sufficient or insufficient for another. Insufficiency occurs when team members do not communicate well enough for other team members to carry out their work.

The ideal quantity, "barely sufficient," varies by time and place within any one project. The same methodology may be overly sufficient at one moment on a project and insufficient at another moment.

That second art mentioned above is finding the point of "barely sufficient," and then finding it again when it moves.

Recommendations for Documentation

This leads us to a set of recommendations:

Don't ask for requirements to be perfect, design documents to be up-to-date with the code, project plan to match the state of the project.

Ask, instead, that the requirements gatherers capture just enough to communicate with the designers.

Ask them to replace typing with faster communications media where possible, including visits in person or short video clips.

If the designers happen all to be expert and sitting close by each other, ask to dispense with design documentation beyond whiteboard sketches, and then capture the whiteboard drawings with photos or printing whiteboards.

Bear in mind that there will be other people coming after this design team, people who will, indeed, need more design documentation.

Run that as a parallel and resource-competing thread of the project, instead of forcing it into the linear path of the project's development process.

Be as inventive as possible about ways to reach the two goals adequately, dodging the impracticalities of being perfect.

Find (using exaggerated adjectives for a moment) the lightest, sloppiest methodology possible for the situation. Make sure it is just rigorous enough that the communication actually is sufficient.

Agile

Agile implies being effective and maneuverable. An agile process is both light and sufficient. The lightness is a means of staying maneuverable. The sufficiency is a matter of staying in the game.

The question for using agile methodologies is not, "Can an agile methodology be used in this situation?" but "How can we remain agile in this situation?"

A 40-person team won't be as agile as a six-person colocated team. However, each can maximize its use of the agile methodology principles, and run as light and fast as they can creatively make their circumstances allow. The 40-person team will use a heavier-agile methodology, the six-person team will use a lighter-agile one. Each team will focus on communications, community, frequent wins and feedback.

If they are paying attention, they will reflect periodically about the fit of their methodology to their ecology, and keep finding where the point "barely sufficient" has moved itself to.

Sweet Spots

Part of getting to agile is identifying the sweet spots of effective software development and moving the project as close as possible to those sweet spots.

A team that can arrange to land on any of those sweet spots gets to take advantage of some extra efficient mechanism. To the extent the team can't arrange to land in a sweet spot, it must use less efficient mechanisms. At that point, the team should think creatively to see how to get to the sweet spot, and to deal with not being there.

Here is a selection of five sweet spots:

Two to eight people in one room

Information moves the fastest in this sweet spot. The people ask each other questions without overly raising their voices. They are aware of when the other people are available to answer questions. They overhear relevant conversations without pausing their work. They keep the design ideas and project plan on the board, in ready sight.

People repeatedly tell me that while the environment can get noisy, they have never been on a more effective project than when their small team sat in the same room.

On leaving this sweet spot, the cost of moving information goes up very fast. Every doorway, corner and elevator multiplies that cost.

The story, "e-Presence and e-Awareness" (Chapter 3), tells of one team not being able to land in this sweet spot. They used web cams on their workstations to get some of the presence and awareness of sitting in the same room. They used chat boxes to get answers to the very many small questions that constantly arise. They were creative in mimicking the sweet spot in an otherwise unsweet situation.

On-site usage experts

Having a usage expert available at all times means that the feedback time from imagined to evaluated solution is as short as possible, often just minutes to a few hours.

Such rapid feedback means that the development team grows a deeper understanding of the needs and habits of the users, and starts making fewer mistakes coming up with new ideas. They try more ideas, making for a better final product. With a good sense of collaboration, the programmers will test the usage experts' ideas and offer counter-proposals. This will sharpen the customers' own ideas for how the new system should look.

The cost of missing this sweet spot is a lowered probability of making a really useable product, and a much higher cost for running all the experiments.

There are many alternative, if less effective, mechanisms you can use when you can't land on this sweet spot. They have been well documented over the years: weekly interview sessions with the users; ethnographic studies of the user community; surveys; friendly alpha-test groups. There are certainly others.

Missing this sweet spot does not excuse you from getting good user feedback. It just means you have to work harder for it.

One-Month increments

There is no substitute for rapid feedback, both on the product and on the development process itself. Incremental development is perfect for providing feedback points. Short increments help both the requirements and the process itself get repaired quickly. The question is, how long should the delivery increments be?

The correct answer varies, but project teams I have interviewed vote for 1-3 months, with a possible reduction to two weeks and a possible extension to four months.

It seems that people are able to focus their efforts for about three months, but not much longer. People tell me that with a longer increment period, they tend to get distracted and lose intensity and drive. In addition, increments provide a team with chances to repair their process. The longer the increment, the longer between such repair points.

If this were the only consideration, then the ideal increment period might be one week. However, there is a cost to deploying the product at the end of an increment.

I place the sweet spot at around one month, but have seen successful use of two or three months.

If the team cannot deliver to an end user every few months, for some reason, it should prepare a fully built increment in that period, and get it ready for delivery, pretending, if necessary, that the sponsor will suddenly demand its delivery. The point of working this way is to exercise every part of the development process, and to improve all parts of the process every few months.

Fully automated regression tests

Fully automated regression tests (unit or functional tests, or both) bring two advantages:

The developers can revise the code and retest it at the push of a button. People who have such tests report that they freely replace and improve awkward modules, knowing that the tests will help keep them from introducing subtle bugs.

People report that they relax better on the weekends when they have automated regression tests. They run the tests every Monday morning, and discover if someone has changed their system out from under them.

In other words, automated regression tests improve both the system design quality and the programmers' quality of life.

There are some parts of the system (and some systems) that are difficult to create automated tests for.

One of those is the graphical user interface. Experienced developers know this, and allocate special effort to minimize the portions of the system not amenable to automated regression tests.

When the system itself does not have automated regression tests, experienced programmers find ways to create automated tests for their own portion of the system.
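As a minimal sketch of what "retest at the push of a button" might look like for one's own portion of a system, assume Python's standard unittest module and a hypothetical invoice_total_cents function. Neither the function nor the invoice domain comes from the projects described here; they are illustrative only. The business rule is kept out of the user interface so that the tests can reach it directly.

```python
import unittest


def invoice_total_cents(line_items, tax_rate):
    """Hypothetical business rule, kept separate from any GUI code.

    line_items is a list of (quantity, unit_price_in_cents) pairs.
    Returns the tax-inclusive total in cents, rounded to a whole cent.
    """
    subtotal = sum(quantity * unit_price for quantity, unit_price in line_items)
    return round(subtotal * (1 + tax_rate))


class InvoiceTotalRegressionTest(unittest.TestCase):
    """Pins down current behavior so the function can be rewritten safely."""

    def test_empty_invoice_is_zero(self):
        self.assertEqual(invoice_total_cents([], 0.25), 0)

    def test_tax_is_applied_to_subtotal(self):
        # 2 * 1000 + 1 * 500 = 2500 cents; 25% tax gives 3125 cents.
        self.assertEqual(invoice_total_cents([(2, 1000), (1, 500)], 0.25), 3125)

    def test_zero_tax_leaves_subtotal_unchanged(self):
        self.assertEqual(invoice_total_cents([(3, 200)], 0.0), 600)


def run_regression_suite():
    """The 'push of a button': run every test and report overall success."""
    loader = unittest.defaultTestLoader
    suite = loader.loadTestsFromTestCase(InvoiceTotalRegressionTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    run_regression_suite()
```

A developer who replaces the body of invoice_total_cents with a cleaner implementation can rerun run_regression_suite() and see at once whether the observable behavior has changed.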

Experienced developers

In an ideal situation, the sweet spot, the team consists of only experienced developers. Teams like this that I know report much different, and better, results compared with the average, mixed team.

Since good, experienced developers may be two to ten times as effective as their colleagues, it would be possible to shrink the number of developers drastically if the team consists entirely of experienced developers.

On project Winifred, we estimated before and after the project that six good Smalltalk programmers could develop the system in the needed timeframe. Not being able to get six good Smalltalk programmers at that time, we used 24 programmers. The four experienced ones built most of the hard parts of the system, and spent much of their time helping the inexperienced ones.

If you can't land in this sweet spot, consider bringing in a half-time or full-time trainer or mentor to increase the abilities of the inexperienced people.

The Trouble with Virtual Teams

"Virtual" is a euphemism meaning "not sitting together." With the current popularity of this phrase, project sponsors excuse themselves for imposing enormous communication barriers on their teams.

We have seen the damage caused to a project by having people sit apart. The speed of development is related to the time and energy cost per idea transfer, with large increases in transfer cost as the distance between people increases, and large lost opportunity costs when some key question does not get asked. Splitting up the team is just asking for added project costs.

I categorize geographically distributed teams into three sorts, some of them more damaging than others. My terms for them are multi-site, offshore, and distributed development.

Multi-site Development

Multi-site is when a larger team works in relatively few locations, each location contains a complete development group, and the groups are developing fairly decoupled subsystems.

Multi-site development has been performed successfully for decades.

The key in multi-site development is to have full and competent teams in each location, and to make sure that the leaders in each location meet often enough to share their vision and understanding. Although many things can go wrong in multi-site development, it has been demonstrated to work many times, and there are fairly standard rules about getting it to work, unlike the other two virtual team models.

Offshore development

Offshore development is when "designers" in one location send specifications and tests to "programmers" in another location, usually in another country.

Since the offshore location lacks architects, designers and testers, this is quite different from multi-site development.

Here's how offshore development looks, using the words of cooperative games and convection currents.

The designers at the one site have to communicate their ideas to people having a different vocabulary, sitting several time zones away, over a thin communications channel. The programmers need a thousand questions answered. When they find mistakes in the design, they have to do three expensive things: first, wait until the next phone or video meeting; second, convey their observations; and third, convince the designers of the possible mistake in the design. The cost in erg-seconds per meme is staggering, the delays enormous.

Testing Off-shore Coding

One designer told me that his team had to specify the program to the level of writing the code itself, and then had to write tests to check that the programmers had correctly implemented every line they had written. The designers did all the paperwork they considered unpleasant, without the reward of being able to do the programming. In the time they spent specifying and testing, they could have written the code themselves, and they would have been able to discover their design mistakes much faster.

I have not been able to find methodologically successful offshore development projects. They fail the third test: The people I have interviewed have vowed not to do it again.

Fortunately, some offshore software houses are converting their projects into something more like multi-site development, with architects, designers, programmers and testers at the programming location. While the communications line is still long and thin, they can at least gain some of the feedback and communication advantages of multi-site development.

Distributed development

Distributed development is when a team is spread across relatively many locations with relatively few, often only one or two, people per location.

Distributed development is becoming more commonplace, but it is not becoming more effective. The cost of transferring ideas is great, and the lost opportunity costs of undetected questions greater. The distributed development model works when it mimics multi-site development, with meaningful subteams of one or two people, where each person's assignment is clear and contained.

However, the following is more common:

Criss-Crossed Distribution

A company was developing four related products in four locations, each product having multiple subsystems.

A sweet spot would be to have all systems of one product developed at the same location, or one subsystem for all products. With either of these, the people would be physically proximate to the people they needed to exchange information with. Instead, the dozens of people involved were arranged so that people working in the same city worked on different subsystems of different products. They were surrounded by people whose work had little to do with theirs, and separated from those with whom they needed to communicate!

Occasionally, people tell of developing software effectively with someone at a different location. What this tells me is that there is something new to discover: What permits these people to communicate so well over such a thin communications line? Is it just a lucky alignment of their personalities or thinking styles? Have they constructed a small multi-site model? Or are they drawing on something that we haven't learned to name yet?

Successful Distributed Development

I spent an evening talking with a couple of people who were successfully using four or five people who never met as a group.

They said that besides partitioning the problem carefully, they spent a lot of time on the phone, calling each person multiple times each day.

In addition to those obvious tactics, the team coordinator worked particularly hard to keep trust and amicability levels very high. She visited each developer every few weeks and made sure that they found her visits helpful (not blame sessions).

This coordinator was interested in replicating their development model. We concluded, by the end of the evening, that she would need to find another development coordinator with a similar personal talent for developing trust and amicability.

Two aspects of their development struck me:

Their attention to building trust among themselves

The vast amount of energy they invested into communication on a daily basis, to achieve opportunistic learning, trust and feedback

Open-Source Development

Open source development, although similar in appearance to distributed development, differs in its philosophical, economic, and team structure models. In contrast to the resource-constrained cooperative game most software development projects play, an open-source project is playing a non-resource-constrained cooperative game.

An industrial project aims to reach its goal in a given time frame with a given amount of money. The constraints of money and time limit how many people can work on it, and for how long. In these games we hear three phrases:

"Finish it before the market window closes!"

"Your job is to make the trade-off between quality and development time!"

"Ship it!"

An open-source development project, on the other hand, runs with the idea that with enough eyes, minds, fingers and time, really good designs and really good quality code will show up. There are, in principle, an unlimited number of people interested in contributing, and no particular market window to hit. The project has its own life and existence. Each person improves the system where it is weak, at whatever rate that time and energy indicate.

The reward structure is also different, being based on intrinsic, as opposed to external rewards (see Chapter 2). People develop open-source code for pleasure, as service to a community they care about, and for peer recognition. The motivational model is discussed at length in "Homesteading the noosphere" (?? URL).

A goal for an industrial developer would be to become the next Bill Gates. The corresponding goal for an open-source developer would be to become the next Linus Torvalds.

Finally, the team structure of open-source development is different. Anyone may contribute code, but there is a designated gatekeeper protecting the center, the code base. That gatekeeper needn't be the best programmer, but needs to be a good programmer with good people skills and a very good eye for quality. Over time, the few, best contributors come to occupy the center, becoming intellectual owners of the design. Around these few people are an unlimited number of people who contribute patches and code suggestions, detect and report bugs, and who write documentation.

It has been suggested, and I find it plausible, that one of the key aspects of open-source development is that all communication is visible to anyone. I find this plausible using the following comparison with industrial projects:

On an industrial project with a colocated team, trouble comes if the team evolves into a society with an upper and a lower class. If analysts sit on one side of the building and programmers sit on the opposite side, an "us-them" separation easily builds that causes hostility between the groups (I almost wrote "factions"). In a well-balanced team, however, there is only "us", there is not an "us-them" sensation. A key role in the presence or absence of this split is played by the nature of the background chit-chat within the group. When the seating forms enclaves of common specialists (I almost wrote "ghettos"), that background chit-chat almost inevitably contains comments about "them."

In open-source development, the equivalent situation would be that one sub-group, the colocated one, is thought to be having a set of discussions that the others are not able to see. The distributed people would find it easy to develop a sense of being second-class citizens, cut away from the heart of the community, and cut off from relevant and interesting conversations.

When all communication is online, visible to everyone, there is no natural place for rumors to grow in hiding, and once again there is only "us."

I would like one day to see or do a decent investigation of this aspect of open-source development.

If you have been reading this book from the beginning, you should still see one mystery at this point.

Every person is different, every project is different, and a project differs internally across subject areas, subsystems, subteams and time. Each situation calls for a different methodology (set of group conventions).

The mystery is how to construct a different methodology for each situation, without spending so much time designing the methodology that the team doesn't deliver software. You also don't want everyone on your project to have to grow into a methodology expert.

I hope you can guess what's coming.

Becoming Self-Adapting

Bother to Reflect

The trick to fitting your conventions to your ever-changing needs is to bother to think about what you are doing.

Individually and as a team, do two things:

Bother to think about what you are doing.

Have the team spend one hour together every other week reflecting on its working habits.

If you do these two things, you can make your methodology effective, agile, and tailored to your situation. If you can't do that, well ... you will stay where you are.

Although the magical ingredient is remarkably simple, it is quite difficult to pull off, given people's funky nature. People usually resist having the meeting. In some organizations, the distrust level is so high that people will not speak at such a get-together.

There is only one thing to do:

Do it once, post the results, and then see if you can do it again.

You may need to find someone within your organization who has the personal skills to make the meeting work. You may need to go outside your organization for the first few times, to get someone with the right personal skills, and whom everyone in the room can accept.

A Methodology-Growing Technique

Here is a small technique for on-the-fly methodology construction and tuning. I present it as what to do at five different times:

Right now

At the start of the project

In the middle of the first increment

Between each increment

In the middle of subsequent increments

After that, I describe a sample one-hour reflection workshop.

Right Now

Discover the strengths and weaknesses of your organization through short project interviews.

You can do this at the start of the project, but you can also do this right away, regardless of where you are in any project. The information will do you good in all cases, and you can start to build your own project interview collection.

Ideally, have several people interview several other people each, and start your collection with six or ten interview reports. It is useful but not critical to interview more than one person on one project. For example, you might talk to any two of the following: the project manager, the team lead, a user interface designer, a programmer. Their different perspectives on the same project will prove informative. Even more informative, however, will be the common responses across multiple projects.

The important thing to keep in mind is that whatever the interviewee says is relevant. During an interview, I don't speak my own opinions on any matter, but use my judgement to select a next question to ask.

In my interviews, I follow a particular ritual:

I ask to see one sample of each work product produced.

Looking at these, I detect how much bureaucracy was likely to be on the project, and see what questions I should ask about the work products.

I look for duplicated work, places where the work products might have been difficult to keep up to date.

I ask whether iterative development was in use, and if so, how the documents were updated in following iterations.

I look, in particular, for ways in which informal communication was used to patch over inconsistencies in the paperwork.

Work Product Redundancy

On one project, the team lead showed me 23 work products.

I noticed a fair degree of overlap across them, so I asked if the later ones were generated by tools from the earlier ones. The team lead said, no, the people had to reenter them from scratch. So I followed up by asking how the people felt about this. He said, they really hated it, but he made them do it anyway.

After looking at the work samples, I ask for a short history of the project: date started, staff changes (growing and shrinking), team structure, the emotionally high and low points of the project life. I do this mostly to calibrate the size and type of the project, and to detect where there may be interesting other questions to ask.

Discovering Incremental Development

That is how I learned the fascinating story about the project I call "Ingrid" (Cockburn 1998).

During just the project inception phase, the team had hit most of the failure indicators I knew at the time. That their first four-month increment was a catastrophe came as no surprise to me. I even wondered why I had traveled so far just to hear about such an obvious failure. The surprise was in what they did after that.

After that first increment, they changed almost everything about the project. I had never seen that done before. Four months later, they rebuilt the project again - not as drastically, but enough to make a difference.

Every four months, they delivered running, tested software, and then sat down to examine what they were doing, how to get better (just as I am asking you to do).

The most amazing thing was that they didn't just talk about changing their way of working, they actually changed their way of working.

The value of this interview lay, not in our discussing deliverables, but in my hearing their phenomenal determination to succeed, their willingness to change, every four months, whatever was necessary to get the success they wanted.

After hearing the history of the project and listening for interesting threads of inquiry to pursue, I ask, "What were the key things you did wrong, that you wouldn't want to repeat on your next project?"

I write down whatever they say, and I fish around for related issues to investigate.

After hearing the things not to repeat, I ask, "What were the key things you did right, that you would certainly like to preserve in your next project?" I write down whatever they say. If the person says, "Well, the Thursday afternoon volleyball games were really good," I write that down.

Getting Seriously Drunk Together

Once when I asked this question (in Scandinavia), a person said, "Getting seriously drunk together."

We went out and practiced that night, and I did, indeed, see improved communication between the people the next day.

In response to this question, people have named everything from where they sit, to having food in the refrigerator, to social activities, communication channels, software tools, software architecture and domain modeling. Whatever you hear, write it down.

I revisit the issues in the conversation by asking,

"What are your priorities with respect to the things you liked on the project - which is most critical to keep, and which most negotiable?"

I write those down.

It is useful to ask at this point, "Was there anything that surprised you about the project?"

Finally, I ask whether there is anything else I should hear about, and see where that goes. At one company, we constructed a two-page interview template on which to write the results, so we could exchange them easily. That template contained the following sections:

1. Project name, job of person interviewed (the interviewee stays anonymous)

2. Project data (start / end dates, maximum staff, target domain, technology in use).

3. Project history

4. Did wrong / would not repeat

5. Did right / would preserve

6. Priorities

7. Other
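The template sections above can be sketched as a small record type, which makes it easier to exchange filled-in interviews and scan them for recurring notes. This is only a sketch: the field names, the `common_themes` helper, and the idea of recording each note as a short normalized phrase are assumptions for illustration, not part of the original template.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ProjectInterview:
    """One filled-in interview template (field names are illustrative)."""
    project_name: str
    interviewee_job: str  # the interviewee stays anonymous
    project_data: dict = field(default_factory=dict)  # dates, staff, domain, technology
    history: str = ""
    did_wrong: list = field(default_factory=list)   # would not repeat
    did_right: list = field(default_factory=list)   # would preserve
    priorities: list = field(default_factory=list)
    other: str = ""


def common_themes(interviews, min_interviews=2):
    """Return notes that show up in at least `min_interviews` interviews.

    Assumes notes were written as short, consistently worded phrases;
    free-form notes would still need a human reader to spot the theme.
    """
    counts = Counter()
    for interview in interviews:
        # Count each note once per interview, whichever list it appeared in.
        notes = set(interview.did_wrong) | set(interview.did_right)
        counts.update(notes)
    return sorted(note for note, n in counts.items() if n >= min_interviews)
```

A helper like this only surfaces candidates; reading the notes together, as described next, is still what reveals the theme.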

Do this exercise, collect the filled-in templates, look them over. Depending on your situation, you might have each interviewer talk about the interview, or you may just all read the notes.

Look for common themes across the projects.

The Communication Theme

At the company where we created the template, one theme showed up across the projects:

"When we have good communications with the customer sponsors and within the team, we have a good outcome. When we don't have good communications, we don't have good results."

Although that may seem trivially true, it seldom gets written down and attended to. In fact, within a year of that result, the following story occurred at that company:

The Communication Theme in Action

Mine was one of three projects going on at the same time, each of which involved small teams with people sitting in several cities.

As you would expect, I spent a great deal of energy on communications with the sponsors and programmers.

The three projects completed at about the same time. The director of development asked me what the difference could be, that the project I was on was successful, while the other two that ran at the same time were unsuccessful.

Recalling the project interviews, I suggested it might have something to do with the quality of communication between the development and sponsoring groups, and within the team.

He said this was an interesting idea. Both the programmers and the sponsors on the other projects had reported problems in communicating with their project leads. Both programmers and sponsors had felt isolated. The sponsors of my project, on the other hand, had been very happy with the communications.

The theme was different in another company. Here is what one interviewee told me:

The Cultural Gap Theme

Our user interface designers all have Ph.D.s in psychology and sit together several floors above the programmers. There is an educational, a cultural, and a physical gap between them and the programmers. We have some difficulty due to the different approach of these people, and to the distance we sit from them.

This company will need extra mechanisms to increase contact between those two groups of people and extra reviews of their work.

The point of these stories is to highlight that what you learn in the interviews is likely to be relevant on your next project. Pay attention to the warnings that come in the project interviews.

At the Start of the Project

Expect to do some tailoring to the corporate methodology standard. This will be needed whether the base methodology is ISO9001, XP, RUP, Crystal, or a local brew.

Stage 1: Base Methodology to be Tuned

If possible, have two people work together on creating the base methodology proposal for the project. It will go faster, they will spot each other's errors, and they will help each other come up with ideas.

They have four steps to go through:

Determine how many people are going to be coordinated, and their geographic distribution (see the grid in Figure 5-21). Decide what level of correctness is expected of this software, what degree of damage it could cause. Determine and write down the priorities for the project: time to market, correctness, or whatever they may be.

Using the methodology design principles from Chapter 4, select the basic parameters for the methodology: how tight the standards need to be, the extent of documentation needed, the ceremony in the reviews, the increment length (the time period until running code is delivered to real, if sample, users). If the increment length is longer than four months, they will have to find some way to create a tested, running version of the system every four months or less, to simulate having real increments.

Select a base for the methodology, one not too different from the way they would like to work.

Recall that it is easier to modify an existing one than to invent one from scratch. They may choose to start from the corporate standard, the published Unified Process, XP, Crystal Clear, Crystal Orange, or the last project's methodology.

Boil the methodology down to the basic work flow involved - who hands what to whom - and the conventions they think the group should agree to.

These steps could take between a day and a few days for a small or medium-sized project. If it looks like they will spend more than a week on it, then get one or two more people from the project team involved and drive it to completion in just two more days.

Stage 2: The Starter Methodology

Hold a team meeting to discuss the base methodology's work flow and conventions, and adjust it to become the starter methodology. For larger projects, where it is impractical to gather the whole team, gather the key representatives of each job role.

The purpose of the meeting is to

- Catch embellishments

- Look for ways to streamline the process and ways to communicate with less cost

- Detect other issues that did not get spotted in the base methodology draft

Consider these questions in that meeting:

- How long are the iterations and increments to be (and what is the difference)?

- Where will people sit?

- What can be done to keep communication and morale high?

- What work products and reviews will be needed, at what ceremony levels?

- Which standards for tools, drawings, tests, and code are mandatory, which just recommended?

- How will time reporting be done?

- What other conventions should be set initially, and which might be evolved over time?

An important agenda item for the meeting is selecting a way for the team to detect morale and communication problems.

The meeting results will include:

- Basic work flow

- Hand-off criteria between roles, particularly including overlapped development and declaration milestones

- Draft standards or conventions to be followed

- Peculiarities of communication to be practiced

This is your starter methodology. The meeting could take half a day, but should not exceed one day.

In the Middle of the First Increment

Whether your increment length is two weeks or three months, run a small interview with the team members, individually or in a group meeting, at approximately the mid-point of the increment. Allow one to three hours.

The single question for resolution is, "Are we going to make it, working the way we are working?"

In the first increment, you can't afford to change your group's whole way of working unless it is catastrophically broken. What you are looking for is to get safely to your first delivery. If the starter methodology will hold up that long, you will have more time, more insight and a better moment to adjust it, after you have successfully made your first delivery.

Therefore, the purpose of this interview or meeting is to detect whether something is critically wrong and the first delivery will fail.

If you discover that the team's way of working isn't working, first consider reducing the scope of the first delivery.

Most teams overstate how much they can deliver in the first increment - to me, this is simply normal, and not a fault of the methodology. It is a result of overambitious management driving the schedule unrealistically, and overly optimistic developers, who overlook the learning to be done, the meetings to be held, the normal bugs they put into the code. It comes from underestimating the learning curve of new technology and new teammates. Overstating how much can be delivered in the first increment is actually quite normal.

Therefore, your first approach is to reduce scope.

You may, however, discover that reducing scope will not be sufficient. You may discover that the requirements are incomprehensible to the programmers, or that the architects won't get their glorious architecture specification done in time.

If this is the case, then you need to react quickly and find a new way of working, which, combined with drastically reduced functional scope, will allow you to meet that first delivery deadline.

You may introduce overlapped development, or put people physically closer together, cut down the ambition level for the initial architecture, or make greater use of informal communication channels. You may have to make emergency staff changes, or introduce emergency training, consulting or experienced contractors.

Your goal is to deliver something, some small, running, tested code in the first increment. This is a critical success factor on a project (Cockburn 1998). Once you deliver this first release, you will have time to pause and consider what is happening.

After Each Increment

Hold a team reflection workshop after each increment.

Bothering to reflect is a critical success factor in evolving a successful methodology, just as incremental development is a critical success factor in delivering software.

The length of this reflection workshop may vary from company to company or country to country. Americans like to be always busy, short of money and on the run. I see Americans allocating only two to four hours for this workshop. In other parts of the world, the workshop may be given more time.

I once participated in a two-day offsite version that combined reflection, team-building, and planning for the next increment. It took place in Europe, not surprisingly.

The dominant reason for delaying this workshop until after the first increment is that you can only properly evaluate the effects of each element in your methodology after you have delivered running, tested software to a user. Only then can you see what was overdone, and what was underdone.

There is a second reason for holding the workshop at the end of the increment: People are quite often exhausted after getting the software out the door. This meeting provides a chance to breathe and reflect. Done regularly, it becomes part of the project rhythm. After each increment, the staff benefit from a short shifting of mental and social gears.

Whether you take two hours or two days, the two questions you want to address are: "What did we learn?" "What can we do better?"

The responses may cross every boundary of the project, from management intervention, to timecards, group communication, seating, project reviews, standards, and team composition.

Very often, teams tighten standards after the first increment, get more training, streamline the work flow, increase testing, and reorganize the teaming structures.

The changes will be much smaller after the second and subsequent increments, since the team has already delivered several times.

In the Middle of the Subsequent Increments

After the first increment, the team has established one (barely) successful way of working. This is a methodology design to fall back on, if needed.

Having that as a fallback plan, you can be much more adventuresome in suggesting changes in the mid-increment meetings you hold in the second and later increments.

In those mid-increment meetings, and particularly after the second successful delivery, look to invent new and better ways of delivering.

See if you can do any of the following:

- Cut out entire sections of the methodology.

- Do more concurrent development.

- Use informal communications more to bind the project information.

- Introduce new and better testing frameworks.

- Introduce new and better test-writing habits.

- Get closer collaboration between the key groups in the project, between domain and usage experts, programmers, testers, training people, the customer care center, and the people doing field repair.

You might use interviews or a reflection workshop for these mid-increment adjustments. By this time, your team will have had quite a bit of practice with these meetings, and will have an idea of how to behave.

You may omit the mid-increment workshops if the project is using increments three weeks or shorter.

Why bother with mid-increment reviews, when the project is already delivering, and you already have post-increment reviews in place?

In the middle of the development cycle, those things that are not working properly are right in people's faces. The details of the problems will not be as clear four or six weeks later, at the post-increment meeting. Therefore, you can pick up more details in the middle of the increment, get feedback immediately about the idea, and try out a new idea the same day, instead of in several weeks or months.

What if a new idea doesn't work out?

Sometimes the team tries a new idea on the second or third increment, and finds that the idea simply does not work well.

Mid-Project Team Structure Changes

On one project, we went through three different team structures during the third increment.

A short way into the third increment, we decided that the team structure we had been using was weak. So we chose a new team structure to use on increment three.

It was catastrophically bad. We knew within two weeks that we had to change it immediately. Rather than revert to the original, awkward but successful team structure, we created a new suggestion and tried it out right away.

It turned out to be successful, and we kept it for the duration of the project.

In inventing new ways of working in these later increments, you create the opportunity to significantly improve your methodology. This is an opportunity not to be missed.

The Post-Project Review

Given the mid- and post-increment reflection workshops, I place less emphasis on having a post-project review. I feel that the time to reflect is during the project, when the reflection and discussion will do the project some good. After the project, it is too late.

Usually, I find that teams that run post-project reviews did not bother to reflect during the project, and suddenly want to know how to do better for the next project. If you find yourself in such a meeting, put forward the suggestion that next time, you want to use incremental development, and hold post-increment reviews instead.

Nonetheless, it may be that the post-project review is the only time you get to make statements regarding the staffing and project management used. If this is the case, I suggest getting and using the book Project Retrospectives (Kerth 2001), which describes running a two-day post-project review.

If you hold a post-project review, think about who is going to make use of the information, and what they can really use, as they run their next project. You might draft a short (two-page) set of notes for the next project team to read, outlining the lessons learned from this project.

Of course, you might write yourself a one-page lessons learned reminder after each of your own increments, as a normal outcome of your reflection workshop.

A Reflection Workshop Technique

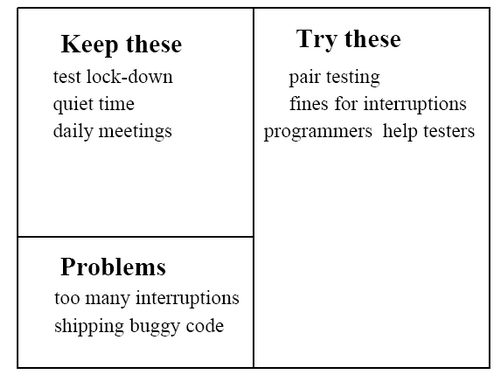

The tangible output of a mid- or post-increment reflection workshop is a flipchart that gets posted on the wall in some prominently visible place and seen by the project participants as they go about their business.

I like to write directly onto the flipchart that will get posted. It is the one that contains the group memories. Other people like to copy the list from the scratched- and scribbled-on flipchart to a fresh sheet for posting. The people who created the one shown in Figure 3-10 decided to use sticky notes instead of writing on the flipchart.

A Sample Reflection Workshop Technique

There are several different formats for running the workshop, and for sharing the results (of course). I tend to run the simplest version I can think of. It goes approximately like this:

A Reflection Workshop

Hi, welcome to this workshop to reflect on how we can get better at producing our software. The purpose of this meeting is not to point fingers, to blame people, or to escape blame. It is to announce where we are getting stuck, and nominate ideas for getting past that stuckness.

The outcome of this workshop will be a single flipchart on which we'll write the ideas we intend to try out during the next increment, the things we want to keep in mind as we work. Let's break this flipchart into three pieces. On the left side, let's capture the things we are doing well, that we want to make sure we don't lose in the next increment.

On the right side, let's capture the new things we want to focus on doing.

On the supposition that the list of what we're doing right will be the shorter of the two, let's write down the major problems we're fighting with, halfway down the left side here (see Figure 6-1).

Let's start with what we're doing right. Is there anything that we're doing right, that we want to make sure we keep around for the next increment?

Figure 6-1. Sample poster from reflection workshop.

At this point some discussion ensues. It is possible that someone starts naming problems, instead of good things. If they are significant, write them down under the Problems section. Allow some time for people to reflect and discuss.

Eventually, move the discussion along:

All right, what are some of the key problems we had this last time, and what can we do to improve things?

Write as little as possible in the Problems section: write as few words as possible, and merge problems together if possible. The point of this poster is to post suggestions for improvement, not to focus on problems.

Collect the suggestions. If the list gets very long, question how many new practices or habits the group really wants to take on during this next period. It is sometimes depressing to see an enormous list of reminders. It is more effective to have a shorter list of things to focus on. Writing on a single flipchart with a fat flipchart pen is a nice, self-limiting way of handling this.

Periodically, see if someone has thought of more good things the team is doing that should be kept.

Toward the end of the workshop, review the list. See if people really are in agreement to try the new ideas, or if they were just being quiet.

After the workshop, post the list where everyone can see it.

At the start of the next workshop, you might bring in the poster from the previous workshop, and start by asking whether this way of writing and posting the workshop outcome was effective, or what else you might try.

Holding this meeting every two to six weeks will allow your team to track its local and evolving culture, to create its own, agile methodology.

The Value of Reflection

The article on Shu-Ha-Ri excerpted in Chapter 2 continues with the following very relevant discussion of reflection:

"As you learn a technique, and as it asymptotically approaches your mental model of the technique as you see others practicing it, you can begin to reason about the technique. It seems the important questions to ask are:

1. How does this technique work?

2. Why does this technique work?

3. How is this technique related to other techniques that I am practicing?

4. What are the necessary preconditions and postconditions to effectively apply this technique in the combative situation? ...

As you develop a reasonable repertoire of techniques that you can perform correctly, you will need to expose yourself to as broad a range of practitioners as possible. As you watch others, you need to ask and answer at least three questions:

1. Which other practitioners do I respect and admire?

2. How is what they do different from what I do?

3. How can I change my practice (both mental model and attempts to correspond to it) to incorporate the differences that I think are most important? ...

The questions you need to ask yourself about a competition in your post mortems are:

1. Were you able to control the pace and actions of your opponents.

2. Were you able to keep calm and make your techniques effectively with an unhurried frame of mind.

3. Does your competition look like those of the practitioners you admire. ...

Throughout all of this, you must honestly evaluate the results of each 'test'. Cycle back to Shu through Ha and then Ri as you go down dead end paths."

I couldn't say it better.

And What Should I Do Tomorrow?

Consider "agile" as an attitude, not a formula. In that frame of mind, look at your current project and ask, "How can we, in this situation, work in an agile way?"

- Look for how far you are from the sweet spots in your development team. See how creative you can be in getting closer to, or simulating them.

- Look for where your team can lighten its methodology. Look for where it is not-yet sufficient.

- Perform one project interview as described. Get several people to perform one each, and share results. Find the common thread in your interview results.

- Hold a one-hour reflection workshop within your project. As you encounter difficulty in this, reflect on which aspects of people are showing up; compare them to the list I gave in Chapter 3. Look for antidotes and extend my list.

- Post the reflection workshop flipchart, and check how many people ever look at it.

- See what it takes to hold a second one. Learn how to get people to complain less and make more positive suggestions at these workshops.

- Develop yourself into a Level 2 methodology designer. Yes, it is part of your profession.
