Book: Code 2.0

Z-Theory

“So, like, it didn’t happen, Lessig. You said in 1999 that commerce and government would work together to build the perfectly regulable net. As I look through my spam-infested inbox, while my virus checker runs in the background, I wonder what you think now. Whatever was possible hasn’t happened. Doesn’t that show that you’re wrong?”

So wrote a friend to me as I began this project to update Code v1. And while I never actually said anything about when the change I was predicting would happen, there is something in the criticism. The theory of Code v1 is missing a part: Whatever incentives there are to push in small ways to the perfectly regulable Net, the theory doesn’t explain what would motivate the final push. What gets us over the tipping point?

The answer is not fully written, but its introduction was published this year. In May 2006, the Harvard Law Review gave Professor Jonathan Zittrain (hence “Z-theory”) 67 pages to explain “The Generative Internet.”[36] The article is brilliant; the book will be even better; and the argument is the missing piece in Code v1.

Much of The Generative Internet will be familiar to readers of this book. General-purpose computers plus an end-to-end network, Zittrain argues, have produced an extraordinarily innovative (“generative”) platform for invention. We celebrate the good stuff this platform has produced. But we (I especially) who so celebrate don’t pay enough attention to the bad. For the very same design that makes it possible for an Indian immigrant to invent HoTMaiL, or Stanford dropouts to create Google, also makes it possible for malcontents and worse to create viruses and worse. These sorts use the generative Internet to generate evil. And as Zittrain rightly observes, we’ve just begun to see the evil this malware will produce. Consider just a few of his examples:

• In 2003, in a test designed to measure the sophistication of spammers in finding “open relay” servers through which they could send their spam undetected, within 10 hours spammers had found the server. Within 66 hours they had sent more than 3.3 million messages to 229,468 people.[37]

• In 2004, the Sasser worm was able to compromise more than 500,000 computers — in just 3 days.[38] The year before, the Slammer worm infected 90 percent of a particular Microsoft server — in just 15 minutes.[39]

• In 2003, the SoBig.F e-mail virus accounted for almost 70 percent of the e-mails sent while it was spreading. More than 23.2 million messages were sent to AOL users alone.[40]

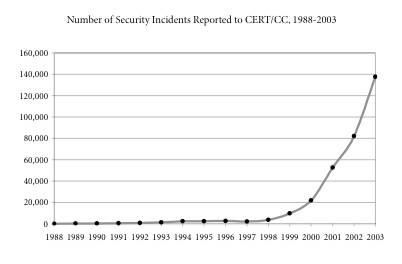

These are of course not isolated events. They are instead part of a growing pattern. As the U.S. Computer Emergency Readiness Team calculates, there has been an explosion of security incidents reported to CERT. Here is the graph Zittrain produced from the data:[41]

The graph ends in 2004 because CERT concluded that the incidents were so “commonplace and widespread as to be indistinguishable from one another.”[42]

That there is malware on the Internet isn’t surprising. That it is growing isn’t surprising either. What is surprising is that, so far at least, this malware has not been as destructive as it could be. Given the ability of malware authors to get their malicious code on many machines very quickly, why haven’t more tried to do real harm?

For example, imagine a worm that worked itself onto a million machines, and in a synchronized attack, simultaneously deleted the hard drive of all million machines. Zittrain’s point is not that this is easy, but rather, that it is just as difficult as the kind of worms that are already successfully spreading themselves everywhere. So why doesn’t one of the malicious code writers do real damage? What’s stopping cyber-Armageddon?

The answer is that there’s no good answer. And when there’s no good explanation for why something hasn’t happened yet, there’s good reason to worry that it will happen. And when this happens — when a malware author produces a really devastatingly destructive worm — that will trigger the political resolve to do what so far governments have not done: push to complete the work of transforming the Net into a regulable space.

This is the crucial (and once you see it, obvious) insight of Z-theory. Terror motivates radical change. Think about, for example, the changes in law enforcement (and the protection of civil rights) effected by the “Patriot Act.”[43] This massively extensive piece of legislation was enacted 45 days after the terror attacks on 9/11. But most of that bill had been written long before 9/11. The authors knew that until there was a serious terrorist attack, there would be insufficient political will to change law enforcement significantly. But once the trigger of 9/11 was pulled, radical change was possible.

The same will be true of the Internet. The malware we’ve seen so far has caused great damage. We’ve suffered this damage as annoyance rather than threat. But when the Internet’s equivalent of 9/11 happens — whether sponsored by “terrorists” or not — annoyance will mature into political will. And that political will will produce real change.

Zittrain’s aim is to prepare us for that change. His powerful and extensive analysis works through the trade-offs we could make as we change the Internet into something less generative. And while his analysis is worthy of a book of its own, I’ll let him write it. My goal in pointing to it here is to provide an outline to an answer that plugs the hole in the theory of Code v1. Code v1 described the means. Z-theory provides the motive.

There was an awful movie released in 1996 called Independence Day. The story is about an invasion by aliens. When the aliens first appear, many earthlings are eager to welcome them. For these idealists, there is no reason to assume hostility, and so a general joy spreads among the hopeful across the globe in reaction to what before had seemed just a dream: really cool alien life.

Soon after the aliens appear, however, and well into the celebration, the mood changes. Quite suddenly, Earth’s leaders realize that the intentions of these aliens are not at all friendly. Indeed, they are quite hostile. Within a very short time of this realization, Earth is captured. (Only Jeff Goldblum realizes what’s going on beforehand, but he always gets it first.)

My story here is similar (though I hope not as awful). We have been as welcoming and joyous about the Net as the earthlings were about the aliens in Independence Day; we have accepted its growth in our lives without questioning its final effect. But at some point, we too will come to see a potential threat. We will see that cyberspace does not guarantee its own freedom but instead carries an extraordinary potential for control. And then we will ask: How should we respond?

I have spent many pages making a point that some may find obvious. But I have found that, for some reason, the people for whom this point should be most important do not get it. Too many take this freedom as nature. Too many believe liberty will take care of itself. Too many miss how different architectures embed different values, and that only by selecting these different architectures — these different codes — can we establish and promote our values.

Now it should be apparent why I began this book with an account of the rediscovery of the role for self-government, or control, that has marked recent history in post-Communist Europe. Market forces encourage architectures of identity to facilitate online commerce. Government needs to do very little — indeed, nothing at all — to induce just this sort of development. The market forces are too powerful; the potential here is too great. If anything is certain, it is that an architecture of identity will develop on the Net — and thereby fundamentally transform its regulability.

But isn’t it clear that government should do something to make this architecture consistent with important public values? If commerce is going to define the emerging architectures of cyberspace, isn’t the role of government to ensure that those public values that are not in commerce’s interest are also built into the architecture?

Architecture is a kind of law: It determines what people can and cannot do. When commercial interests determine the architecture, they create a kind of privatized law. I am not against private enterprise; my strong presumption in most cases is to let the market produce. But isn’t it absolutely clear that there must be limits to this presumption? That public values are not exhausted by the sum of what IBM might desire? That what is good for America Online is not necessarily good for America?

Ordinarily, when we describe competing collections of values, and the choices we make among them, we call these choices “political.” They are choices about how the world will be ordered and about which values will be given precedence.

Choices among values, choices about regulation, about control, choices about the definition of spaces of freedom — all this is the stuff of politics. Code codifies values, and yet, oddly, most people speak as if code were just a question of engineering. Or as if code is best left to the market. Or best left unaddressed by government.

But these attitudes are mistaken. Politics is that process by which we collectively decide how we should live. That is not to say it is a space where we collectivize — a collective can choose a libertarian form of government. The point is not the substance of the choice. The point about politics is process. Politics is the process by which we reason about how things ought to be.

Two decades ago, in a powerful trilogy drawing together a movement in legal theory, Roberto Unger preached that “it’s all politics.”[44] He meant that we should not accept that any part of what defines the world is removed from politics — everything should be considered “up for grabs” and subject to reform.

Many believed Unger was arguing that we should put everything up for grabs all the time, that nothing should be certain or fixed, that everything should be in constant flux. But that is not what he meant.

His meaning was instead just this: That we should interrogate the necessities of any particular social order and ask whether they are in fact necessities, and we should demand that those necessities justify the powers that they order. As Bruce Ackerman puts it, we must ask of every exercise of power: Why?[45] Perhaps not exactly at the moment when the power is exercised, but sometime.

“Power,” in this account, is just another word for constraints that humans can do something about. Meteors crashing to earth are not “power” within the domain of “it’s all politics.” Where the meteor hits is not politics, though the consequences may well be. Where it hits, instead, is nothing we can do anything about.

But the architecture of cyberspace is power in this sense; how it is could be different. Politics is about how we decide, how that power is exercised, and by whom.

If code is law, then, as William Mitchell writes, “control of code is power”: “For citizens of cyberspace, . . . code . . . is becoming a crucial focus of political contest. Who shall write that software that increasingly structures our daily lives?”[46] As the world is now, code writers are increasingly lawmakers. They determine what the defaults of the Internet will be; whether privacy will be protected; the degree to which anonymity will be allowed; the extent to which access will be guaranteed. They are the ones who set its nature. Their decisions, now made in the interstices of how the Net is coded, define what the Net is.

How the code regulates, who the code writers are, and who controls the code writers — these are questions on which any practice of justice must focus in the age of cyberspace. The answers reveal how cyberspace is regulated. My claim in this part of the book is that cyberspace is regulated by its code, and that the code is changing. Its regulation is its code, and its code is changing.

We are entering an age when the power of regulation will be relocated to a structure whose properties and possibilities are fundamentally different. As I said about Russia at the start of this book, one form of power may be destroyed, but another is taking its place.

Our aim must be to understand this power and to ask whether it is properly exercised. As David Brin asks, “If we admire the Net, should not a burden of proof fall on those who would change the basic assumptions that brought it about in the first place?”[47]

These “basic assumptions” were grounded in liberty and openness. An invisible hand now threatens both. We need to understand how.

One example of the developing struggle over cyber freedoms is the still-not-free China. The Chinese government has taken an increasingly aggressive stand against behavior in cyberspace that violates real-space norms. Purveyors of porn get 10 years in jail. Critics of the government get the same. If this is the people’s republic, this is the people’s tough love.

To make these prosecutions possible, the Chinese need the help of network providers. And local law requires that network providers in China help. So story after story now reports major network providers — including Yahoo! and Microsoft — helping the government do the sort of stuff that would make our Constitution cringe.

The extremes are bad enough. But the more revealing example of the pattern I’m describing here is Google. Google is (rightly) famous for its fantastic search engine. Its brand has been built on the idea that no irrelevant factor controls its search results. Companies can buy search words, but their results are bracketed and separate from the main search results. The central search results — that part of the screen your eyes instinctively go to — are not to be tampered with.

Unless the company seeking to tamper with the results is China, Inc. For China, Google has promised to build a special routine.[48] Sites China wants to block won’t appear in the Google.CN search engine. No notice will be presented. No system will inform searchers that the search results they are reading have been filtered by Chinese censors. Instead, to the Chinese viewer, this will look like normal old Google. And because Google is so great, the Chinese government knows most will be driven to Google, even if Google filters what the government doesn’t want its people to have.

Here is the perfect dance of commerce with government. Google can build the technology the Chinese need to make China’s regulation more perfectly enabled, and China can extract that talent from Google by mandating it as a condition of being in China’s market.

The value of that market is thus worth more to Google than the value of its “neutral search” principle. Or at least, it better be, if this deal makes any sense.

My purpose here is not to criticize Google — or Microsoft, or Yahoo! These companies have stockholders; maximizing corporate value is their charge. Were I running any of these companies, I’m not sure I would have acted differently.

But that in the end is my point: Commerce has a purpose, and government can exploit that to its own end. It will, increasingly and more frequently, and when it does, the character of the Net will change.

Radically so.
