Book: Distributed Operating Systems

2.3.5. Buffered versus Unbuffered Primitives

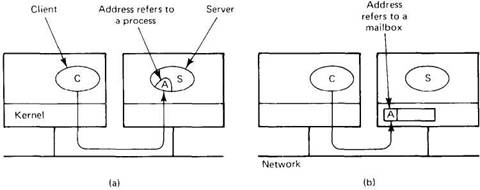

Just as system designers have a choice between blocking and nonblocking primitives, they also have a choice between buffered and unbuffered primitives. The primitives we have described so far are essentially unbuffered. This means that an address refers to a specific process, as in Fig. 2-9. A call receive(addr, &m) tells the kernel of the machine on which it is running that the calling process is listening to address addr and is prepared to receive one message sent to that address. A single message buffer, pointed to by m, is provided to hold the incoming message. When the message comes in, the receiving kernel copies it to the buffer and unblocks the receiving process. The use of an address to refer to a specific process is illustrated in Fig. 2-12(a).

Fig. 2-12. (a) Unbuffered message passing. (b) Buffered message passing.

This scheme works fine as long as the server calls receive before the client calls send. The call to receive is the mechanism that tells the server's kernel which address the server is using and where to put the incoming message. The problem arises when the send is done before the receive. How does the server's kernel know which of its processes (if any) is using the address in the newly arrived message, and how does it know where to copy the message? The answer is simple: it does not.
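The ordering problem can be made concrete with a toy model. The following is a minimal sketch, not the implementation of any real kernel: the class UnbufferedKernel and its methods are hypothetical names, and a Python list stands in for the single buffer pointed to by m.

```python
class UnbufferedKernel:
    """Toy model of a kernel offering an unbuffered receive (hypothetical names)."""

    def __init__(self):
        # address -> the one buffer supplied by a process waiting in receive
        self.waiting = {}

    def receive(self, addr):
        """Record that a process listens on addr and return its buffer."""
        buffer = []  # stands in for the single message buffer pointed to by m
        self.waiting[addr] = buffer
        return buffer

    def deliver(self, addr, message):
        """Handle an arriving message; return True if it could be delivered."""
        buffer = self.waiting.pop(addr, None)
        if buffer is None:
            return False        # no receive is outstanding: nowhere to copy it
        buffer.append(message)  # copy the message; the receiver would be unblocked
        return True


kernel = UnbufferedKernel()
early = kernel.deliver(7, "request A")    # send arrives before any receive
buf = kernel.receive(7)                   # the server now listens on address 7
on_time = kernel.deliver(7, "request B")  # send arrives after the receive
late = kernel.deliver(7, "request C")     # server has not called receive again
```

Only "request B" reaches the server. The other two arrive when no receive is outstanding, so the kernel has neither a process nor a buffer to associate with them.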

One implementation strategy is to just discard the message, let the client time out, and hope the server has called receive before the client retransmits. This approach is easy to implement, but with bad luck, the client (or more likely, the client's kernel) may have to try several times before succeeding. Worse yet, if enough consecutive attempts fail, the client's kernel may give up, falsely concluding that the server has crashed or that the address is invalid.
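A small sketch of the discard-and-retransmit strategy shows how a healthy but slow server can be mistaken for a crashed one. The functions make_server and send_with_retries, and the retry limit of three, are assumptions chosen for illustration; real systems use timers rather than a simple attempt counter.

```python
def make_server(ready_after):
    """Return a delivery function that discards the first `ready_after` arrivals.

    This models a server that has not yet called receive when those
    messages arrive (hypothetical helper).
    """
    state = {"arrivals": 0}

    def try_deliver(message):
        state["arrivals"] += 1
        return state["arrivals"] > ready_after

    return try_deliver


def send_with_retries(try_deliver, message, max_attempts=3):
    """Retransmit until delivered; return the attempt number, or None on giving up."""
    for attempt in range(1, max_attempts + 1):
        if try_deliver(message):
            return attempt
        # In a real kernel, a timeout would elapse here before retransmitting.
    return None  # the client's kernel gives up


lucky = send_with_retries(make_server(ready_after=2), "request")
unlucky = send_with_retries(make_server(ready_after=5), "request")
```

In the second case the server is alive and would have accepted the sixth transmission, but the client stops after three and reports a failure.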

In a similar vein, suppose that two or more clients are using the server of Fig. 2-9(a). After the server has accepted a message from one of them, it is no longer listening to its address until it has finished its work and gone back to the top of the loop to call receive again. If it takes a while to do the work, the other clients may make multiple attempts to send to it, and some of them may give up, depending on the values of their retransmission timers and how impatient they are.

The second approach to dealing with this problem is to have the receiving kernel keep incoming messages around for a little while, just in case an appropriate receive is done shortly. Whenever an "unwanted" message arrives, a timer is started. If the timer expires before a suitable receive happens, the message is discarded.
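The timer-based approach might be sketched as follows. This is a simplified model under stated assumptions: HoldingKernel is a hypothetical name, time is passed in explicitly as a logical clock so the behavior is reproducible, and at most one unwanted message is held per address.

```python
class HoldingKernel:
    """Toy kernel that keeps an unwanted message for a limited time."""

    def __init__(self, hold_time):
        self.hold_time = hold_time
        self.held = {}  # addr -> (message, deadline); one message per address

    def deliver(self, addr, message, now):
        """An "unwanted" message arrives: store it and start its timer."""
        self.held[addr] = (message, now + self.hold_time)

    def receive(self, addr, now):
        """Return a held message if its timer has not expired, else None."""
        entry = self.held.pop(addr, None)
        if entry is None:
            return None  # nothing held; a blocking receive would wait here
        message, deadline = entry
        if now > deadline:
            return None  # the timer expired, so the message counts as discarded
        return message


kernel = HoldingKernel(hold_time=5)
kernel.deliver(7, "request A", now=0)
in_time = kernel.receive(7, now=3)    # receive happens within the hold time
kernel.deliver(7, "request B", now=10)
too_late = kernel.receive(7, now=20)  # receive happens after the timer expired
```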

Although this method reduces the chance that a message will have to be thrown away, it introduces the problem of storing and managing prematurely arriving messages. Buffers are needed and have to be allocated, freed, and generally managed. A conceptually simple way of dealing with this buffer management is to define a new data structure called a mailbox. A process that is interested in receiving messages tells the kernel to create a mailbox for it, and specifies an address to look for in network packets. Henceforth, all incoming messages with that address are put in the mailbox. The call to receive now just removes one message from the mailbox, or blocks (assuming blocking primitives) if none is present. In this way, the kernel knows what to do with incoming messages and has a place to put them. This technique is frequently referred to as a buffered primitive, and is illustrated in Fig. 2-12(b).
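A mailbox can be modeled as a per-address queue owned by the kernel. The sketch below is illustrative only: MailboxKernel, create_mailbox, and deliver are hypothetical names, and returning None stands in for blocking the caller.

```python
from collections import deque


class MailboxKernel:
    """Toy kernel that queues incoming messages in per-address mailboxes."""

    def __init__(self):
        self.mailboxes = {}  # addr -> queue of messages not yet received

    def create_mailbox(self, addr):
        """A process asks the kernel to collect messages sent to addr."""
        self.mailboxes[addr] = deque()

    def deliver(self, addr, message):
        """Put an arriving message in the matching mailbox, if one exists."""
        box = self.mailboxes.get(addr)
        if box is None:
            return False  # no process has claimed this address
        box.append(message)
        return True

    def receive(self, addr):
        """Remove one message from the mailbox; None models a blocked caller."""
        box = self.mailboxes[addr]
        return box.popleft() if box else None


kernel = MailboxKernel()
kernel.create_mailbox(7)
kernel.deliver(7, "request A")  # both sends happen before any receive
kernel.deliver(7, "request B")
first = kernel.receive(7)
second = kernel.receive(7)
empty = kernel.receive(7)       # mailbox is empty: the caller would block
```

Unlike the unbuffered case, messages sent before the receive are kept and handed over in arrival order.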

At first glance, mailboxes appear to eliminate the race conditions caused by messages being discarded and clients giving up. However, mailboxes are finite and can fill up. When a message arrives for a mailbox that is full, the kernel once again is confronted with the choice of either keeping it around for a while, hoping that at least one message will be extracted from the mailbox in time, or discarding it. These are precisely the same choices we had in the unbuffered case. Although we have perhaps reduced the probability of trouble, we have not eliminated it, and have not even managed to change its nature.
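The limitation is easy to see once the mailbox is given a capacity. The following sketch assumes the kernel simply discards a message that arrives at a full mailbox; BoundedMailbox and its dropped counter are hypothetical.

```python
from collections import deque


class BoundedMailbox:
    """Toy mailbox with a fixed capacity that discards messages when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.messages = deque()
        self.dropped = 0  # number of messages discarded because the box was full

    def deliver(self, message):
        if len(self.messages) >= self.capacity:
            self.dropped += 1  # same choice as the unbuffered case: discard
            return False
        self.messages.append(message)
        return True

    def receive(self):
        return self.messages.popleft() if self.messages else None


box = BoundedMailbox(capacity=2)
results = [box.deliver(m) for m in ("m1", "m2", "m3")]  # third one finds it full
taken = box.receive()            # the server extracts one message
after_space = box.deliver("m4")  # now there is room again
```

The third message is lost exactly as it would have been without a mailbox; the buffer only delays the point at which discarding starts.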

In some systems, another option is available: do not let a process send a message if there is no room to store it at the destination. To make this scheme work, the sender must block until an acknowledgement comes back saying that the message has been received. If the mailbox is full, the sender can be backed up and retroactively suspended as though the scheduler had decided to suspend it just before it tried to send the message. When space becomes available in the mailbox, the sender is allowed to try again.
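As a rough single-machine analogy, Python's standard queue.Queue with a maxsize behaves this way: put suspends the caller while the queue is full and resumes it once space appears. This sketch uses threads in one process rather than kernels exchanging acknowledgements over a network, so it illustrates only the blocking behavior, not the protocol.

```python
import queue
import threading

mailbox = queue.Queue(maxsize=1)  # mailbox with room for a single message
mailbox.put("m1")                 # the mailbox is now full

sent = threading.Event()


def sender():
    mailbox.put("m2")  # blocks here: there is no room at the destination
    sent.set()         # reached only after space becomes available


t = threading.Thread(target=sender, daemon=True)
t.start()

blocked = not sent.wait(timeout=0.2)  # the sender is still suspended
first = mailbox.get()                 # the receiver frees a slot
t.join(timeout=2.0)                   # the sender can now complete its put
resumed = sent.is_set()
second = mailbox.get()
```

No message is discarded here, at the cost of stalling the sender for as long as the receiver is slow.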
