Book: Distributed operating systems

1.4.3. Multiprocessor Timesharing Systems

The last combination we wish to discuss is tightly-coupled software on tightly-coupled hardware. While various special-purpose machines exist in this category (such as dedicated database machines), the most common general-purpose examples are multiprocessors that are operated as a UNIX timesharing system, but with multiple CPUs instead of one. To the outside world, a multiprocessor with 32 30-MIPS CPUs acts very much like a single 960-MIPS CPU. This is the single-system image discussed above, except that implementing it on a multiprocessor makes life much easier, since the entire design can be centralized.

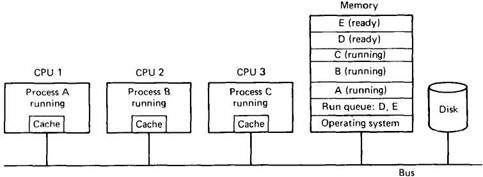

The key characteristic of this class of system is the existence of a single run queue: a list of all the processes in the system that are logically unblocked and ready to run. The run queue is a data structure kept in the shared memory. As an example, consider the system of Fig. 1-11, which has three CPUs and five processes that are ready to run. All five processes are located in the shared memory, and three of them are currently executing: process A on CPU 1, process B on CPU 2, and process C on CPU 3. The other two processes, D and E, are also in memory, waiting their turn.

Fig. 1-11. A multiprocessor with a single run queue.
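
As a minimal sketch of the state shown in Fig. 1-11, the Python fragment below models the run queue as a FIFO `deque` and lets each of the three CPUs take the next ready process. The names `run_queue` and `running`, and the choice to keep only the waiting processes in the queue once a CPU has taken one, are illustrative assumptions rather than how any particular kernel stores this information.

```python
from collections import deque

# Hypothetical model of Fig. 1-11: five ready processes in shared memory.
run_queue = deque(["A", "B", "C", "D", "E"])
running = {}  # CPU number -> process it is currently executing

# Each of the three CPUs removes the process at the head of the queue.
for cpu in (1, 2, 3):
    running[cpu] = run_queue.popleft()

print(running)          # {1: 'A', 2: 'B', 3: 'C'}
print(list(run_queue))  # ['D', 'E']
```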

Now suppose that process B blocks waiting for I/O or its quantum runs out. Either way, CPU 2 must suspend it and find another process to run. CPU 2 will normally begin executing operating system code (located in the shared memory). After having saved all of B's registers, it will enter a critical region to run the scheduler to look for another process to run. It is essential that the scheduler be run as a critical region to prevent two CPUs from choosing the same process to run next. The necessary mutual exclusion can be achieved by using monitors, semaphores, or any other standard construction used in single-processor systems.

Once CPU 2 has gained exclusive access to the run queue, it can remove the first entry, D, exit from the critical region, and begin executing D. Initially, execution will be slow, since CPU 2's cache is full of words belonging to that part of the shared memory containing process B, but after a little while, these will have been purged and the cache will be full of D's code and data, so execution will speed up.
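
The scheduling step above can be sketched with a lock standing in for the monitor or semaphore. In this hypothetical `RunQueue` class, `pick_next` holds the lock while it both checks that the queue is non-empty and removes the first entry, so two CPUs (modeled here as threads) can never choose the same process. The class, method, and helper names are assumptions for illustration.

```python
import threading
from collections import deque


class RunQueue:
    """Hypothetical shared run queue; pick_next runs as a critical region."""

    def __init__(self, processes):
        self._ready = deque(processes)
        self._lock = threading.Lock()  # plays the role of a semaphore/monitor

    def pick_next(self):
        # Only one CPU at a time may examine and modify the queue, so the
        # emptiness test and the removal happen together, atomically.
        with self._lock:
            return self._ready.popleft() if self._ready else None

    def make_ready(self, process):
        # A process that becomes unblocked is appended to the tail.
        with self._lock:
            self._ready.append(process)


def simulate(num_cpus, processes):
    """Let num_cpus threads ("CPUs") drain the queue; return what each picked."""
    queue = RunQueue(processes)
    picked = [[] for _ in range(num_cpus)]  # picked[i]: processes run by CPU i

    def cpu(i):
        while (proc := queue.pick_next()) is not None:
            picked[i].append(proc)

    threads = [threading.Thread(target=cpu, args=(i,)) for i in range(num_cpus)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return picked


picked = simulate(3, range(10_000))
chosen = [p for per_cpu in picked for p in per_cpu]
assert sorted(chosen) == list(range(10_000))  # every process chosen exactly once
```

For example, `RunQueue(["D", "E"]).pick_next()` returns `"D"`, matching CPU 2's choice in the text. Holding the lock across the whole check-and-remove sequence is what matters; locking only the removal would let two CPUs both see one remaining entry and then collide when removing it.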

Because none of the CPUs have local memory and all programs are stored in the global shared memory, it does not matter on which CPU a process runs. If a long-running process is scheduled many times before it completes, on the average, it will spend about the same amount of time running on each CPU. The only factor that has any effect at all on CPU choice is the slight gain in performance when a process starts up on a CPU that is currently caching part of its address space. In other words, if all CPUs are idle, waiting for I/O, and one process becomes ready, it is slightly preferable to allocate it to the CPU it was last using, assuming that no other process has used that CPU since (Vaswani and Zahorjan, 1991).
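
The affinity rule can be expressed as a small selection function. This is a sketch under assumptions: the `Cpu` record, its `last_process` field, and the fallback of taking the first idle CPU are hypothetical. Because memory is shared, the fallback is always correct; it is only possibly slower while the cache warms up.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cpu:
    cpu_id: int
    idle: bool
    last_process: Optional[str]  # most recent process run here (None if none)


def choose_cpu(process: str, cpus: List[Cpu]) -> Optional[int]:
    """Return the id of an idle CPU for `process`, or None if all are busy.

    Prefer an idle CPU whose most recent process was this one: no other
    process has used it since, so its cache may still hold part of the
    process's address space. Otherwise any idle CPU will do.
    """
    idle = [c for c in cpus if c.idle]
    if not idle:
        return None
    for c in idle:
        if c.last_process == process:
            return c.cpu_id
    return idle[0].cpu_id


cpus = [Cpu(1, True, "A"), Cpu(2, True, "B"), Cpu(3, True, None)]
print(choose_cpu("B", cpus))  # 2: CPU 2 last ran B
print(choose_cpu("D", cpus))  # 1: no affinity, so the first idle CPU
```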

As an aside, if a process blocks for I/O on a multiprocessor, the operating system has the choice of suspending it or just letting it do busy waiting. If most I/O is completed in less time than it takes to do a process switch, busy waiting is preferable. Some systems let the process keep its processor for a few milliseconds, in the hope that the I/O will complete soon, but if that does not occur before the timer runs out, a process switch is made (Karlin et al., 1991). The same reasoning applies to a process waiting to enter a critical region: if most critical regions are short, this approach can avoid many expensive process switches.
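
The policy described above, which busy-waits for a bounded time and then blocks, can be sketched as follows. The function name `wait_for_completion` and the use of a `threading.Event` to signal that the I/O (or lock) is available are assumptions for illustration. In a kernel the spin limit would be enforced by a timer, and by the criterion in the text it would sensibly be set near the cost of a process switch.

```python
import threading
import time


def wait_for_completion(done: threading.Event, spin_limit_s: float) -> str:
    """Busy-wait up to spin_limit_s seconds, then block until `done` is set.

    Returns "spun" if the event arrived during the busy-wait phase (no process
    switch needed) and "blocked" if the waiter had to give up the CPU.
    """
    deadline = time.monotonic() + spin_limit_s
    while True:  # keep the processor, polling
        if done.is_set():
            return "spun"
        if time.monotonic() >= deadline:
            break
    done.wait()  # timer ran out: block (a process switch)
    return "blocked"


fast = threading.Event()
fast.set()  # I/O already complete
print(wait_for_completion(fast, 0.005))  # spun

slow = threading.Event()
threading.Timer(0.2, slow.set).start()  # I/O completes after about 200 ms
print(wait_for_completion(slow, 0.005))  # blocked
```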

An area in which this kind of multiprocessor differs appreciably from a network or distributed system is in the organization of the file system. The operating system normally contains a traditional file system, including a single, unified block cache. When any process executes a system call, a trap is made to the operating system, which carries it out, using semaphores, monitors, or something equivalent, to lock out other CPUs while critical sections are being executed or central tables are being accessed. In this way, when a WRITE system call is done, the central block cache is locked, the new data are entered into the cache, and the lock is released. Any subsequent READ call will see the new data, just as on a single-processor system. On the whole, the file system is hardly different from a single-processor file system. In fact, on some multiprocessors, one of the CPUs is dedicated to running the operating system; the other ones run user programs. This situation is undesirable, however, as the CPU running the operating system is often a bottleneck. This point is discussed in detail by Boykin and Langerman (1990).
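
The locking discipline for the central block cache can be sketched as below. `BlockCache` is a hypothetical class: a Python dictionary stands in for the cache, a single lock stands in for the semaphore or monitor, and a cache miss simply returns `None` instead of reading the block from disk.

```python
import threading
from typing import Dict, Optional


class BlockCache:
    """Single, unified block cache shared by every CPU (illustrative only)."""

    def __init__(self) -> None:
        self._blocks: Dict[int, bytes] = {}  # block number -> block contents
        self._lock = threading.Lock()

    def write(self, block_no: int, data: bytes) -> None:
        # WRITE: lock the central cache, enter the new data, release the lock.
        with self._lock:
            self._blocks[block_no] = data

    def read(self, block_no: int) -> Optional[bytes]:
        # READ takes the same lock, so it sees every WRITE that finished first.
        with self._lock:
            return self._blocks.get(block_no)  # None models a cache miss


cache = BlockCache()
writers = [threading.Thread(target=cache.write, args=(n, bytes([n]))) for n in range(4)]
for w in writers:
    w.start()
for w in writers:
    w.join()
print(cache.read(2))  # b'\x02'
```

Because every READ and WRITE goes through the same lock, this sketch gives the single-processor semantics the text describes; the price is that all CPUs contend for one lock.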

It should be clear that the methods used on the multiprocessor to achieve the appearance of a virtual uniprocessor are not applicable to machines that do not have shared memory. Centralized run queues and a single shared block cache work only when all CPUs can access them with very low delay. Although these data structures could be simulated on a network of machines, the communication costs make this approach prohibitively expensive.

Figure 1-12 shows some of the differences between the three kinds of systems we have examined above.

| Item | Network operating system | Distributed operating system | Multiprocessor operating system |
|---|---|---|---|
| Does it look like a virtual uniprocessor? | No | Yes | Yes |
| Do all machines have to run the same operating system? | No | Yes | Yes |
| How many copies of the operating system are there? | n | n | 1 |
| How is communication achieved? | Shared files | Messages | Shared memory |
| Are agreed-upon network protocols required? | Yes | Yes | No |
| Is there a single run queue? | No | No | Yes |
| Does file sharing have well-defined semantics? | Usually no | Yes | Yes |

Fig. 1-12. Comparison of three different ways of organizing n CPUs.
