Book: Agile Software Development

An Ecosystem That Ships Software

The purpose of this chapter is to discuss and boil down the topic of methodologies until the rules of the methodology design game, and how to play that game, are clear.

"Methodology Concepts" covers the basic vocabulary and concepts needed to design and compare methodologies. These include the obvious concepts such as roles, techniques, and standards and also less-obvious concepts such as weight, ceremony, precision, stability, and tolerance. In terms of "Levels of Audience" as described in the introduction, this is largely Level 1 material. It is needed for the more advanced discussions that follow.

"Methodology Design Principles" discusses seven principles that can be used to guide the design of a methodology. The principles highlight the cost of moving to a heavier methodology as well as when to accept that cost. They also show how to use work-product stability in deciding how much concurrent development to employ.

"XP under Glass" applies the principles to analyze an existing, agile methodology. It also discusses using the principles to adjust XP for slightly different situations.

"Why Methodology at All?" revisits that key question in the light of the preceding discussion and presents the different uses to which methodologies are put.

An Ecosystem That Ships Software

"Methodology is a social construction," Ralph Hodgson told me in 1993. Two years went by before I started to understand.

Your "methodology" is everything you regularly do to get your software out. It includes who you hire, what you hire them for, how they work together, what they produce, and how they share. It is the combined job descriptions, procedures, and conventions of everyone on your team. It is the product of your particular ecosystem and is therefore a unique construction of your organization.

All organizations have a methodology: It is simply how they do business. Even the proverbial trio in a garage have a way of working (a way of trading information, of separating work, of putting it back together), all founded on assumed values and cultural norms. The way of working includes what people choose to spend their time on, how they

I use the word methodology as found in the Merriam-Webster dictionaries: "A series of related methods or techniques." A method is a "systematic procedure," similar to a technique.

(Readers of the Oxford English Dictionary may note that some OED editions only carry the definition of methodology as "study of methods," while others carry both. This helps explain the controversy over the word methodology.)

The distinction between methodology and method is useful. Reading the phrases "a method for finding classes from use cases" or "different methods are suited for different problems," we understand that the author is discussing techniques and procedures, not establishing team rules and conventions. That frees the use of the word methodology for the larger issues of coordinating people's activities on a team.

Only a few companies bother to try to write it all down (usually just the large consulting houses and the military). A few have gone so far as to create an expert system that prints out the full methodology needed for a project based on project staffing, complexity, deadlines, and the like. None I have seen captures cultural assumptions or provides for variations among values or cultures.

Boil and condense the subject of methodology long enough and you get this one-sentence summary: "A methodology is the conventions that your group agrees to."

"The conventions your group agrees to" is a social construction. It is also a construction that you can and should revisit from time to time.

Coordination is important. The same average people who produce average designs when working alone often produce good designs in collaboration. Conversely, all the smartest people together still won't produce group success without coordination, cooperation, and communication. Most of us have witnessed or heard of such groups. Team success hinges on cooperation, communication, and coordination.

Structural Terms

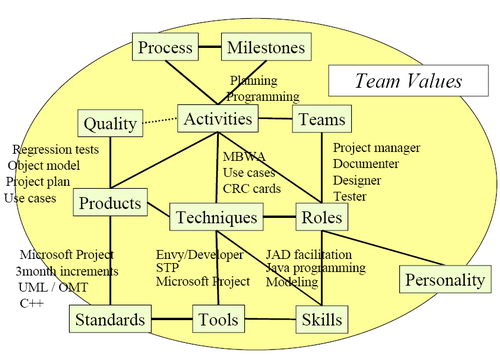

The first methodology structure I saw contained about seven elements. The one I now draw contains 13 (see Figure 4-1). The elements apply to any team endeavor, whether it is software development, rock climbing, or poetry writing. What you write for each box will vary, but the names of the elements won't.

Methodology Concepts

Figure 4-1. Elements of a methodology.

Roles. Who you employ, what you employ them for, what skills they are supposed to have. Equally important, it turns out, are the personality traits expected of the person. A project manager should be good with people, a user interface designer should have natural visual talents and some empathy for user behavior, an object-oriented program designer should have good abstraction faculties, and a mentor should be good at explaining things.

It is bad for the project when the individuals in the jobs don't have the traits needed for the job (for example, a project manager who can't make decisions or a mentor who does not like to communicate).

Skills. The skills needed for the roles. The "personal prowess" of a person in a role is a product of his training and talent.

Programmers attend classes to learn object-oriented, Java programming and unit-testing skills.

User interface designers learn how to conduct usability examinations and do paper-based prototyping.

Managers learn interviewing, motivating, hiring, and critical-path task-management skills.

The best people draw heavily upon their natural talent, but in most cases adequate skills can be acquired through training and practice.

Teams. The roles that work together under various circumstances.

There may be only one team on a small project. On a large project, there are likely to be multiple, overlapping teams, some aimed at harnessing specific technologies and some aimed at steering the project or the system's architecture.

Techniques. The specific procedures people use to accomplish tasks. Some apply to a single person (writing a use case, managing by walking around, designing a class or test case), while others are aimed at groups of people (project retrospectives, group planning sessions). In general, I use the word technique if there is a prescriptive presentation of how to accomplish a task, using an understood body of knowledge.

Activities. How the people spend their days. Planning, programming, testing, and meeting are sample activities.

Some methodologies are work-product intensive, meaning that they focus on the work products that need to be produced. Others are activity-intensive, meaning that they focus on what the people should be doing during the day. Thus, where the Rational Unified Process is tool- and work-product intensive, Extreme Programming is activity intensive. It achieves its effectiveness, in part, by describing what the people should be doing with their day (pair programming, test-first development, refactoring, etc.).

Process. How activities fit together over time, often with pre- and post-conditions for the activities (for example, a design review is held two days after the material is sent out to participants and produces a list of recommendations for improvement). Process-intensive methodologies focus on the flow of work among the team members.

Process charts rarely convey the presence of loopback paths, where rework gets done. Thus, process charts are usually best viewed as workflow diagrams, describing who receives what from whom.

Work products. What someone constructs. A work product may be disposable, as with CRC design cards, or it may be relatively permanent, as with the usage manual or source code.

I find it useful to reserve deliverable to mean "a work product that gets passed across an organizational boundary." This allows us to apply the term deliverable at different scales: The deliverables that pass between two subteams are work products in terms of the larger project. The work products that pass between a project team and the team working on the next system are deliverables of the project and need to be handled more carefully.

Work products are described in generic terms such as "source code" and "domain object model." Rules about the notation to be used for each work product get described in the work product standards. Examples of source-code standards include Java, Visual Basic, and executable visual models. Examples of class diagram standards could be UML or OML.

Milestones. Events marking progress or completion. Some milestones are simply assertions that a task has been performed, and some involve the publication of documents or code.

A milestone has two key characteristics: It occurs in an instant of time, and it is either fully met or not met (it is not partially met). A document is either published or not, the code is delivered or not, the meeting was held or not.

Standards. The conventions the team adopts for particular tools, work products, and decision policies.

A coding standard might declare this: "Every function has the following header comment..."

A language standard might be this: "We'll be using fully portable Java."

A drawing standard for class diagrams might be this: "Only show public methods of persistent functions."

A tool standard might be this: "We'll use Microsoft Project, Together/J, JUnit, ..."

A project-management standard might be this: "Use milestones of two days to two weeks and incremental deliveries every two to three months."

Quality. Quality may refer to the activities or the work products.

In XP, the quality of the team's program is evaluated by examining the source code work product: "All checked-in code must pass unit tests at 100% at all times."

The XP team also evaluates the quality of their activities: Do they hold a stand-up meeting every day? How often do the programmers shift programming partners? How available are the customers for questions? In some cases, quality is given a numerical value; in other cases, a fuzzy value ("I wasn't happy with the team morale on the last iteration.").

Team Values. The rest of the methodology elements are governed by the team's value system. An aggressive team working on quick-to-market values will work very differently than a group that values families and goes home at a regular time every night.

As Jim Highsmith likes to point out, a group whose mission is to explore and locate new oil fields will operate on different values and produce different rules than a group whose mission is to squeeze every barrel out of a known oil field at the least possible cost.

Types of Methodologies

Rechtin (1997) categorizes methodologies themselves as normative, rational, participative, or heuristic.

Normative methodologies are based on solutions or sequences of steps known to work for the discipline. Electrical and other building codes in house wiring are examples. In software development, one would include state diagram verification in this category.

Rational methodologies (no connection with the company) are based on method and technique. They would be used for system analysis and engineering disciplines.

Participative methodologies are stakeholder based and capture aspects of customer involvement.

Heuristic methodologies are based on lessons learned. Rechtin cites their use in the aerospace business (space and aircraft design).

As a body of knowledge grows, sections of the methodology move from heuristic to normative and become codified as standard solutions for standard problems. In computer programming, searching algorithms have reached that point. The decision about whether to put people in common or private offices has not.

Most of software development is still in the stage where heuristic methodologies are appropriate.

Milestones

Milestones are markers for where interesting things happen in the project. At each milestone, one or more people in some named roles must get together to affect the course of a work product.

Three kinds of milestones are used on projects, each with its particular characteristics. They are

· Reviews

· Publications

· Declarations

In a review, several people examine a work product. With respect to reviews, we care about the following questions: Who is doing the reviewing? What are they reviewing? Who created that item? What is the outcome of the review? Few reviews cause a project to halt; most end with a list of suggestions that are supposed to be incorporated.

A publication occurs whenever a work product is distributed or posted for open viewing. Sending out meeting minutes, checking source code into a configuration-management system, and deploying software to users' workstations are different forms of publication. With respect to publications, we care about the following: What is being published? Who publishes it? Who receives it? What causes it to be published?

The declaration milestone is a verbal notice from one person to another, or to multiple people, that a milestone was reached. There is no objective measure for a declaration; it is simply an announcement or a promise. Declarations are interesting because they construct a web of promises inside the team's social structure. This form of milestone came as a surprise to me when I first detected it.

Discovering Declarations

The first declaration milestone I detected was made during a discussion with the manager of the technical writers on a 100-person project. I asked how she knew when to assign a person to start writing the on-line help text (its birth event).

She said it was when a team lead told her that a section of the application was "ready" for her.

I asked her what "ready" meant, whether it meant that the screen design was complete.

She said it only meant that the screen design was relatively stable. The team lead was, in essence, making the following promise:

"We estimate that the changes that we are still going to make are relatively small compared to the work the tech writer will be doing, and the rework the writer will do will be relatively small compared to the overall work. So this would be a good time to get the writing started."

That assertion is full of social promises. It is a promise, given by a trained person, that in his judgement the tradeoffs are balanced and that this is a good time to start.

A declaration ("It's ready!") is often the form of milestone that moves code from development to test, alpha delivery, beta delivery, and even deployment.

Declarations are interesting to me as a researcher, because I have not seen them described in process-centric methodologies, which focus on process entry and exit criteria. They are easier to discuss when we consider software development as a cooperative game. In a cooperative game, the project team's web of interrelationships, and the promises holding them together, are more apparent.
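The three kinds of milestones, and the questions asked about each, can be sketched as a small data structure. This is only an illustration in Python: the names `Kind`, `QUESTIONS`, and `Milestone` are hypothetical, and the single Boolean `met` field reflects the earlier statement that a milestone is either fully met or not met.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    REVIEW = "review"
    PUBLICATION = "publication"
    DECLARATION = "declaration"


# Questions the text asks about each kind of milestone.
QUESTIONS = {
    Kind.REVIEW: [
        "Who is doing the reviewing?",
        "What are they reviewing?",
        "Who created that item?",
        "What is the outcome of the review?",
    ],
    Kind.PUBLICATION: [
        "What is being published?",
        "Who publishes it?",
        "Who receives it?",
        "What causes it to be published?",
    ],
    # A declaration has no objective measure: it is an announcement or a promise.
    Kind.DECLARATION: [],
}


@dataclass
class Milestone:
    kind: Kind
    subject: str        # the work product or section concerned
    announced_by: str   # hypothetical field: who reviews, publishes, or declares
    met: bool = False   # fully met or not met, never partially met


# The "It's ready!" declaration from the technical-writing example.
ready = Milestone(Kind.DECLARATION, "screen design", "team lead")
ready.met = True
print(ready.kind.value, ready.met)
```

A declaration carries no checklist here, which mirrors the point that it rests on the judgement of the person making the promise rather than on entry and exit criteria.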

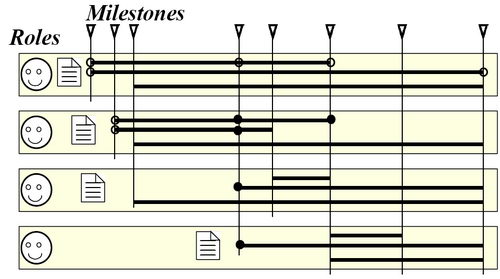

The role-deliverable-milestone chart is a quick way to view the methodology in brief and has an advantage over process diagrams in that it shows the parallelism involved in the project quite clearly. It also allows the team to see the key stages of completion the artifacts go through. This helps them manage their actions according to the intermediate states of the artifacts, as recommended in some modern methodologies (Highsmith 1999).

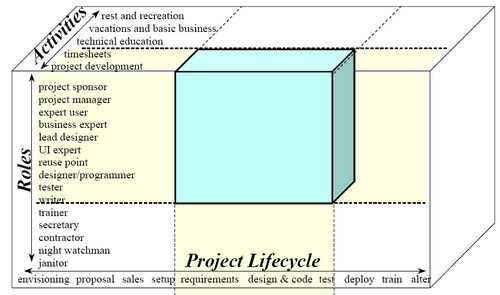

Figure 4-2. The three dimensions of scope.

Scope

The scope of a methodology consists of the range of roles and activities that it attempts to cover (Figure 4-2).

The earliest object-oriented methodologies presented the designer as having the key role and discussed the techniques, deliverables, and standards for the design activity of that role. These methodologies were considered inadequate in two ways:

· They were not as broad as needed. A real project involves more roles than just the OO designer, and each role involves more activities, more deliverables, and more techniques than these books presented.

· They were too constricting. Designers need more than one design technique in their toolbox.

Groups with a long history of continuous experience, such as the U.S. Department of Defense, Andersen Consulting, James Martin and Associates, IBM, and Ernst & Young, already had methodologies covering the standard life cycle of a project, even starting from the point of project sales and project setup. Their methodologies cover every person needed on the project, from staff assistant through sales staff, designer, project manager, and tester.

The point is that both are "methodologies." The scope of their concerns is different.

The scope of a methodology can be characterized along three axes: life-cycle coverage, role coverage, and activity coverage (Figure 4-2).

· Life-cycle coverage indicates when in the life cycle of the project the methodology comes into play, and when it ends.

· Role coverage refers to which roles fall into the domain of discussion.

· Activity coverage defines which activities of those roles fall into the domain of discussion. The methodology may take into account filling out time sheets (a natural inclusion as part of the project manager's project monitoring and scheduling assignment) and may omit vacation requests (because it is part of basic business operations).

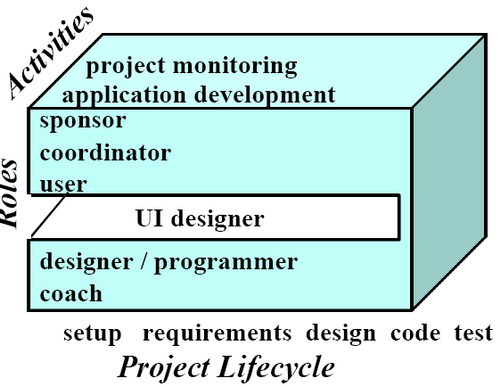

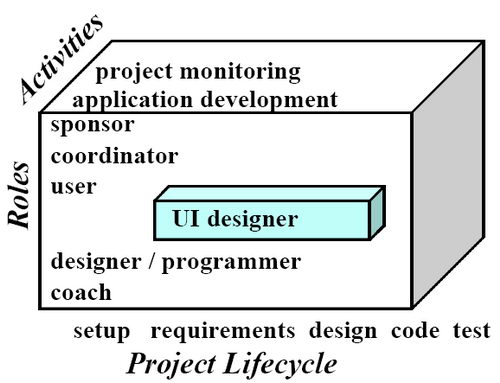

Figure 4-3. Scope of Extreme Programming.

Clarifying a methodology's intended scope helps take some of the heat out of methodology arguments. Often, two seemingly incompatible methodologies target different parts of the life cycle or different roles. Discussions about their differences go nowhere until their respective scope intentions are clarified.

In this light, we see that the early OO methodologies had a relatively small scope. They typically addressed only one role, the domain designer or modeler. For that role, only the actual domain modeling activity is represented, and only during the analysis and design stages. Within that very narrow scope, they covered one or a few techniques and outlined one or a few deliverables with standards. No wonder experienced designers felt they were inadequate for overall development.

The scope diagram helps us see where methodology fragments combine well. An example is the natural fit of Constantine and Lockwood's user interface design recommendations (Constantine 1999) with methodologies that omit discussion of UI design activities (leaving that aspect to authors who know more about the subject).

Figure 4-4. Scope of Constantine & Lockwood's Design for Use methodology fragment.

Without having these scoping axes at hand, people would ask Larry Constantine, "How does your methodology relate to the other agile methodologies on the market?" In a talk at Software Development 2001, Larry Constantine said he didn't know he was designing a methodology; he was just discussing good ways to design user interfaces.

Having the methodology scope diagram in view, we easily see how they fit. XP's scope of concerns is shown in Figure 4-3. Note that it lacks discussion of user interface design. The scope of concerns for Design for Use is shown in Figure 4-4. We see, from these figures, that the two fit together. The same applies for Design for Use and Crystal Clear.
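The idea that two methodology fragments fit together when their scopes do not collide can be sketched with sets along the three axes. The sketch below is an illustration only: the `Scope` type and every role, stage, and activity name in it are placeholders chosen for the example, not the actual contents of Figures 4-3 and 4-4. The only fact taken from the text is that XP's scope omits user interface design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scope:
    lifecycle: frozenset   # stages of the project covered
    roles: frozenset       # roles covered
    activities: frozenset  # activities of those roles covered


def overlap(a, b):
    """Activities that both fragments claim to cover."""
    return a.activities & b.activities


def combined(a, b):
    """Scope obtained by using both fragments together."""
    return Scope(a.lifecycle | b.lifecycle,
                 a.roles | b.roles,
                 a.activities | b.activities)


# Placeholder scopes (assumed values, for illustration only).
xp = Scope(frozenset({"design", "construction"}),
           frozenset({"programmer", "customer"}),
           frozenset({"planning", "programming", "testing"}))
design_for_use = Scope(frozenset({"design"}),
                       frozenset({"UI designer"}),
                       frozenset({"UI design"}))

print(overlap(xp, design_for_use))                  # empty: nothing is claimed twice
print(sorted(combined(xp, design_for_use).activities))
```

An empty overlap is what "the two fit together" looks like in this model; a non-empty one would point to the activities where the two sets of conventions need to be reconciled.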

Conceptual Terms

To discuss the design of a methodology, we need different terms: methodology size, ceremony, and weight; problem size; project size; system criticality; precision; accuracy; relevance; tolerance; visibility; scale; and stability.

Methodology Size. The number of control elements in the methodology. Each deliverable, standard, activity, quality measure, and technique description is an element of control. Some projects and authors will wish for smaller methodologies; some will wish for larger.

Ceremony. The amount of precision and the tightness of tolerance in the methodology. Greater ceremony corresponds to tighter controls (Booch 1995). One team may write use cases on napkins and review them over lunch. Another team may prefer to fill in a three-page template and hold half-day reviews. Both groups write and review use cases, the former using low ceremony, the latter using high ceremony.

The amount of ceremony in a methodology depends on how life-critical the system will be and on the fears and wishes of the methodology author, as we will see.

Methodology Weight. The product of size and ceremony, the number of control elements multiplied by the ceremony involved in each. This is a conceptual product (because numbers are not attached to size and ceremony), but it is still useful.
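Although weight is a conceptual product, a toy calculation can make the idea concrete. The sketch below assumes a made-up ordinal scale for ceremony (`CEREMONY_SCORE`); the text attaches no numbers to size or ceremony, so the scores and the `ControlElement` type are purely illustrative.

```python
from dataclasses import dataclass

# Hypothetical ordinal scores; the text deliberately assigns no numbers.
CEREMONY_SCORE = {"low": 1, "medium": 2, "high": 3}


@dataclass
class ControlElement:
    name: str      # a deliverable, standard, activity, quality measure, or technique
    ceremony: str  # "low", "medium", or "high" (assumed labels)


def methodology_size(elements):
    """Size: the number of control elements."""
    return len(elements)


def methodology_weight(elements):
    """Weight: each control element counted with the ceremony involved in it."""
    return sum(CEREMONY_SCORE[e.ceremony] for e in elements)


# The two teams from the ceremony example: same elements, different ceremony.
napkin_team = [ControlElement("use cases", "low"),
               ControlElement("use-case review", "low")]
template_team = [ControlElement("use cases", "high"),
                 ControlElement("use-case review", "high")]

print(methodology_size(napkin_team), methodology_weight(napkin_team))
print(methodology_size(template_team), methodology_weight(template_team))
```

Both teams have the same methodology size, yet the template-and-half-day-review team carries three times the weight under these assumed scores.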

Problem Size. The number of elements in the problem and their inherent cross-complexity.

There is no absolute measure of problem size, because a person with different knowledge is likely to see a simplifying pattern that reduces the size of the problem. Some problems are clearly different enough from others that relative magnitudes can be discussed (launching a space shuttle is a bigger problem than printing a company's invoices).

The difficulty in deciding the problem size is that there will often be controversy over how many people are needed to deliver the product and what the corresponding methodology weight is.

Project Size. The number of people whose efforts need to be coordinated: staff size. Depending on the situation, you may be coordinating only programmers or an entire department with many roles.

Many people use the phrase "project size" ambiguously, shifting the meaning from staff size to problem size even within a sentence. This causes much confusion, particularly because a small, sharp team often outperforms a large, average team.

The relationships among problem size, staff size, and methodology size are discussed in the next section.

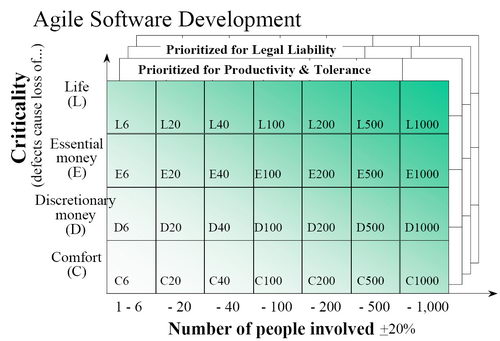

System Criticality. The damage from undetected defects. I currently classify criticality simply as one of loss of comfort, loss of discretionary money, loss of irreplaceable money, or loss of life. Other classifications are possible.

Precision. How much you care to say about a particular topic. Pi to one decimal place of precision is 3.1, to four decimal places is 3.1416. Source code contains more precision than a class diagram; assembler code contains more than its high-level source code. Some methodologies call for more precision earlier than others, according to the methodology author's wishes.

Accuracy. How correct you are when you speak about a topic. To say "Pi to one decimal place is 3.3" would be inaccurate. The final object model needs to be more accurate than the initial one. The final GUI description is more accurate than the low-fidelity prototypes. Methodologies cover the growth of accuracy as well as precision.
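The pi example separates the two ideas cleanly, and it is easy to check in a few lines. In this small sketch the helper names `to_precision` and `is_accurate` are hypothetical; precision is how many decimal places are stated, and accuracy is whether the stated value is right at that precision.

```python
import math


def to_precision(value, places):
    """State a value at a chosen precision (number of decimal places)."""
    return round(value, places)


def is_accurate(claim, true_value, places):
    """A claim is accurate if it matches the true value at the stated precision."""
    return claim == round(true_value, places)


print(to_precision(math.pi, 1))      # 3.1
print(to_precision(math.pi, 4))      # 3.1416
print(is_accurate(3.1, math.pi, 1))  # True: low precision, but accurate
print(is_accurate(3.3, math.pi, 1))  # False: same precision, inaccurate
```

The last two lines have the same precision; only the accuracy differs.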

Relevance. Whether or not to speak about a topic. User interface prototypes do not discuss the domain model. Infrastructure design is not relevant to collecting user functional requirements. Methodologies discuss different areas of relevance.

Tolerance. How much variation is permitted.

The team standards may require revision dates to be put into the program code, or not. The tolerance statement may say that a date must be found, either put in by hand or added by some automated tool. A methodology may specify line breaks and indentation, leave those to people's discretion, or state acceptable bounds. An example in a decision standard is stating that a working release must be available every three months, plus or minus one month.
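The release-interval example amounts to a target value with a permitted band around it. A minimal sketch, with a hypothetical function name and the three-month target and one-month tolerance taken from the example above as defaults:

```python
def within_tolerance(actual_months, target_months=3, tolerance_months=1):
    """True if the interval between working releases stays inside the stated bounds."""
    return abs(actual_months - target_months) <= tolerance_months


print(within_tolerance(2))    # True: one month early is permitted
print(within_tolerance(4))    # True: one month late is permitted
print(within_tolerance(5))    # False: outside the stated bounds
```

Tightening `tolerance_months` toward zero is one way to picture a move toward higher ceremony for this particular standard.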

Visibility. How easily an outsider can tell if the methodology is being followed. Process initiatives such as ISO 9001 focus on visibility issues. Because achieving visibility creates overhead (cost in time, money, or both), agile methodologies as a group lower the emphasis on such visibility. As with ceremony, different amounts of visibility are appropriate for different situations.

Scale. How many items are rolled together to be presented as a single item. Booch's former "class categories" provided for a scaled view of a set of classes. The UML "package" allows for scaled views of use cases, classes, or hardware boxes. Project plans, requirements, and designs can all be presented at different scales.

Scale interacts somewhat with precision. The printer or monitor's dot density limits the amount of detail that can be put onto one screen or page. However, even if it could all be put onto one page, some people would not want to see all that detail. They want to see a rolled-up or high-level version.

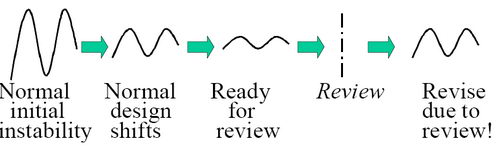

Stability. How likely it is to change. I use only three stability levels: wildly fluctuating, as when a team is just getting started; varying, as when some development activity is in mid-stride; and relatively stable, as just before a requirements / design / code review or product shipment.

One way to find the stability state is to ask: "If I were to ask the same questions today and in two weeks, how likely would I be to get the same answers?"

In the wildly fluctuating state, the answer is "Are you kidding? Who knows what this will be like in two weeks!"

In the varying state, the answer is "Somewhat similar, but of course the details are likely to change."

In the relatively stable state, the answer is "Pretty likely, although a few things will probably be different."

Other ways to determine the stability may include measuring the "churn" in the use case text, the diagrams, the code base, the test cases, and so on (I have not tried these).
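As a rough illustration of what measuring churn might look like, the sketch below compares two snapshots of a text work product line by line using Python's standard `difflib` module. The mapping from churn to the three stability levels uses invented thresholds (0.1 and 0.5); nothing in the text proposes specific numbers, and the approach is untested here just as it is in the text.

```python
import difflib


def churn(old_text, new_text):
    """Fraction of lines that differ between two snapshots (0.0 means identical)."""
    old_lines = old_text.splitlines()
    new_lines = new_text.splitlines()
    similarity = difflib.SequenceMatcher(None, old_lines, new_lines).ratio()
    return 1.0 - similarity


def stability_level(churn_value, stable_below=0.1, varying_below=0.5):
    """Map churn to a stability level; both thresholds are assumptions."""
    if churn_value < stable_below:
        return "relatively stable"
    if churn_value < varying_below:
        return "varying"
    return "wildly fluctuating"


# Hypothetical use case text, two weeks apart.
two_weeks_ago = "Clerk enters order\nSystem checks stock\nSystem prices order\nClerk confirms"
today = "Clerk enters order\nSystem checks stock\nSystem prices order\nCustomer confirms"

c = churn(two_weeks_ago, today)
print(c, stability_level(c))
```

The same comparison could be run over diagrams exported as text, the code base, or the test cases, taking snapshots two weeks apart to match the question posed above.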

Figure 4-5. A project map: a low-precision version of a project plan.

Precision

Precision is a core concept manipulated within a methodology. Every category of work product has low-, medium-, and high-precision versions.

Here are the low-, medium-, and high-precision versions of some key work products.

The Project Plan

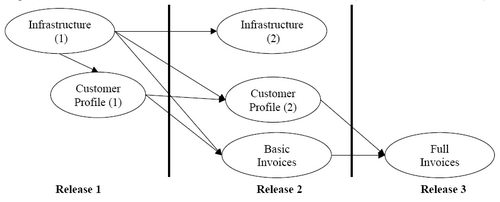

The low-precision view of a project plan is the project map (Figure 4-5). It shows the fundamental items to be produced, their dependencies, and which are to be deployed together. It may show the relative magnitudes of effort needed for each item. It does not show who will do the work or how long the work will take (which is why it is called a map and not a plan).

Those who are used to working with PERT charts will recognize the project map as a coarse-grained PERT chart showing project dependencies, augmented with marks showing where releases occur.

This low-precision project map is very useful in organizing the project before the staffing and timelines are established. In fact, I use it to derive timelines and staffing plans.
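A project map is essentially a dependency graph with release groupings, and it can be sketched without any names or dates attached. The example below uses Python's standard `graphlib` module (Python 3.9 or later); the items, dependencies, and releases are invented for illustration, and `release_is_consistent` is a hypothetical helper.

```python
from graphlib import TopologicalSorter

# Hypothetical project map: each item maps to the items it depends on.
project_map = {
    "customer records": set(),
    "order entry": {"customer records"},
    "invoicing": {"order entry"},
    "reporting": {"order entry", "customer records"},
}

# Which items are to be deployed together (assumed grouping, in release order).
releases = {
    "release 1": ["customer records", "order entry"],
    "release 2": ["invoicing", "reporting"],
}


def build_order(dependencies):
    """One valid order in which the items can be produced."""
    return list(TopologicalSorter(dependencies).static_order())


def release_is_consistent(releases, dependencies):
    """Every item's prerequisites ship in the same or an earlier release."""
    shipped = set()
    for items in releases.values():
        shipped.update(items)
        for item in items:
            if not dependencies[item] <= shipped:
                return False
    return True


print(build_order(project_map))
print(release_is_consistent(releases, project_map))
```

Note what the structure leaves out: no people and no durations. Adding those is what turns the map into a plan at the next levels of precision.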

The medium-precision version of the project plan is a project map expanded to show the dependencies between the teams and the due dates.

The high-precision version of the project plan is the well-known, task-based Gantt chart, showing task times, assignments, and dependencies.

The more precision in the plan, the more fragile it is, which is why constructing Gantt charts is so feared: they are time-consuming to produce and get out of date with the slightest surprise event.

Behavioral Requirements / Use Cases

Behavioral requirements are often written with use cases.

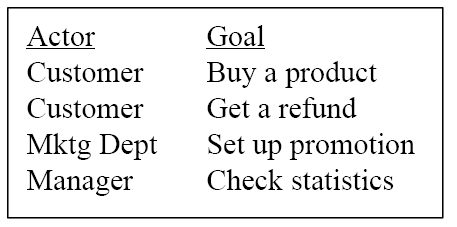

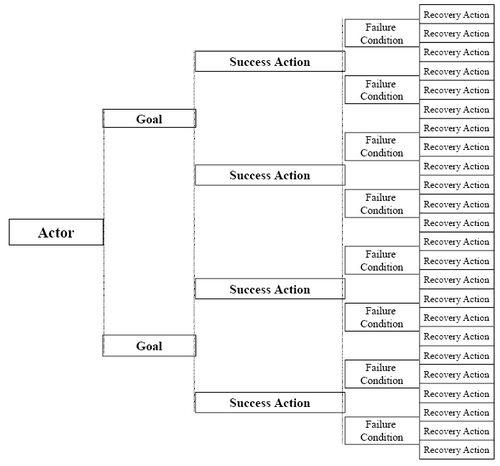

The lowest-precision version of a set of use cases is the Actors-Goals list, the list of primary actors and the goals they have with respect to the system (Figure 4-6). This lowest-precision view is useful at the start of the project when you are prioritizing the use cases and allocating work to teams. It is useful again whenever an overview of the system is needed.

Figure 4-6. An Actors-Goals list: the lowest-precision view of behavioral requirements.

The medium level of precision consists of a one-paragraph brief synopsis of the use case, or the use case's title and main success scenario.

The medium-high level of precision contains extensions and error conditions, named but not expanded.

The final, highest level of precision includes both the extension conditions and their handling.

These levels of precision are further described in Cockburn (2000).

The Program Design

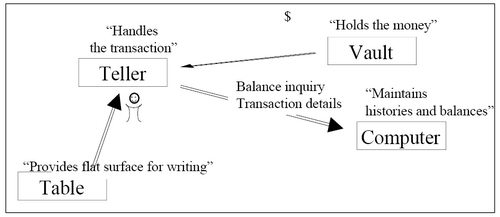

The lowest level of precision in an object-oriented design is a Responsibility-Collaboration diagram, a very coarse-grained variation on the UML object collaboration diagram (Figure 4-7). The interesting thing about this simple design presentation is that people can already review it and comment on the allocation of responsibilities.

A medium level of precision is the list of major classes, their major purpose, and primary collaboration channels.

A medium-high level is the class diagram, showing classes, attributes, and relationships with cardinality.

A high level of precision is the list of classes, attributes, relations with cardinality constraints, and functions with function signatures. These often are listed on the class diagram.

The final, highest level of precision is the source code.

Figure 4-7. A Responsibility-Collaboration diagram: the low-precision view of an object-oriented design.

These levels for design show the natural progression from Responsibility-Driven Design (Beck 1987, Cunningham URL-CRC) through object modeling with UML, to final source code. The three are not in opposition, as some imagine, but rather occur along a very natural progression of precision.

As we get better at generating final code from diagrams, the designers will add precision and code-generation annotations to the diagrams. As a consequence, the diagrams plus annotations become the "source code." The C++ or Java stops being source code and becomes generated code.

The User Interface Design

The low-precision description of the user interface is the screen flow diagram, which states only the purpose and linkage of each screen.

The medium level of precision description consists of the screen definitions with field lengths and the various field or button activation rules.

The highest precision definition of the user interface design is the program's source code.

Figure 4-8. Using low levels of precision to trigger other activities.

Working with "Precision"

People do a lot with these low-precision views. During the early stages of the project, they plan and evaluate. At later stages, they use the low-precision views for training.

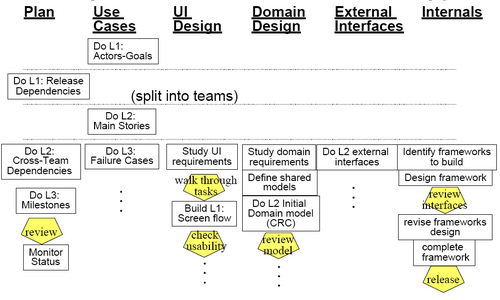

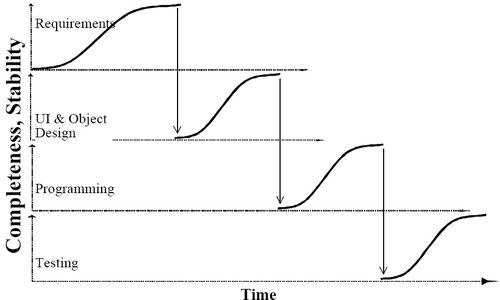

I currently think of a level of precision as being reached when there is enough information to allow another team to start work. Figure 4-8 shows the evolution of six types of work products on a project: the project plan, the use cases, the user interface design, the domain design, the external interfaces, and the infrastructure design.

In looking at Figure 4-8, we see that having the Actors-Goals list in place permits a preliminary project plan to be drawn up. This may consist of the project map along with time and staffing assignments and estimates. Having those, the teams can split up and capture the use-case briefs in parallel. As soon as the use-case briefs, or a significant subset of them, are in place, all the specialist teams can start working in parallel, evolving their own work products.

One thing to note about precision is that the work involved expands rapidly as precision increases. Figure 4-9 shows the work increasing as the use cases grow from actors, to actors and goals, to main success scenarios, to the various failure and other extension conditions, and finally to the recovery actions. A similar diagram could be drawn for each of the other types of work products.

Because higher-precision work products require more energy and also change more often than their low-precision counterparts, a general project strategy is to defer, or at least carefully manage, their construction and evolution.

Figure 4-9. Work expands with increasing precision level (shown for use cases).

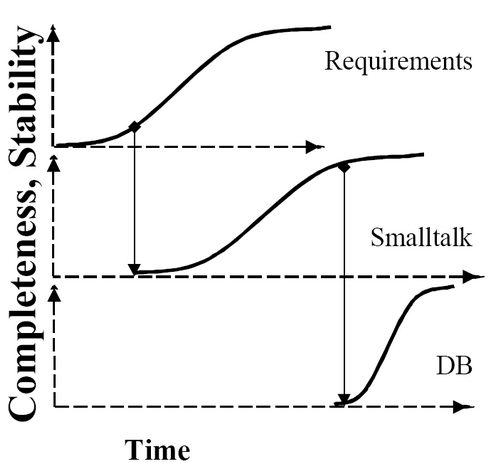

Stability and Concurrent Development

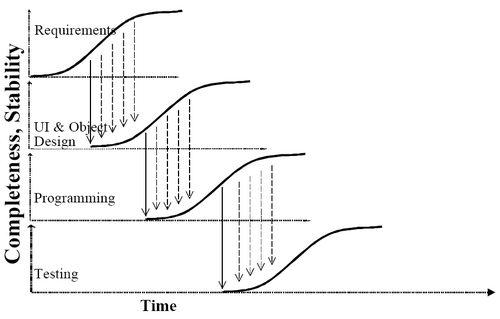

Stability, the "likelihood of change," varies over the course of the project (Figure 4-10).

A team starts in a situation of instability. Over time, team members reduce the fluctuations and reach a varying state as the design progresses. They finally get their work relatively stable just prior to a design review or publication. At that point, the reviewers and users provide new information to the development team, which makes the work less stable again for a period.

On many projects, instability jumps unexpectedly on occasions, such as when a supplier suddenly announces that he will not deliver on time, a product does not perform as predicted, or an algorithm does not scale as expected.

You might think that you should strive for maximum stability on a project.

However, the appropriate amount of stability to target varies by topic, by project priorities, and by stage in the project. Different experts have different recommendations about how to deal with the varying rates of changes across the work products and the project stages.

Figure 4-10. Reducing fluctuations over the course of a project.

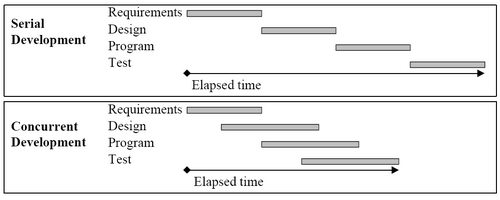

Figure 4-11. Successful serial development takes longer (but fewer workdays) compared to successful concurrent development.

The simplest approach is to say, "Don't start designing until the requirements are Stable (with a capital 'S'); don't start programming until the design is Stable," and so on. This is serial development. Its two advantages make it attractive to many people. It is, however, fraught with problems.

The first advantage is its simplicity. The person doing the scheduling simply sequences the activities one after the other, scheduling a downstream activity to start when an upstream one gets finished.

The second advantage is that, if there are no major surprises that force a change to the requirements or the design, a manager can minimize the number of work-hours spent on the project, by carefully scheduling when people arrive to work on their particular tasks.

There are three problems, though.

The first problem is that the elapsed time needed for the project is the straight sum of the times needed for requirements, design, programming, test, and so on. This is the longest time that can be needed for the project. With the most careful management, the project manager will get the longest elapsed time at the minimum labor cost. For projects on which reducing elapsed time is a top priority, this is a bad tradeoff.

The second problem is that surprises usually do crop up during the project. When one does, it causes unexpected revision of the requirements or design, raising the development cost. In the end, the project manager minimizes neither the labor cost nor the development time.

The third problem is absence of feedback from the downstream activities to the upstream activities.

In rare instances, the people doing the upstream activity can produce high-quality results without feedback from the downstream team. On most projects, though, the people creating the requirements need to see a running version of what they ordered, so they can correct and finalize their requests. Usually, after seeing the system in action, they change their requests, which forces changes in the design, coding, testing, and so on. Incorporating these changes lengthens the project's elapsed time and increases total project costs.

Selecting the serial-development strategy really only makes sense if you can be sure that the team will be able to produce good, final requirements and design on the first pass. Few teams can do this.

Figure 4-12. In serial development, each workgroup waits for the upstream workgroup to achieve complete stability before starting.

Figure 4-13. In concurrent development, each group starts as early as its communications and rework capabilities indicate. As it progresses, the upstream group passes update information to the downstream group in a continuous stream (the dashed arrows).

A different strategy, concurrent development, shortens the elapsed time and provides feedback opportunities at the cost of increased rework. Figure 4-11 and Figure 4-13 illustrate it, and Principle 7, "Efficiency is expendable away from bottleneck activities," on page ???, analyzes it further.

In concurrent development, each downstream activity starts at some point judged to be appropriate with respect to the completeness and stability of the upstream team's work (different downstream groups may start at different moments with respect to their upstream groups, of course). The downstream team starts operating with the available information, and as the upstream team continues work, it passes new information along to the downstream team.

To the extent that the downstream team guesses right about where the upstream team is going and the upstream team does not encounter major surprises, the downstream team will get its work approximately right. The team will do some rework along the way, as new information shows up.

The key issue in concurrent development is judging the completeness, stability, rework capability, and communication effectiveness of the teams.

The advantages of concurrent development are twofold, the exact opposites of the disadvantages of serial development:

· The upstream teams get feedback from the downstream teams. The designers can indicate how difficult the requirements are to implement. The programmers may produce code soon enough for the requirements group to get feedback on the desirability of the requirements.

· Although each downstream activity takes longer than it would if done serially and the upstream team never changed its mind, the downstream activity starts much earlier. The net effect is that the downstream team finishes sooner than it otherwise would, possibly just a few days or weeks after the upstream work is finished.
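The tradeoff between the two strategies can be made concrete with a rough numerical sketch. Everything in it is an assumption made for illustration: the phase durations, the `start_fraction` parameter (how far along the upstream work is when the downstream team starts), and the `rework_factor` parameter (how much longer a downstream activity takes because its inputs keep changing). It is a toy model, not a scheduling method taken from the text.

```python
from dataclasses import dataclass


@dataclass
class Phase:
    name: str
    days: float  # effort if done once, from stable inputs (hypothetical)


def serial_schedule(phases):
    """Each phase starts only when the previous one has finished."""
    elapsed = sum(p.days for p in phases)
    workdays = elapsed  # no rework, one phase active at a time
    return elapsed, workdays


def concurrent_schedule(phases, start_fraction, rework_factor):
    """Each downstream phase starts once start_fraction of its upstream
    phase has elapsed, and takes rework_factor times its serial effort."""
    prev_start = prev_finish = 0.0
    workdays = 0.0
    for index, phase in enumerate(phases):
        if index == 0:
            start, length = 0.0, phase.days
        else:
            start = prev_start + start_fraction * (prev_finish - prev_start)
            length = phase.days * rework_factor
        # A downstream phase cannot finish before its upstream phase does.
        finish = max(start + length, prev_finish)
        workdays += length
        prev_start, prev_finish = start, finish
    return prev_finish, workdays


# Hypothetical project: durations are invented for the example.
PHASES = [
    Phase("requirements", 20),
    Phase("design", 20),
    Phase("programming", 40),
    Phase("test", 20),
]

serial_result = serial_schedule(PHASES)
concurrent_result = concurrent_schedule(PHASES, start_fraction=0.5, rework_factor=1.25)
```

With these assumed numbers, the serial schedule takes 100 elapsed days and 100 workdays, while the concurrent schedule takes 72.5 elapsed days and 120 workdays: shorter on the calendar, more expensive in labor. Raising `rework_factor` far enough erases the calendar advantage, which is one way to picture the pitfalls of overdoing the strategy.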

Such concurrent development is described as the Gold Rush strategy in Surviving Object-Oriented Projects (Cockburn 1998). The Gold Rush strategy presupposes both good communication and rework capacity. The Gold Rush strategy is suited to situations in which the requirements gathering is predicted to go on for longer than can be tolerated in the project plan, so there would simply not be enough time for proper design if the next team had to wait for the requirements to settle.

Actually, many projects fit this profile.

Gold-Rush-type strategies are not risk free. There are three pitfalls to watch out for:

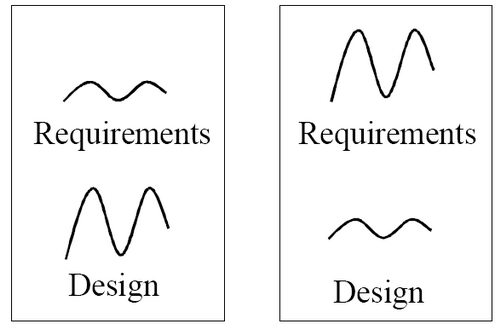

· The first pitfall is overdoing the strategy; for example, allowing the design team to get ahead of the requirements team (Figure 4-14). One such team announced one day that its design was already stable and ready for review. The team was just waiting for the requirements people to hurry up and generate the requirements!

Figure 4-14. Keeping upstream activities more stable than downstream activities. The wavy lines show the instability of work products in requirements and design. In the healthy situation (left) both fluctuate at the same time, but the requirements fluctuation is smaller than the design fluctuation. In the unhealthy situation, the design is already stable before the requirements have even started settling down!

· The second pitfall is when the communications path between the teams is not rich enough. If the teams are geographically separated, for example, it is harder for the upstream team to pass along its changing information. As the communications cost rises, it eventually becomes more effective to wait for greater stability in the upstream work before triggering the downstream team.

· The third pitfall is making a mistake in estimating a team's rework capacity. Where a team has little or no spare capacity, it must be given much more stable inputs to work from.

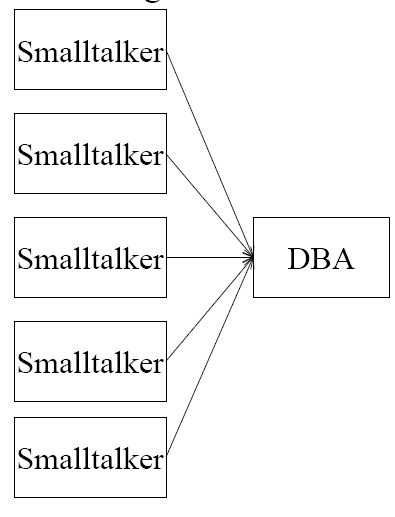

16 Smalltalkers, 2 Database Designers

One project had 16 Smalltalk programmers and only two database designers.

In this situation, we could let the Smalltalk programmers start working as soon as the requirements were starting to shape up. At the same time, we could not afford to trigger the database designers to start their work until the object model had been given passing marks in its design review.

Only after the object model had passed "stable enough for review" and actually been reviewed, with the DBAs in the review group, could the DBAs take the time to start their design work.

The complete discussion about when and where to apply concurrent development is presented in Principle 7 of methodology design, "Rework is acceptable away from bottleneck activities," on page ???.

The point to understand now is that stability plays a role in methodology design.

Both XP and Adaptive Software Development (Highsmith 2000) suggest maximizing concurrency. This is because both are intended for situations with strong time-to-market priorities and requirements that are likely to change as a consequence of producing the emerging system.

Fixed-price contracts often benefit from a mixed strategy: In those situations, it is useful to have the requirements quite stable before getting far into design. The mix will vary by project. Sometimes, the company making the bid may do some designing or even coding just to prepare its bid.

Figure 4-15. Role-deliverable-milestone view of a methodology.

Publishing a Methodology

Publishing a methodology has two components: the pictorial view and the text itself.

The Pictorial View

One way to present the design of a methodology is to show how the roles interact across work products (Figure 4-15). In such a "Role-Deliverable-Milestone" view, time runs from left to right across the page, roles are represented as broad bands across the page, and work products are shown as single lines within a band. The line of a work product shows critical events in its life: its birth event (what causes someone to create it), its review events (who examines it), and its death event (at what moment it ceases to have relevance, if ever).

Although the Role-Deliverable-Milestone view is a convenient way to capture the work-product dependencies within a methodology, it evidently is also good for putting people to sleep:

Methodology Chart as Sleeping Aid

I once created the proverbial wall chart of the methodology for a large project, meticulously showing the several hundred interlocking parts of the group's methodology using the Role-Deliverable-Milestone view to condense the information.

Many people had been asking to see the entire methodology, so I printed the chart, several feet on each side, and put it on a large wall. It was interesting to watch people's eyes glaze over whenever I was pointing to the time line for another project role, such as the project managers or technical writers, and only come back into focus when I got to their own section. It turned out that most people really only wanted to see the section of the methodology that affected them and not what everyone in the organization was doing.

The pictorial view misses the practices, standards, and other forms of collaboration so important to the group. Those don't have a convenient graphical portrayal and must be listed textually.

The Methodology Text

In published form, a methodology is a text that describes the techniques, activities, meetings, quality measures, and standards of all the job roles involved. You can find examples in Object-Oriented Methods: Pragmatic Considerations (Martin 1996), and The OPEN Process Specification (Graham 1997). The Rational Unified Process has its own Web site with thousands of Web pages.

Methodology texts are large. At some level there is no escape from this size. Even a tiny methodology, with four roles, four work products per role, and three milestones per work product has 68 (4 + 16 + 48) interlocking parts to describe, leaving out any technique discussions. And even XP, which initially weighed in at only about 200 pages (Beck 1999), now approaches 1,000 pages when expanded to include additional guidance about each of its parts (Jeffries 2000, Beck 2000, Auer 2001, Newkirk 2001).
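The count for the tiny methodology can be checked with a few lines of arithmetic. The variable names below are chosen for this sketch only; the numbers are the ones given in the paragraph above.

```python
roles = 4
work_products_per_role = 4
milestones_per_work_product = 3

# Every role has its own work products, and every work product its own milestones.
work_products = roles * work_products_per_role
milestones = work_products * milestones_per_work_product

# Each role, work product, and milestone is one part that needs describing.
interlocking_parts = roles + work_products + milestones
```

The total grows multiplicatively: under the same assumptions, adding a single fifth role adds 1 + 4 + 12 = 17 more parts to describe.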

There are two reasons why most organizations don't issue a thousand-page text describing their methodology to each new employee:

· The first is what Jim Highsmith neatly captures with the distinction, "documentation versus understanding."

The real methodology resides in the minds of the staff and in their habits of action and conversation.

Documenting chunks of the methodology is not at all the same as providing understanding, and having understanding does not presuppose having documentation. Understanding is faster to gain, because it grows through the normal job experiences of new employees.

· The second is that the needs of the organization are always changing.

It is impractical, if not impossible, to keep the thousand-page text current with the needs of the project teams. As new technologies show up, the teams must invent new ways of working to handle them, and those cannot be written in advance. An organization needs ways to evolve new variants of the methodologies on the fly and to transfer the good habits of one team to the next team. You will learn how to do that as you proceed through this book.

Reducing Methodology Bulk

There are several ways to reduce the physical size of the methodology publication:

Provide examples of work products

Provide worked examples rather than templates. Take advantage of people's strengths in working with tangibles and examples, as discussed earlier.

Collect reasonably good examples of various work products: a project plan, a risk list, a use case, a class diagram, a test case, a function header, a code sample.

Place them online, with encouragement to copy and modify them. Instead of writing a standards document for the user interface, post a sample of a good screen for people to copy and work from. You may need to annotate the example showing which parts are important.

Doing these things will lower the work effort required to establish the standards and will lower the barrier to people using them.

One of the few books to show deliverables and their standards is Developing Object-Oriented Software (OOTC 1997), which was prepared for IBM by its Object-Oriented Technology Center in the late 1990s and was then made public.

Remove the technique guides

Rather than trying to teach the techniques by providing detailed descriptions of them within the methodology document, let the methodology simply name the recommended techniques, along with any known books and courses that teach them.

Techniques-in-use involve tacit knowledge. Let people learn from experts, using apprenticeship-based learning, or let them learn from a hands-on course in which they can practice the technique in a learning environment.

Where possible, get people up to speed on the techniques before they arrive on the project, instead of teaching the technique as part of a project methodology on project time. The techniques will then become skills owned by people, who simply do their jobs in their natural ways.

Organize the text by role

It is possible to write a low-precision but descriptive paragraph about each role, work product, and milestone, linking the descriptions with the Role-Deliverable-Milestone chart. The sample role descriptions might look something like these:

Executive Sponsor. A person who acts in the capacity to support and monitor the progress of an approved project. Responsible for scoping, prioritizing, and funding at the project level.

Cross-team Lead. A person who is responsible for the progress of multiple teams, for uniting the efforts of these teams, for establishing priorities across teams, and for allocating resources (people) across teams.

Team Lead. A person who is responsible for the direction and progress of one team.

Developer. A technical person who develops the software product. This may include UI, business classes, infrastructure, or data.

Writer. A person who publishes technical communication about various subjects through media such as manuals, white papers, shared drives, intranet, or Internet.

Rollout. One or more persons who communicate and coordinate field technicians and customer representatives and who roll out the products.

External Tester. One or more persons who perform QA-related test functions outside of the development groups.

Maintainer. A person who makes necessary changes to the product after it ships.

For the work products, you need to record who writes them, who reads them, and what they contain. A fuller version would contain a sample, noting the tolerances permitted and the milestones that apply. Here are a few simple descriptions:

Overall Project Plan

Writer: Cross-team Lead.

Readers: Executive Sponsor, Team Leads, newcomers.

Contains: Across all teams, what is planned to be in the next several releases, the cross-team dependencies between their contents, the planned timing of development.

Dependency Table

Writer: Team Lead.

Readers: Team Leads, Cross-team Leads.

Contains: What this team needs from every other team, and the date each item is needed. May include a fallback plan in case the item is not delivered on time.

Team Status Sheet

Writer: Team Lead.

Readers: Cross-team Lead, Developers.

Contains: The current state of the team: rolled up list of things being worked on, next milestone, what is holding up progress, and stability level for each.

For the review milestones, record what is being reviewed, who is to review it, and what the outcome is. For example:

Release Proposal Review

Reviewers: Application Team Lead, Cross-team Lead, and Executive Sponsor.

Purpose: Basically a scope review.

Reviewing: Use case summary, use cases, actors, external system description, development plan.

Outcome: Modifications to scope, priorities, dates, possibly corrections to actor list or external systems.

Application Design Review

Reviewers: Team Lead, related Cross-team Leads, Cross-Team Mentors, Business experts.

Purpose: Check quality, correctness, and conformance of the application design.

Reviewing: Use cases, actors, domain class diagram, screen flows, screen designs, class tables (if any), and interaction diagrams (if any).

Outcome: Factual corrections to the domain model, to the screen details. Suggestions or requirements for improved UI or application design, based on either quality or conformance considerations.

With these short paragraphs in place, the methodology can be summarized by role (as the following two examples show). The written form of the methodology, summarized by role, is a checklist for each person that can be fit onto one sheet of paper and pinned up in the person's workspace. That sheet of paper contains no surprises (after the first reading) but serves to remind team members of what they already know.
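One way to picture the summarizing step is as an inversion of the work-product descriptions: each description names a writer and its readers, and the per-role checklist collects everything a given role writes or reads. The sketch below is only an illustration. The dictionary layout and the function name `checklist_by_role` are assumptions made for this example, and the role names have been normalized to the singular ("Team Lead" rather than "Team Leads").

```python
from collections import defaultdict

# Work products taken from the sample descriptions; the layout is hypothetical.
WORK_PRODUCTS = {
    "Overall Project Plan": {
        "writer": "Cross-team Lead",
        "readers": ["Executive Sponsor", "Team Lead", "Newcomer"],
    },
    "Dependency Table": {
        "writer": "Team Lead",
        "readers": ["Team Lead", "Cross-team Lead"],
    },
    "Team Status Sheet": {
        "writer": "Team Lead",
        "readers": ["Cross-team Lead", "Developer"],
    },
}


def checklist_by_role(work_products):
    """Invert the work-product descriptions into one checklist per role."""
    summary = defaultdict(lambda: {"writes": [], "reads": []})
    for name, description in work_products.items():
        summary[description["writer"]]["writes"].append(name)
        for reader in description["readers"]:
            summary[reader]["reads"].append(name)
    return dict(summary)


CHECKLISTS = checklist_by_role(WORK_PRODUCTS)
```

Here `CHECKLISTS["Team Lead"]` lists the Dependency Table and Team Status Sheet under "writes" and the Overall Project Plan and Dependency Table under "reads". A fuller version would add the review milestones in the same way.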

Here is a slightly abridged example for the programmers:

Designer-Programmer

Writes:

Weekly status sheet

Source code

Unit tests

Release notes

...

Reads:

Actor descriptions

UI style guide

...

Reviews:

Application design review

(etc.)

Publishes:

Application configuration

Test cases

(etc.)

Declares:

UI Stable

You can see that this is not a methodology used to stifle creativity. To a newcomer, it is a list outlining how he is to participate on the team. To the ongoing developer, it is a reminder.

Using the Process Miniature

Publishing a methodology does not convey the visceral understanding that forms tacit knowledge. It does not convey the life of the methodology, which resides in the many small actions that accompany teamwork. People need to see or personally enact the methodology.

My current favorite way of conveying the methodology is through a technique I call the Process Miniature.

In a Process Miniature, the participants play-act one or two releases of the process in a very short period of time.

On one team I interviewed, new people were asked to spend their first week developing a (small) piece of software all the way from requirements to delivery. The purpose of the week-long exercise was to introduce the new person to the people, the roles, the standards, and the physical placement of things in the company.

More recently, Peter Merel invented a one-hour process miniature for Extreme Programming, giving it the nickname Extreme Hour. The purpose of the Extreme Hour is to give people a visceral encounter with XP so that they can discuss its concepts from a base of almost-real experience.

In the Extreme Hour, some people are designated "customers." Within the first 10 minutes of the hour, they present their requests to the developers and work through the XP planning session with them.

In the next 20 minutes, the developers sketch and test their design on overhead transparencies. The total length of time for the first iteration is 30 minutes.

In the next 30 minutes, the entire cycle is repeated so that two cycles of XP are experienced in just 60 minutes.

Usually, the hosts of the Extreme Hour choose a fun assignment, such as designing a fish-catching device that keeps the fish alive until delivering them to the cooking area at the end of the day and also keeps the beer cold during the day. (Yes, they do have to cut scope during the iterations!)

We used a 90-minute process miniature to help the staff of a 50-person company experience a new development process we were proposing (you might notice the similarity of this process miniature experience to the informance described on page ???).

In this case, we were primarily interested in conveying the programming and testing rules we wanted people to use. We therefore could not use a drawing-based problem such as the fish trap but had to select a real programming problem that would produce running, tested code for a Web application.

A Process Miniature Experience

We wanted to demonstrate two full iterations of the process in 90 minutes. We wanted to show people negotiating over requirements and then creating and testing production code, using the official five-layer architecture, execution database, configuration management system, official Web style sheets, and fully automated regression test suites. We therefore had to choose a tiny application. We elected to construct a simple up-down counter that would stick at 0 and 20 and could be reset to 0. The counter would use a Web browser interface and store its value in the official company database.
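To give a sense of how small the chosen problem was, here is a minimal sketch of the counter's core behavior. It is not the code the team wrote: the class name `UpDownCounter` and its method names are invented for this illustration, and the Web interface, the five-layer architecture, and the database storage are all left out.

```python
class UpDownCounter:
    """Up-down counter that sticks at 0 and 20 and can be reset to 0."""

    LOW = 0
    HIGH = 20

    def __init__(self):
        self.value = self.LOW

    def up(self):
        # Counting up past the upper limit leaves the value stuck at HIGH.
        self.value = min(self.value + 1, self.HIGH)
        return self.value

    def down(self):
        # Counting down past the lower limit leaves the value stuck at LOW.
        self.value = max(self.value - 1, self.LOW)
        return self.value

    def reset(self):
        self.value = self.LOW
        return self.value
```

Even this core logic invites the kind of automated regression tests the exercise was meant to show: one test for each limit, and one for the reset.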

To meet the constraint of 45 minutes per iteration, we choreographed the show to a small extent. The marketing analysts were told to ask for more than the team could possibly deliver in 30 minutes of programming ("Could we please have a graphical, radial dial for the counter, in three colors?"). We did this in order to let the audience experience scope negotiation as they would encounter it in real life.

We also rehearsed how much the programmers would bid to complete the first iteration and how they might cut scope during the middle of the iteration so that the audience could see this in action.

The point of scripting these pieces was to give the entire company a view of what we wanted to establish as the social conventions for normal scope negotiation during project runs. We left the actual programming as live action. Even though the team knew the assignment, they still had to type it all in, in real time, as part of the experience. The audience, sitting through all of the typing, came to appreciate the amount of work that went into even such a trivial system.

Whatever form of Process Miniature you use, plan on replaying it from time to time in order to reinforce the team's social conventions. Many of these conventions, such as the scope negotiation rules just described, won't find a place in the documentation but can be partially captured in the play.

Methodology Design Principles

Designing a methodology is not at all like designing software, hardware, bridges, or factories. Four things, in particular, get in the way:

· Variations in people. People are not the reliable components that designers count on when designing the other systems.

· Variations across projects. The appropriate methodology varies by project, nationality, and local culture.

· Long debug cycles. The test and debug cycle for a methodology is on the order of months and years.

· Changing technologies. By the time the methodology designer debugs one methodology design, the technologies, techniques, and cultures have changed and the design needs updating.

Common Design Errors

People who come freshly to their assignment of designing a methodology make a standard set of errors:

One size for all projects

Here is a conversation that I have heard all too often over the years: "Hi, Alistair. We have projects in many technologies all over the globe. We desperately need a common methodology for all of them. Could you please design one for us?"

"I'm afraid that would not be practical: The different technologies, cultures, and project priorities call for different ways of working."

"Right, got that. Now, please do tell us what our common methodology will be."

"...!!?"

This request is so widespread that I spend most of the next chapter on methodology tailoring.

The need for localized methodologies may be clear to you by now, but it will not be clear to your new colleague who gets handed the assignment to design the corporation's common methodology.

Intolerant

Novice methodology designers have this notion that they have the answer for software development and that everyone really ought to work that way.

Software development is a fluid activity. It requires that people notice small discrepancies wherever they lie and that they communicate and resolve the discrepancies in whatever way is most practical. Different people thrive on different ways of working.

A methodology is, in fact, a straightjacket. It is exactly the set of conventions and policies the people agree to use: It is the size and shape of straightjacket they choose for themselves.

Given the varying characteristics of different people, though, that straightjacket should not be made any tighter than it absolutely needs to be.

Techniques are one particular section of the methodology that usually can be made tolerant. Many techniques work quite well, and different ones suit different people at different times.

The subject of how much tolerance belongs in the methodology should be a conscious topic of discussion in the design of your methodology.

Heavy

We have developed, over the years, an assumption that a heavier methodology, with closer tracking and more artifacts, will somehow be "safer" for the project than a lighter methodology with fewer artifacts.

The opposite is actually the case, as the principles in this section should make clear. However, that initial assumption persists, and it manifests itself in most methodology designs.

The heavier-is-safer assumption probably comes from the fear that project managers experience when they can't look at the code and detect the state of the project with their own eyes. Fear grows with the distance from the code. So they quite naturally request more reports summarizing various states of affairs and more coordination points. The alternative is to ... trust people. This can be a truly horrifying thought during a project under intense pressure. Being a Smalltalk programmer, I felt this fear firsthand when I had to coordinate a COBOL programming project.

Fear or no fear, adding weight to the methodology is not likely to improve the team's chance of delivering. If anything, it makes the team less likely to deliver, because people will spend more time filling in reports than making progress. Slower development often translates to loss of a market window, decreased morale, and greater likelihood of losing the project altogether.

Part of the art of project management is learning when and how to trust people and when not to trust them. Part of the art of methodology design is to learn what constraints add more burden than safety. Some of these constraints are explored in this chapter.

Embellished

Without exception, every methodology I have ever seen has been unnecessarily embellished with rules, practices, and ideas that are not strictly necessary. They may not even belong in the methodology. This even applies to the methodologies I have designed. It is so insidious that I have posted on the wall in front of me, in large print: "Embellishment is the pitfall of the methodologist."

Embellishing a Methodology

I detected this tendency in myself while designing my first methodology. I asked a programmer colleague, a very practical person freshly returned from a live project, to double-check, edit, and trim my design. He indeed found the embellishments I was worried about. However, he then added one chapter to the methodology, calling for the production of contract-based design and deliverables he had just read about. I phoned him. "Surely you don't mean to say you used these on your last project?" I asked.

He replied, "Well, no, not on that project. But it's a really good idea and I think we ought to do it."

From this experience, I learned that the words "ought to" and "should" indicate embellishment. If someone says that people "should" do something, it probably means that they have never done it yet, they have successfully delivered software without it, and there probably is no chance of getting people to use it in the future.

Here is a sample story about that.

Discovering "Should"

Tester: "And then the developers have a meeting with the testers in which they describe the basic design."

Me: "Really, do they do that?"

Tester: "What do you mean? Of course they do."

Me: "Oh, yeah. They really do that, do they?"

Tester: "They've got to, or else the testers can't do their job!"

Me: "Right. Um ... In that case, there was such a meeting, and I can interview those people to find out what happened in the meeting. Can you tell me the date of such a meeting, and who was in the room?"

Tester: "Well, we were going to have it. I mean, you really should have that meeting, it's really valuable ..."

We didn't have to go much farther than that. Of course, no such meeting had taken place. Further, it was doubtful that we could enforce such a meeting in that company at that time, however useful it might have been.

There is another side to this embellishment business. Typically, the process owner has a distorted view of how developers really work. In my interviews, I rarely ever find a team of people who works the way the process owner says they work. This is so pervasive that I have had to mark as unreliable any interview in which I only got to speak with the manager or process designer.

The following is a sample, and typical, conversation from one of my interviews. At the time, I was looking for successful implementations of Object Modeling Technique (OMT). The person who was both process and team lead told me that he had a successful OMT project for me to review, so I flew to California to interview the team.

Uncovering Process Shortcuts

Me: "These are samples of the work products?... This is a state diagram?"

Leader: "Well, it's not really one. It's more of a flow diagram. I have to teach them how to make state diagrams properly."

Me: "But these are actual samples of the work products produced. Did you use an iterative and incremental process?"

Developer nods.

Leader: "We used a modification of Boehm's spiral model."

Me: "OK. And did the requirements or the design change in the second iteration?"

Developer: "Of course."

Me: "OK. ... How did you manage to update all these diagrams in the second iteration?"

Developer: "Oh, we didn't. We just changed the code..."

Extreme Programming stands in contrast to the usual, deliverable-based methodologies. XP is based around activities. The rigor of the methodology resides in people carrying out their activities properly.

Not being aware of the difference between deliverable-based and activity-based methodologies, I was unsure how to investigate my first XP project. After all, the team has no drawings to keep up to date, so obviously there would be no out-of-date work products to discover!

An activity-based methodology relies on activities in action. XP relies on programming in pairs, writing unit tests, refactoring, and the like.

When I visit a project that claims to be an XP project, I usually find pair programming working well (or else they wouldn't declare it an XP project). Then, while they are pair programming, the people are more likely to write unit tests, and so I usually see some amount of test-writing going on.

The most common deviation from XP is that the people do not refactor their code often, which results in the code base becoming cluttered in ways that properly developed XP code shouldn't.

In general, though, XP has so few rules to follow that most of the areas of embellishment have been removed. XP is a special case of a methodology, and I'll analyze it separately at the end of the chapter.

Personally, I tend to embellish around design reviews and testing. I can't seem to resist sneaking an extra review or an extra testing activity through the "should" door ("Of course they should do that testing!" I hear you cry. Shouldn't they?!).

The way to catch embellishment is to have the directly affected people review the proposal. Watch their faces closely to discover what they know they won't do but are afraid to say they won't do.

Untried

Most methodologies are untried. Many are simply proposals created from nothing. This is the full-blown "should" in action: "Well, this really looks like it should work."

After looking at dozens of methodology proposals in the last decade, I have concluded that nothing is obvious in methodology design. Many things that look like they should work don't (testing and keeping documentation up to date, for example), and many things that look like they shouldn't work actually do work (pair programming and test-first development, for example).

The late Wayne Stevens, designer of the IBM Consulting Group's Information Engineering methodology in the early 1990s, was well aware of this trap.

Whenever someone proposed a new object-centered / object-based / object-hybrid methodology for us to include in the methodology library, he would say, "Try it on a project, and tell us afterwards how it worked." They would typically object, "But that will take years! It is obvious that this is great!" To my recollection, not one of these obvious new methodologies was ever used on a project.

Since that time, I have used Wayne Stevens' approach and have seen the same thing happen.

How are new methodologies made? Here's how I work when I am personally involved in a project:

· I adjust, tune, and invent whatever is needed to take the project to success.

· After the project, I extract those things I would repeat under similar circumstances and add them to my repertoire of tactics and strategies.

· I listen to other project teams when they describe their experiences and the lessons they learned.

But when someone sends me a methodology proposal, I ask him to try it on a project first and report back afterwards.

Used once

The successor to "untried" is "used once." The methodology author, having discovered one project on which the methodology works, now announces it as a general solution. The reality is that different projects need different methodologies, and so any one methodology has limited ability to transfer to another project.

I went through this phase with my Crystal Orange methodology (Cockburn 1998), and so did the authors of XP. Fortunately, each of us had the good sense to create a "Truth in Advertising" label describing our own methodology's area of applicability.

We will revisit this theme throughout the rest of the book: How do we identify the area of applicability of a methodology, and how do we tailor a methodology to a project in time to benefit the project?

Methodologically Successful Projects

You may be wondering about these project interviews I keep referring to. My work is based on looking for "methodologically successful" projects. These have three characteristics:

· The project was delivered. I don't ask if it was completed on time and on budget, just that the software went out the door and was used.

· The leadership remained intact. They didn't get fired for what they were doing.

· The people on the project would work the same way again.

The first criterion is obvious. I set the bar low for this criterion, because there are so many strange forces that affect how people refer to the "successfulness" of a project. If the software is released and gets used, then the methodology was at least that good.

The second criterion was added after I was called in to interview the people involved with a project that was advertised as being "successful." I found, after I got there, that the project manager had been fired a year into the project because no code had been developed up to that time, despite the mountains of paperwork the team had produced. This was not a large military or life-critical project, where such an approach might have been appropriate, but it was a rather ordinary, 18-developer technical software project.

The third criterion is the difficult one. For the purpose of discovering a successful methodology, it is essential that the team be willing to work in the prescribed way. It is very easy for the developers to block a methodology. Typically all they have to say is, "If I do that, it will move the delivery date out two weeks." Usually they are right, too.

If they don't block it directly, they can subvert it. I usually discover during the interview that the team subverted the process, or else they tolerated it once but wouldn't choose to work that way again.

Sometimes, the people follow a methodology because the methodology designer is present on the project. I have to apply this criterion to myself and disallow some of my own projects. If the people on the project were using my suggestions just to humor me, I couldn't know if they would use them when I wasn't present.

The pertinent question is, "Would the developers continue to work that way if the methodology author was no longer present?"

So far, I have discovered three methodologies that people are willing to use twice in a row. They are

· Responsibility-Driven Design (Wirfs-Brock 1991)

· Extreme Programming (Beck 1999)

· Crystal Clear (Cockburn 2002)

(I exclude Crystal Orange from this list, because I was the process designer and lead consultant. Also, as written, it deals with a specific configuration of technologies and so needs to be reevaluated in a different, newly adapted setting.)

Even if you are not a full-time methodology designer, you can borrow one lesson from this section about project interviews. Most of what I have learned about good development habits has come from interviewing project teams. The interviews are so informative that I keep on doing them.

This avenue of improvement is also available to you. Start your own project interview file, and discover good things that other people do that you can use yourself.

Author Sensitivity

A methodology's principles are not arrived at through an emotionally neutral algorithm but come from the author's personal background. To reverse the saying from The Wizard of Oz, "Pay great attention to the man behind the curtain."

Each person has had experiences that inform his or her present views and serve as anchor points. Methodology authors are no different.

In recognition of this, Jim Highsmith has started interviewing methodology authors about their backgrounds. In Agile Software Development Ecosystems (Highsmith 2002), he will present not only each author's methodology but also his or her background.

A person's anchor points are not generally open to negotiation. They are fixed in childhood, early project experiences, or personal philosophy. Although we can renormalize a discussion with respect to vocabulary and scope, we cannot do that with personal beliefs. We can only accept the person's anchor points or disagree with them.

When Kent Beck quipped, "All methodology is based on fears," I first thought he was just being dismissive. Over time, I have found it to be largely true. One can almost guess at a methodology author's past experiences by looking at the methodology. Each element in the methodology can be viewed as a prevention against a bad experience the methodology author has had.

· Afraid that programmers make many little mistakes? Hold code reviews.

· Afraid that users don't know what they really want? Create prototypes.

· Afraid that designers will leave in the middle of the project? Have them write extensive design documentation as they go.

Of course, as the old saying goes, just because you are paranoid doesn't mean that they aren't after you. Some of your fears may be well founded. We found this in one project, as told to us over time by an adventuresome team leader. Here is the story as we heard it in our discussion group:

Don't Touch My Private Variables

A team leader wanted to simplify the complex design surrounding the use of not-quite-private methods that wrote to certain local variables.

Someone in our group proposed making all methods public. This would simplify the design tremendously.

The team leader thought for a moment and then identified that he was operating on a fear that the programmers would not follow the necessary programming convention to keep the software safe. He wanted the programmers to use those public methods only for the particular programming situation that was causing trouble.

He was afraid that in the frenzy of deadlines, they would use them all the time, which would cause maintenance problems. He was willing to try the experiment of making them public and just writing on the team's whiteboard the very simple rule restricting their use.

I said, "Maybe your fears are well founded. How about if you don't just trust the people to behave well, but also write a little script to check the actual use of those methods over time? This way you will discover whether your fears are well founded or not."

The team leader agreed, and then went on vacation for two weeks. When he returned, he ran the script and found that the programmers had, in fact, been using the new, public methods, ignoring the note on the whiteboard.

(One person at the table chimed in here, "Well, sure, those were the only documented methods!")

This story raises an interesting point about trust: As much as I love to trust people, carelessness is a common human weakness. Sometimes it is important to simply trust people, but sometimes it is important to install a mechanism to find out whether people can be trusted on a particular topic.
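A checking mechanism of the kind described in the story can be very small. The following Python sketch is my own illustration, not the script that team used: it assumes Java-like source files in which a call looks like `object.method(...)`, and the method names `setBalance` and `resetCache` are hypothetical placeholders for whichever methods the whiteboard rule restricts.

```python
import re
from collections import Counter
from pathlib import Path

# Hypothetical names: the story does not say which methods were made public.
RESTRICTED_METHODS = ("setBalance", "resetCache")


def load_sources(root, suffix=".java"):
    """Read every file under `root` ending in `suffix` into a dict of name -> text."""
    return {
        str(path): path.read_text(encoding="utf-8", errors="replace")
        for path in Path(root).rglob("*" + suffix)
    }


def count_restricted_calls(sources, restricted=RESTRICTED_METHODS):
    """Count call sites of each restricted method.

    `sources` maps a file name to its text. The result is a Counter
    keyed by (file name, method name).
    """
    # Require a leading "." so that declarations such as
    # "void setBalance(int x)" are not counted as calls.
    names = "|".join(re.escape(name) for name in restricted)
    pattern = re.compile(r"\.(" + names + r")\s*\(")
    counts = Counter()
    for filename, text in sources.items():
        for match in pattern.finditer(text):
            counts[(filename, match.group(1))] += 1
    return counts
```

Running `count_restricted_calls(load_sources("src"))` at intervals and comparing the results shows whether use of the restricted methods is spreading beyond the one situation they were opened up for. A plain text match like this will miss some calls and miscount others, but it is enough to tell whether the fear is well founded.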

The final piece of personal baggage of the methodology authors is their individual philosophy. Some have a laissez-faire philosophy, some a military control philosophy. The philosophy comes with the person, shaping his experiences and being shaped by his experiences, fears, and wishes.

It is interesting to see how much of an author's methodology philosophy is used in his personal life. Does Watts Humphrey use a form of the Personal Software Process when he balances his checkbook? Does Kent Beck do the simplest thing that will work, getting incremental results and feedback as soon as he can? Do I travel light, and am I tolerant of other people's habits?

Here are some key bits of my background that either drive my methodology style or at least are consistent with it.

I travel light, as you might guess. I use a small laptop, carry a small phone, drive a small car, and see how little luggage I need when traveling. In terms of the eternal tug-of-war between mobility and armor, I am clearly on the side of mobility.

I have lived in many countries and among many cultures and keep finding that each works. This perhaps is the source of my sensitivity to development cultures and why I encourage tolerance in methodologies.

I also like to think very hard about consequences, so that I can give myself room to be sloppy. Thus, I balance the checkbook only when I absolutely have to, doing it in the fastest way possible, just to make sure checks don't bounce. I don't care about absolute accuracy. Once, when I built bookshelves, I worked out the fewest places where I had to be accurate in my cutting (and the most places where I could be sloppy) to get level and sturdy bookshelves.

When I started interviewing project teams, I was prepared to discover that process rigor was the secret to success. I was actually surprised to find that it wasn't. However, after I found that using light methodologies, communicating, and being tolerant were effective, it was natural that I would capitalize on those results.

Beware the methodology author. Your experiences with a methodology may have a lot to do with how well your personal habits align with those of the methodology author.

Seven Principles

Over the years, I have found seven principles that are useful in designing and evaluating methodologies:

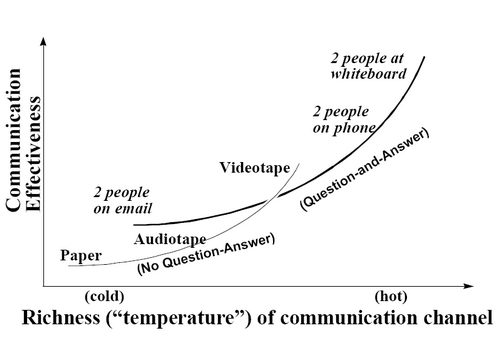

1. Interactive, face-to-face communication is the cheapest and fastest channel for exchanging information.

2. Excess methodology weight is costly.

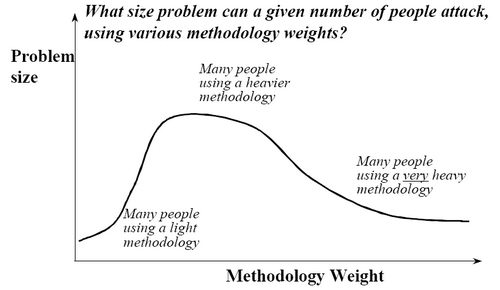

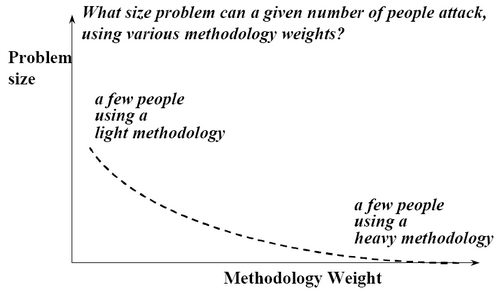

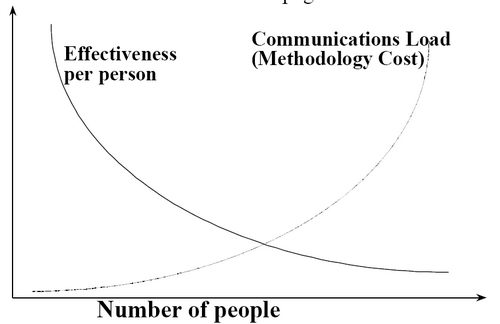

3. Larger teams need heavier methodologies.

4. Greater ceremony is appropriate for projects with greater criticality.

5. Increasing feedback and communication lowers the need for intermediate deliverables.

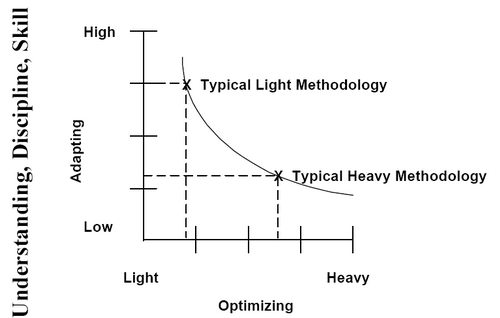

6. Discipline, skills, and understanding counter process, formality, and documentation.

7. Efficiency is expendable in non-bottleneck activities.

Following is a discussion of each principle.

Principle 1. Interactive, face-to-face communication is the cheapest and fastest channel for exchanging information.